Humanoid Robots

Thematic Primer: A Future Secular Story at a Current Cyclical Price

Yes, robots.

After all, it would be difficult to argue that being an avid consumer of science fiction doesn’t translate into at least some edge in tech investing. Over the long term, at least...

Embodied AI has always been the end goal of AI. This is the purest implementation of “using AI to do actual things and increase productivity” that we mentioned in our last article about AI’s Second Phase. Now, it’s clear to us from the trend of investment and technology that robots are more of a “when” than an “if”. However, for the past couple years it’s been a difficult theme to play beyond the compute layer.

Themes are fickle. Perhaps you’re a massive genius who knew without a doubt in 2021 that LLMs and the GPUs they run on would be the future. You still had to sit through a significant drawdown before AI was even part of the conversation about the MAG7’s price action. There’s being early and there’s being wrong, and sometimes they look very similar.

So, as with all equity themes we author, we have to proactively answer the question: why now?

Put simply, this theme inevitably concludes in a secular growth story for the companies involved, and a majority of those companies are trading at a cyclical trough and experiencing an inflection point. Many of the companies with upside to robotics are currently trading at depressed valuations in a brutal auto cycle, with analyst expectations doing what they always do at cycle lows (acting like it’ll be this way forever).

Our investment thesis is simple: we see an environment where we can build a basket of names that will have upside to the proliferation of robots (as they climb up the industrial → service → domestic ladder). A significant majority of these names currently have estimates that, in true cyclical fashion, assume the cycle will stay the way it currently is forever.

Far from pricing in even a hint of a robot-shaped right tail, they’re not even pricing in an eventual return to growth. This is true to the extent that Texas Instruments (TXN US) felt the need to remind analysts that analog semiconductor cycles do, in fact, bottom.

We’ve spoken at length about the issues present in China-US relations, the automotive cycle, and industrial bottlenecks, all of which have weighed on advanced industrial and machining names.

Still, there’s an overwhelming amount of evidence that robots – both humanoid and otherwise – are going to massively shift the way that work is done and the way we interact with the world over the next decade. We think it’s a bad idea to wait until humanoid robots are doing your laundry to touch the most exposed names in the robotics supply chain just because they’re trading more on the industrial and auto cycle than they do on robots.

It’s only a matter of time before we see brown-field retail and light-industrial sites desperate for robotic workers under $25/hour. And it won’t be too long after that implementation that the tipping point for consumer crossover is reached (around when costs dip below a used Honda Civic, or a Robotics-as-a-Service company provides you a subscription to handle your chores for $500 a month).

We think that’s more of a “happens over the next 3-4 years” thing than a “next decade” thing, but that’s not the core reason we’re writing this piece. Irrespective of the exact timing, we believe the tipping point (call it the consumer robot “ChatGPT moment”) is visible. While we were content to wait until after the ChatGPT moment for AI, the risk here is that the auto cycle bottoms and defense spending increases first, so that by the time the moment arrives we no longer get to take advantage of cyclical prices and bombed-out sentiment. So we’re doing the work now and scaling in, and we’ll be ready to go full steam ahead when that moment comes.

It’s time to revisit the idea of “picks and shovels,” just for a new theme that’s a bit more “hands on” (get it?).

Before we get into the whos, hows and whats of this theme, let’s address the why.

Why Humanoid?

First, they’re a) cool and b) getting pretty advanced.

Early deployments look eerily like the 2012 Kiva-to-Amazon pivot: first warehouses (Amazon is already testing Digit humanoids today), then all along the supply chain.

For about a year now, GXO (GXO US) and Apptronik have been piloting humanoid robots for pick and place.

I can personally verify that Unitree’s robot is 100% real from experience, despite people who have never visited a single factory claiming they’re CGI.

Others like Neo, Agility, Figure etc. all have some degree of evidence showing massive improvement and early stage deployment contracts or partnerships. The fact of the matter is that Unitree is shipping humanoid robots at $16k a unit, and that makes understanding how they could proliferate pretty easy.

Where’s the technology at?

Did you know that right now, for less than $20k you could purchase 5 robot dogs, write an algorithm and deploy them as a fleet to monitor a large property tomorrow?

Check out NEO’s robot, which looks suspiciously like a guy in a robot suit (but I have been assured is not):

So why the focus on this form factor?

Of course, any robot that is designed for a specific task will be better at it than a humanoid. If that weren’t the case, we wouldn’t have designed robot arms or vacuums.

But humanoid robots matter because the world is already ergonomically tooled for two arms, ten digits, and 95th-percentile reach. No guardrails to re-weld, no new conveyance track, no retrofitting. Slip one into a BMW body cell and it hits the bolt pattern with the same air ratchet the night shift uses. You want a single robotic device that can wash your dishes, clean your floors and even drive your car? It’s going to be a humanoid.

Take a moment to watch Figure’s robot put away groceries:

This brings us to the final reason why we’re focused on humanoids, one that will probably upset some people working in humanoid robotics. Despite founders largely being techno-idealists with an all-or-nothing vision, the robots don’t have to be fully autonomous.

How do we get to the rosy TAM expectations that have a billion humanoid robots roaming our homes, warehouses and streets ten years from now? The answer to that question is why we’re focusing more on the bridge than the destination when it comes to robots. We don’t think we need to crack AGI and fill every home with an autonomous robot to see the vision of more ubiquitous humanoid robots fulfilled.

Remember Tesla’s “We, Robot” event?

They had a robot serving people drinks and the public was very dismayed to learn it was actually just being operated by a guy. For me, it’s pretty insane that this technology already exists.

Two years ago, along with Inevitability Research, we discussed how AI could disrupt business process outsourcing (BPO)/Call Centers.

Now, let me ask a question: has there ever been an instance of a novel technology that has disrupted the way we work that has not seen a relatively rapid adaptation by humans? The answer, from the loom to Microsoft Excel, is no.

With automation and NVIDIA’s robotics training where it is, one tele-operator in India can likely “coach” four to five robots in the US, intervening when environments are too unique or unstructured and letting the AI take the rest. This could dramatically reduce per-task labor costs in developed markets even before autonomy improves. The economic echo rhymes with 2000-era call-center offshoring, only this time the headset controls a spine.
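The arithmetic behind that claim is simple enough to sketch. The snippet below spreads one operator’s hourly wage across the robots they supervise. The four-to-five robots-per-operator range comes from the paragraph above; the wage figure and the function name are purely hypothetical placeholders, not data, so swap in your own assumptions.

```python
def labor_cost_per_robot_hour(operator_wage_per_hour: float,
                              robots_per_operator: float) -> float:
    """Spread one tele-operator's hourly wage evenly across the robots they coach."""
    if robots_per_operator <= 0:
        raise ValueError("robots_per_operator must be positive")
    return operator_wage_per_hour / robots_per_operator


# HYPOTHETICAL wage, for illustration only. It is not a figure from this piece.
HYPOTHETICAL_OPERATOR_WAGE = 5.00  # USD per hour

# 1 robot = pure tele-operation; 4 and 5 = the "coaching" range from the text.
for robots in (1, 4, 5):
    cost = labor_cost_per_robot_hour(HYPOTHETICAL_OPERATOR_WAGE, robots)
    print(f"{robots} robot(s) per operator: ${cost:.2f} of operator labor per robot-hour")
```

The point of the sketch is the shape of the relationship, not the numbers: human labor per robot-hour falls in proportion to the number of robots one operator can cover, so every improvement in autonomy raises the ratio and lowers the cost even before full autonomy arrives.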

While perhaps dystopian to some, we see a nearer future that doesn’t have to rely on 2035 TAM estimates: tele-operators stepping in when the AI brain isn’t capable, gathering training data as they go, while the robots work dangerous and menial jobs.

So, sure, maybe humanoid robots are an inevitable future. But so are plenty of things - eventually we will cure cancer! The why has to be followed by a “why now”. There are three answers - the cyclical landscape, the cost curve and advancements in AI.

Why Now? The Macro

The reason we like any theme primarily starts from a good macro environment for it. There are hundreds of technological innovations consistently progressing, but it’s when you get to buy secular stories at cyclical prices that we really get interested.

The primary component suppliers and beneficiaries of robotics are companies that operate in advanced machinery and manufacturing. They’re largely in automotive and defense industries. Because the auto-cycle has been absolute sh*t while defense has gotten sold on overblown DOGE fears, we see that opportunity here, right now.

The most significant external drivers for companies exposed to the robotics supply chain have prevented any sort of re-rating on the theme despite advancements in embodied AI. These are the automotive cycle, primarily, then fears of fiscal spending cuts and consumer electronics woes.

However, recent commentary from names like RRX, MCHP, ON, and IFX suggests the downtrend has bottomed and the cycle is potentially inflecting to the upside.

The inventory purge is over. With shelves cleared, every incremental unit of demand for sensors, actuators, or inverters drops to the P&L of these primary suppliers.

While trade talks are volatile, the majority of the news flow regarding tariffs has been positive. Tariff policy is still a risk, but CEOs are already pricing in EBITDA-neutral mitigation (see RRX for an example).

It won’t take much for capex oxygen to return. Humanoid robots give us slight flashbacks to a capex-anemic semiconductor industry experiencing the fallout of a massive glut, reinvigorated by a futuristic theme in 2022. As public-market appetite for industrial AI finally strengthens, so will the fundamentals of these companies.

Why Now? The Cost Curve

Picture a Roomba with opposable thumbs and an offshore pilot’s license for the price of a used Honda Civic. We might not be quite there yet, but Unitree’s G1 humanoid, sticker-priced at $16k, represents a 97% slide from the $500k industrial mannequins of 2023.

This signals that robots are finally slipping onto the Moore-style cost curve. We focus on Unitree because the cost they’ve been able to achieve is extremely impressive and a sign that others will soon follow.
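For readers who want to check the math or plug in their own numbers, here is a minimal sketch. The $500k and $16k prices and the $25/hour labor benchmark come from this piece; the service life, annual operating hours, and upkeep figures are hypothetical assumptions chosen only to illustrate the calculation, as are the function names.

```python
def percent_decline(old_price: float, new_price: float) -> float:
    """Percentage drop from old_price to new_price."""
    return (old_price - new_price) / old_price * 100.0


def amortized_hourly_cost(unit_price: float,
                          service_life_years: float,
                          hours_per_year: float,
                          annual_upkeep: float = 0.0) -> float:
    """Sticker price plus upkeep, spread over every hour the robot works."""
    total_cost = unit_price + annual_upkeep * service_life_years
    total_hours = service_life_years * hours_per_year
    return total_cost / total_hours


# Prices quoted in the text.
decline = percent_decline(500_000, 16_000)
print(f"Price decline: {decline:.1f}%")

# HYPOTHETICAL operating assumptions, for illustration only.
hourly = amortized_hourly_cost(
    unit_price=16_000,
    service_life_years=3,    # assumed
    hours_per_year=2_000,    # assumed, roughly one full-time shift
    annual_upkeep=2_000,     # assumed maintenance and energy
)
LABOR_BENCHMARK = 25.0  # USD per hour, the threshold mentioned earlier
print(f"Amortized hardware cost: ${hourly:.2f}/hour vs ${LABOR_BENCHMARK:.2f}/hour benchmark")
```

This only covers hardware. Software, integration, and any tele-operation labor sit on top, which is why the inputs matter more than any single output.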

Why Now? AI Innovation

We’ve covered advancements in AI at length, from our first primer in May 2023 to our view on Edge AI and Inference-on-Device to our coverage of DeepSeek and our most recent view on how the Second Phase of AI plays out in the years to come.

Compute is growing - better hardware, better algorithms, larger clusters. And that means we start seeing real and tangible improvements due to AI actually being used (not just built). Robots, or embodied AI, are one of the purest examples of this.

Because of the rapid advancement of AI, robots finally have a brain that’s worth the battery power. Let’s be real here: if I had told you ten years ago that in 2025 we’d have artificial intelligence, you probably would have been really happy that it was going to do all the things you didn’t want to do. You might have been a bit less excited to learn that its primary use cases so far are in creative work. Of course, we can see the trend going towards AI satisfactorily completing lots of menial knowledge work, but don’t we also want AI to help us with all the physical things we’d rather not do?

Embodied AI models are all about robots interacting with the real world rather than the digital. And, in the background of LLMs, they’ve made extraordinary progress.

Vision-Language-Action

These multimodal models (Vision-Language-Action models or “VLAs”) are entering a new era with only a slight lag to LLMs. With an LLM, an input of user instructions leads to an output of text. With VLAs, inputs can be user instructions or states that are translated into sub-tasks and actions.

VLA models mean robots don’t have to see, think, and move separately - a single (but multi-modal) model now digests pixels and plain-language commands and streams torque targets straight to every joint.

Just as robots have a brain, nervous system and mechanical body (as we’ll go through in depth in the hardware section), they now have a conscious and subconscious mind. Some in the industry have settled on a dual-VLA model, where robots have 1) a fast-thinking, intuitive policy that keeps the limbs balanced and the gripper on target, and 2) a slow, deliberate “scene” model for perception and reasoning.

Figure AI’s Helix is the cleanest example and is already moving sheet-metal on BMW pilot lines using one weight file for every task.

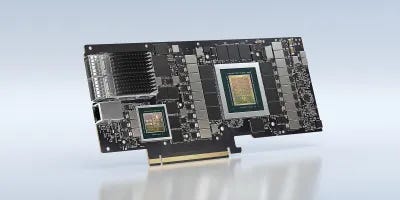
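To make the fast/slow split concrete, here is a toy sketch of a two-rate control loop. It is not Helix’s (or anyone’s) actual implementation: every name, the stand-in “models”, and the toy dynamics are hypothetical. The only idea it illustrates is that a slow reasoning model refreshes the plan occasionally, while a fast policy emits torque targets on every tick using the most recent plan.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Plan:
    subtask: str


def slow_scene_model(image: List[float], instruction: str) -> Plan:
    """Stand-in for the slow, deliberate model: pixels + language -> sub-task."""
    # A real system would run a large vision-language model here.
    brightness = sum(image) / len(image)
    return Plan(subtask=f"{instruction} (scene brightness {brightness:.2f})")


def fast_policy(plan: Plan, joint_angles: List[float]) -> List[float]:
    """Stand-in for the fast policy: latest plan + joint state -> torque targets."""
    # Toy proportional controller that pulls every joint toward zero.
    # A real policy would condition on the plan; this one ignores it.
    gain = 0.5
    return [-gain * angle for angle in joint_angles]


def run_control_loop(instruction: str, ticks: int,
                     slow_every: int) -> Tuple[int, List[List[float]]]:
    image = [0.2, 0.4, 0.6]   # fake camera frame
    joints = [1.0, -0.5]      # fake joint angles
    plan = Plan(subtask="idle")
    slow_calls = 0
    torque_log = []
    for tick in range(ticks):
        if tick % slow_every == 0:          # slow loop: re-plan occasionally
            plan = slow_scene_model(image, instruction)
            slow_calls += 1
        torques = fast_policy(plan, joints)  # fast loop: act on every tick
        joints = [a + t for a, t in zip(joints, torques)]  # toy dynamics
        torque_log.append(torques)
    return slow_calls, torque_log


slow_calls, log = run_control_loop("put away groceries", ticks=10, slow_every=5)
print(f"slow model ran {slow_calls} times, fast policy ran {len(log)} times")
```

In a real robot the two loops would typically run asynchronously on separate hardware budgets rather than in one Python loop; the single-threaded version here just keeps the rate mismatch easy to see.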

As with LLMs, Nvidia dominates compute. Their Jetson AGX Orin already runs at 275 TOPS (tera-operations per second) inside a chip that fits in your palm. The next iteration of Jetson, Thor, triples this.

About two months ago, NVIDIA released GR00T N1, the first open humanoid-scale VLA, bundled with Isaac Sim pipelines that crank out billions of synthetic frames for fine-tuning. This built on the progress of open-source toolkits such as OpenVLA-OFT, which shave 25-50× off inference latency and add bimanual control, allowing sub-second hand-eye loops to run on a single Orin NX.

With the release of GR00T, Nvidia announced their partnership with 1X and their pilot study where they trained a humanoid robot to help with the dishes in a single week.

The technology is there and improving every day. The net result of all of the above? A VLA can learn from a YouTube video today, fine-tune overnight in Isaac, and be ready to clock into a factory in a week.

What does this mean in our “AI Phase 2” framework? The moat has moved from hard-coded motion trees to proprietary motion data. Whoever owns the fleet logs owns the flywheel, and the race is on to gather it.

If you’re still with me by now, it seems like you’re interested in the companies that will benefit from this theme. So let’s unravel the value chain behind humanoid robots.