Thematic Primer: Artificial Intelligence, Phase 2

From Infrastructure to Implementation

AI: The Next Phase

Our last update to the AI theme generated some controversy. Some felt DeepSeek would be a “nothingburger” and should not serve as a signpost to position ourselves short in the AI semiconductor complex. We know how that turned out.

On the other side, the feedback was a bit more interesting. “Yes,” people said, “this is likely a turning point for a market that’s gotten ahead of itself about what growth inside the data center will look like. But it’s not going to accelerate the timeline for agentic AI or the deployment of better systems.” We’re going to address that in this article.

The AI narrative has been an absolute whirlwind since we initiated on the theme in May 2023. For the past four months or so, we have been more focused on timing when to turn tactically bearish on semis than on preparing for the true implications of the second phase of AI. Now that we’ve managed that pretty well, we feel it’s time to add nuance and formulate a framework for who can build defensible moats not just in AI, but because of it.

While we were content in the early innings of the AI trade to focus solely on the physical infrastructure necessary for AI (the “picks and shovels”), we have increasingly felt that we should expand on our original triphasic framework.

So far, the first phase has been scaling-centric. After ChatGPT’s debut, a moment that embodied the “tipping point” of investor awareness of generative AI, capital flowed primarily toward the foundation models and the infrastructure necessary to scale them. We called this race to LLM commoditization, and the hardware revolution underlying it, “Phase 1.”

The thesis was simple: because these models would scale in a linear fashion with compute power, the pace and magnitude of capex would end up beating all then-current Street estimates. We focused on the picks and shovels (Nvidia, SuperMicro, Dell, Broadcom, SK Hynix, etc.), the bottleneck wideners (Credo, Monolithic Power, Lumen, Coherent, Ciena, Constellation Energy, etc.) and the enablers (Meta, Amazon, Microsoft, Alphabet, Oracle).

Our Phase 1 AI basket more than doubled over the following 18 months.

On December 8th, 2024, at $140, we exited Nvidia entirely for the first time since we laid out the bullish thesis on AI infrastructure. On January 24th, 2025, for the first time since 2022, we went short SMH (the semiconductor ETF) and NVDA, a position we sized up ahead of NVDA earnings and elaborated on in our “DeepSeeking Answers” piece.

Because of this short and our allocation shifts since January 24th, our dynamic AI basket outperformed our original AI Infrastructure basket and avoided a 20%+ drawdown in the first quarter.

Our reasoning for pivoting from here is even simpler: all shortages lead to gluts, and the AI revolution is now happening in a world of cheap inference and zero marginal compute constraints. If Phase 1 was about who could get a GPU, Phase 2 is about who knows what to do with one.

As we stated in January, our near-term bearishness on NVDA did not mean we were bearish on AI. In fact, we’re more bullish than ever on the advancement and adoption of the technology. Rather, we felt that the AI infrastructure trade had gotten so crowded that even mildly negative catalysts posed a near-term risk.

We have covered our NVDA/SMH short, but more importantly, we believe it is time for the search for alpha to expand beyond the physical infrastructure necessary for AI.

We’re unconcerned with questions like “When will we reach AGI?” and much more concerned with “When will companies begin to see a durable benefit from using AI?” As the AI conversation inevitably shifts from the “what” of capabilities to the “how” of reliable implementation, the companies implementing this infrastructure will go from being perceived as tangential to the AI revolution to becoming its primary beneficiaries.

LLM scaling is great, but as models become commoditized, it’s important to realize that a model doesn’t matter if no one sees it. The AI layer that wins is the one closest to the user, not the one buried deepest in a cluster. We’ve seen this movie before: Apple charges $100 for $20 of NAND because it owns the interface. In Phase 2 AI, whoever puts the model to work captures the margins.

We have long held the view that AI would progress according to the hype cycle and that the market would do what markets do: cycle between discounting reality and discounting promises, while the technology itself kept progressing the entire time. Paying attention to that progression, regardless of how the market perceives it, is key to knowing where the next set of opportunities lies.

While we’re aware that recent volatility has made investors far more concerned with Trump’s next headline than with how AI progresses, we hope our track record on the AI trade so far lends credibility to a more optimistic message: AI is still the defining technology of the 21st century.

Before we go any further, a quick refresher: our original framework for artificial intelligence laid out three phases (not necessarily sequential in time).

The first was the easiest: to do AI as it existed, you needed more GPUs in more data centers. In the first half of 2023, that was a no-brainer. It didn’t require much more thought; current estimates of AI data center capex were too low and needed to go up. Our view, simplified into a chart, could have been summarized as:

Now that the foundational infrastructure build-out is well underway, the center of gravity is shifting toward AI applications and monetization.

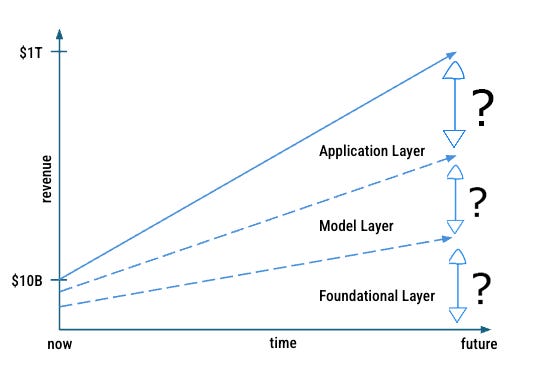

The generative AI opportunity beyond the physical infrastructure layer will accrue to the foundational layer, the model layer, or the application layer. If this grows into a trillion-dollar opportunity, as bull cases would have it, the essential question for investors is: “From here, where is the bulk of those dollars going?”

Compute will continue to scale, but as an investment thesis, it is no longer “easy.” Foundation model providers will face increasing commoditization pressure and open-source competition, which is great for the real value AI creates.

Nikesh Arora, CEO of Palo Alto Networks, put this well:

“For the tasks where we’re getting efficiencies and driving lower costs, I don’t think the model’s IQ needs to be much higher than it already is…and I don’t want to pay a dollar for that. I’d rather pay five cents”

How big is the spread between the foundational layer and the model layer, or between the model layer and the application layer, in terms of dollars spent on AI? It’s difficult to predict right now, but it seems likely that over the next one to two years, the narrative (and revenue growth) will be dominated by companies that turn AI capabilities into tangible products and productivity gains.

The second wave of AI investment, the transition from “picks and shovels” to actually mining the gold, is underway. With it will come a more sober focus on monetization and agentic use cases. Here is our thesis, put as simply as possible:

Thesis: The winners of Phase 2 AI won’t be those building agents, but those with the preconditions to make them useful.

Framework: Data + Distribution → Design → Deployment

Precisely because this phase of AI is more difficult than the first, the next six months of this trade will be a complete knife fight. So this probably won’t look much like any AI basket you’ve seen so far.

Here’s how we’re navigating it: