Thematic Memo: Inference on Device

Positioning for Edge AI in Phase 2

I wanted to expand a bit on the brief discussion we presented regarding Inference at the Edge in our piece on Interconnects as it relates to mobile devices, as well as check in on our original thesis from June 2nd about going long AAPL for AI and selling Google.

Apple is up more than 19% in the 5 weeks since we wrote about that swap, while Google is only up ~7.5%. But the stock’s reaction to Apple’s WWDC presentation, specifically to the Edge AI aspect, has had me thinking ever since about what Phase 2 looks like at the edge and on mobile devices.

We touched a bit on this in our Interconnects piece as well.

Here’s what we spoke about last week:

Interestingly, large language model inference - the process of using a trained model to make predictions or decisions based on new data - can sometimes be considered general compute, as it is based on ad hoc, single instance-based logic operations. Currently, inference often occurs in the same data centers as training, which can be inefficient.

This brings us to an important distinction in how data is processed and transferred in different computing scenarios, which directly impacts the types of interconnects required.

Hyperscale GPU clusters utilize a parallel computing model called SIMD (Single Instruction-Multiple Data) operations, akin to a WW2 B-17 assembly line.

In this model, the same instruction is executed simultaneously on multiple data points.

Inference, on the other hand, typically involves processing smaller amounts of data, often in real-time. In this sense, inference can be contextualized like the work of a Swiss watchmaker: the emphasis is on speed and meticulous precision rather than scale. Each inference request is unique and requires careful handling to ensure accurate and timely results.

Given these differences, it's becoming increasingly clear that the one-size-fits-all approach of using the same data centers and hardware for both training and inference may not be optimal. Instead, there's a growing trend towards specialized hardware and edge computing for inference.

Indeed, we may eventually see new specialized hardware (ASICs) for training. But ASICs for inference are already a reality (see: Groq, EdgeCortix), just not a very commercially viable one right now. They need to be better than NVDA solutions specifically for inference while being more economical (Groq, for example, passes the first test while miserably failing the second).

I’ve found myself talking more and more about the consumer side of AI, rather than the business side or the data center side (which I still find myself speaking about a lot).

I had a long conversation this weekend with two friends in which I evangelized the needs for inference at the edge.

They’re both professionals: he is an engineer and she is a data scientist. They were talking about how nobody will need an honest-to-god AI device. They thought that was silly.

I asked for my buddy’s iPhone to demonstrate something. I texted a friend “we’re coming over” and we headed out.

When we got into the car and their phone connected to CarPlay, it immediately gave a notification for directions to that friend’s house.

I pointed it out and said, “Your iPhone knows where you’re going. It knows what you’re doing. It knows who your mom is. Do you want that kind of convenience to be aided by AI? Do you understand how powerful it’ll be to have an AI assistant that understands your whole life - what you did last week and what you’re planning on doing over the next month?”

“Yes,” he said, hesitant, sensing that I was definitely leading him into agreeing with something he’d been disagreeing with all night.

“And do you want that deeply, deeply personal information to ever end up on a server in Cupertino instead of right here on the local storage of your device?”

“No…of course not…”

“Okay, so Apple is going to train models in their big data centers to specify themselves to your life. But the data remains on your phone. Now do you agree with why we have to do inference for the next generation of AI assistants at the edge, on your device?”

I converted him.

So I’ve been brainstorming a bit about where the risk/reward is now that Apple is pricing in significantly bullish outcomes.

This afternoon, I’m more bullish on this use case than anything else. I don’t want to bring a timeframe up, so let’s say it’ll either be the iPhone 16 or 17 (although this will be a distinct reason for changing the version convention. Maybe we switch to iPhone alpha…the numbers are getting old). That phone will be doing inference at the edge and integrating with Apple’s specialist and large language models. It will be absolutely necessary. And Apple’s head start with their walled garden ecosystem will be HUGE.

This will drive the first truly massive replacement cycle for the iPhone in the last 7-8 years (at least).

Yes, I’ve been saying this since the end of May. And yes we bought a chunk of Apple in the AI basket that’s up nearly 30%. The reason I’m thinking about this is twofold:

I think this defines a huge portion of what I have termed Phase 2 AI Beneficiaries. We are running back the picks and shovels playbook but now the mine (or the gold? I don’t know it’s way too early and too Monday for analogies) is the edge.

People keep messaging me telling me they missed the run in Apple because they were too lazy to read the article when it first came out and now they want to do that thing where they buy the other names. To be clear - the biggest beneficiary of this will be Apple. But we can still explore.

Apple has always been serious about privacy, and Inference at the Edge for AI/ML will mean that strategy will see the fruits of its labor multiply significantly.

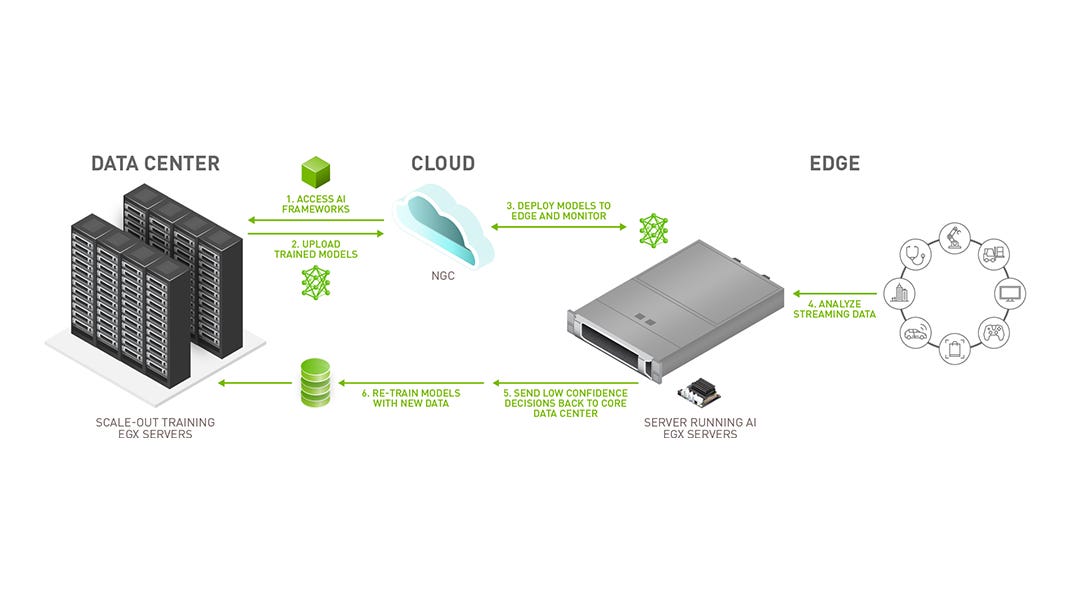

Here’s an example of how something like Federated Learning works:
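To make the idea concrete, here is a minimal sketch of federated averaging, assuming a toy linear model and three simulated devices. This is not Apple's implementation; the function names (`local_update`, `federated_average`, `make_device_data`), the data, and the hyperparameters are all hypothetical. What it illustrates is that each device trains on its own private data and only the model weights travel back to the server.

```python
import random

def local_update(weights, data, lr=0.05, epochs=5):
    """Run a few epochs of gradient descent on one device's private data.

    Model: y = w * x + b with squared-error loss. Only the updated
    weights are returned; the raw (x, y) pairs stay on the device.
    """
    w, b = weights
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in data:
            err = (w * x + b) - y
            grad_w += 2 * err * x
            grad_b += 2 * err
        w -= lr * grad_w / len(data)
        b -= lr * grad_b / len(data)
    return (w, b)

def federated_average(updates):
    """Server step: average client weights, weighted by sample count."""
    total = sum(n for _, n in updates)
    w = sum(wts[0] * n for wts, n in updates) / total
    b = sum(wts[1] * n for wts, n in updates) / total
    return (w, b)

def make_device_data(rng, n):
    # Hypothetical private data following y = 3x + 1 plus small noise.
    xs = [rng.uniform(-1, 1) for _ in range(n)]
    return [(x, 3 * x + 1 + rng.gauss(0, 0.05)) for x in xs]

rng = random.Random(0)
devices = [make_device_data(rng, n) for n in (20, 50, 30)]

global_weights = (0.0, 0.0)
for _ in range(200):
    # Each device starts from the shared model and trains locally.
    updates = [(local_update(global_weights, d), len(d)) for d in devices]
    # The server only ever sees weights and sample counts.
    global_weights = federated_average(updates)

print(global_weights)  # should land close to the true (3, 1)
```

The server never touches the `(x, y)` pairs, which is the privacy property the thesis leans on. Production systems layer things like secure aggregation on top of this, which the sketch leaves out.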

Edge AI still needs those models which are trained by NVDA GPUs, of course…

There are many ways that this technology can advance to ensure that we can have AI agents that know everything about us without it also meaning we have FBI agents that know everything about us (I personally feel we have probably already lost that war and it might be worth considering that we should just maximize our own gain for having completely lost our privacy BUT that’s not the consumer’s default position…I digress…). And Apple is at the forefront, which has been pretty rapidly priced in (or at least begun to be).

I love it when the market recognizes a view I’m expressing is more correct than what’s priced in, but I also recognize that when the market evolves to take my previously-different view as a base case the risk reward becomes less asymmetric.

So I’m looking at the other areas that benefit from an AI driven replacement cycle as well as Edge AI proliferation.

We have gone into what edge AI looks like in many of our previous articles, beginning in December 2023. We also just explained why inference is a lot different from training (with different computing requirements). Now let’s explain what the picks and shovels of the “Intelliphone” are.

…

Never mind, I’m never using that word again. I’m going to stick with “AI edge devices” so I don’t feel like a total loser.

…

I’m looking at other areas that will benefit from the iPhone as an AI edge device. It doesn’t necessarily have to be providing the AI (inference), it just has to benefit from that replacement cycle.

Why do I think this will be such a massive replacement cycle?

When I look at the key announcements related to AI and edge computing from WWDC 2024, my instinct is that the next device will be purpose built for Edge AI.

And I think that will end up reducing some backwards compatibility that has made the replacement cycles less impressive recently. To review, here are the AI features Apple discussed at WWDC (generated by Perplexity) - which I believe will rapidly accelerate in the next models:

Apple Intelligence: Apple unveiled its new personal intelligence system called Apple Intelligence, which integrates powerful generative AI models into iPhone, iPad, and Mac devices. This system combines on-device processing with cloud-based capabilities to deliver personalized and context-aware intelligence.

On-device AI processing: A cornerstone of Apple Intelligence is on-device processing, which allows many AI models to run entirely on the device, ensuring privacy and security.

Private Cloud Compute: For more complex AI tasks requiring additional processing power, Apple introduced Private Cloud Compute. This technology extends the privacy and security of Apple devices into the cloud, allowing for more advanced AI capabilities while maintaining user privacy.

AI-powered features: Apple announced several AI-enhanced features across its operating systems, including:

-Image generation and text summarization in native applications

-Enhanced Siri with better app control and understanding of user input

-AI-powered photo searches, object removal, and transcriptions in the Photos app

-Email summarization and response generation

-Custom emoji creation (Genmoji) and AI image generation (Image Playground)

ChatGPT integration: Apple is integrating ChatGPT access into iOS 18, iPadOS 18, and macOS Sequoia, allowing users to leverage its capabilities within the Apple ecosystem.

Privacy focus: Apple emphasized its commitment to privacy in AI, with features like IP address obscuring and no request storage when accessing ChatGPT.

Apple Intelligence will be available on iPhone 15 Pro, iPhone 15 Pro Max, and iPad and Mac devices with M1 chips or newer, highlighting the importance of powerful edge computing capabilities for these AI features. I think by the next generation, the capabilities will be night and day. iPhone 18 probably is where we get the real deal.

The most striking difference between someone of average intelligence and someone of remarkable intelligence isn't their ability to complete tasks… it's their ability to complete tasks quickly and adjust behaviors dynamically. AI models of the future won't simply be about inference, or forcing variable inputs through the meat grinder that is a transformer or CNN model, but they will also be training on the fly, fine-tuning their local versions of more complex, yet smaller and more specialized sub-models, creating a model of models.

This cascading waterfall of ever more efficient but narrow-minded layers alleviates two of the biggest concerns in model deployment, memory and storage requirements, but brings out the bogeyman that is latency. Latency is generally defined as the time needed to complete a call and response, a rather linear measure that is clearly measurable. Perhaps, though, as our models become more myopic and our needs become more specific, we need to think about latency exponentially and combat this order-of-magnitude increase in dimensionality by taking collocation to the absolute extreme.

The reality, right now, is that models of this type exist and, in fact, are preferred by researchers for complex tasks. Their complexity is not even limited by our understanding of machine learning or data science, but by our still finite and scarce compute resources. Remember, this isn't about simply making models bigger, so simply adding more RAM isn't going to do the trick. Instead, miniaturized supercomputers will become the norm, where specialization (serialization) and parallelization work in concert to turn that LLM meat grinder into a surgeon's scalpel that extracts only the information needed using the bare minimum tools required. To manage this, though, high performance logic silicon will need to be placed physically alongside low-latency memory, lightning fast storage, and a hint of acceleration.
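As a loose illustration of the "model of models" idea and why latency stacks up, here is a small sketch of a cascade that tries narrow specialists first and falls back to a broad model. Everything in it is hypothetical: the `Stage` class, the stand-in handler functions (plain string checks, not real models), and the latency figures are assumptions made only to show the shape of the routing.

```python
from dataclasses import dataclass
from typing import Callable, Optional

@dataclass
class Stage:
    name: str
    latency_ms: float                        # hypothetical per-call cost
    handle: Callable[[str], Optional[str]]   # returns None to pass the request on

def run_cascade(stages, request):
    """Try stages from narrowest to broadest and stop at the first answer.

    Returns (answer, stage_name, total_latency_ms). Latency accumulates
    over every stage that was tried, so each hop has to be cheap.
    """
    total = 0.0
    for stage in stages:
        total += stage.latency_ms
        answer = stage.handle(request)
        if answer is not None:
            return answer, stage.name, total
    return None, None, total

# Stand-ins for specialist sub-models (assumed, not real models).
def timer_specialist(req):
    return "timer set" if "timer" in req else None

def calendar_specialist(req):
    return "event added" if "calendar" in req else None

def general_model(req):
    return f"general answer to: {req}"

stages = [
    Stage("timer", 2.0, timer_specialist),
    Stage("calendar", 3.0, calendar_specialist),
    Stage("general", 150.0, general_model),
]

print(run_cascade(stages, "set a timer"))
print(run_cascade(stages, "write a poem"))
```

A request the first specialist can handle costs one cheap hop, while a request that falls all the way through pays for every stage it touched. That accumulation is the argument for putting logic, memory, and storage physically next to each other.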

This approach aligns perfectly with the goals of edge AI and on-device inference, particularly in the context of Apple's potential next-generation iPhones. Apple's vertical integration and custom silicon design put them in a unique position to implement this "model of models" approach. Their A-series chips, with specialized neural engines and tightly integrated high-bandwidth memory, could support these complex, layered AI systems.

If Apple can successfully implement this type of AI system in its walled garden before or better than competitors, it could represent a significant leap in smartphone capabilities. This could potentially trigger a major replacement cycle, benefiting not just Apple but also its entire supply chain. If it doesn’t and it gets beaten to the punch, or users care less about Apple’s track record in privacy and their trust in the brand (not my base case), we can reduce our risk by owning the beneficiaries of the edge AI changes regardless.

According to IDC forecasts, AI smartphone shipments are expected to surge over 200% year-over-year to 170 million units in 2024, accounting for approximately 15% of total smartphone shipments. This rapid growth is anticipated to accelerate beyond 2024 as industry players aggressively push towards more advanced chips and evolving use cases. In the Chinese market alone, AI phone shipments are projected to reach 150 million units by 2027, representing a 4-year CAGR of 97% from 2023 levels.
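It can help to back out what those forecast figures imply. The short calculation below uses only the numbers quoted above and treats "over 200%" as exactly 200% and "approximately 15%" as exactly 15%, so the results are rough implied values under those assumptions, not IDC figures.

```python
# Inputs quoted in the text (units in millions of phones).
ai_units_2024_m = 170.0
ai_share_2024 = 0.15          # "approximately 15%", treated as exact
yoy_growth_2024 = 2.00        # "over 200%", treated as exactly 200%
china_units_2027_m = 150.0
china_cagr = 0.97
china_years = 4

# Total smartphone market implied by 170M units being 15% of shipments.
implied_total_2024_m = ai_units_2024_m / ai_share_2024

# 2023 AI smartphone base implied by 200% growth. Because growth is
# "over" 200%, the true base would be at or below this number.
implied_ai_2023_m = ai_units_2024_m / (1 + yoy_growth_2024)

# 2023 China base implied by compounding 97% a year for 4 years.
implied_china_2023_m = china_units_2027_m / (1 + china_cagr) ** china_years

print(f"Implied total 2024 shipments: {implied_total_2024_m:.0f}M")
print(f"Implied 2023 AI smartphones:  {implied_ai_2023_m:.1f}M")
print(f"Implied 2023 China AI phones: {implied_china_2023_m:.1f}M")
```

Under these assumptions the China figure compounds from a base of roughly 10 million units, which is a reminder that a 97% CAGR here says as much about how small the starting point is as about the end state.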

The transition to AI smartphones necessitates significant hardware upgrades, including more powerful SoCs, increased DRAM, enhanced microphones, advanced cooling systems, and improved cameras. This shift is likely to result in larger bill of materials (BOM) costs for manufacturers.

For instance, while current high-end smartphones typically feature 8GB or 16GB of DRAM, next-generation AI smartphones are expected to require a minimum of 16GB, and my estimates would be even higher. We will need more memory on device, but we will get it. Hardware advancements, coupled with the integration of on-device AI capabilities, are expected to provide compelling reasons for consumers to upgrade their devices, potentially triggering the "first truly massive iPhone replacement cycle in a decade" that industry observers have been anticipating.
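A back-of-the-envelope calculation shows why DRAM is the pinch point. The sketch below estimates the memory needed just to hold a model's weights; the parameter counts and bit widths are illustrative assumptions, not specifications of any shipping model, and it ignores activations, caches, and everything else the phone has to keep in memory.

```python
def weights_gb(params_billions, bits_per_weight):
    """Approximate memory for model weights alone, in GB (10^9 bytes).

    params_billions * 1e9 weights, each bits_per_weight / 8 bytes,
    divided by 1e9 bytes per GB, simplifies to the expression below.
    """
    return params_billions * bits_per_weight / 8

# Hypothetical model sizes and precisions, chosen only for illustration.
for params in (3, 7, 13):
    for bits in (16, 4):
        gb = weights_gb(params, bits)
        print(f"{params}B params at {bits}-bit: {gb:.1f} GB of weights")
```

On these assumptions a 7B-parameter model at 16-bit precision needs about 14GB for weights alone, which would not fit on an 8GB phone and would leave little headroom on a 16GB one. Quantizing to 4-bit cuts that to about 3.5GB, which is why both more DRAM and smaller, more specialized models are part of the story.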

That is my thinking this afternoon.

So I’ve begun going through my Monday brain - cui bono (besides Apple)?

We’ve been throwing this back and forth while Apple rallied and I’ve had many side conversations, so it’s time to consolidate them and publish my musings. Now let’s get into the tickers.

Paid subscribers get access to our Edge AI basket and a review of our current exposures that benefit from this next phase of Artificial Intelligence in equity markets.

Naturally, we’ll start in Taiwan.

In our portfolio, we own Foxconn/Hon Hai (2317 TW). We own Quanta Computer (2382 TW). We own Wistron (3231 TW). We sold Pegatron (4938 TW) on other concerns that, in my opinion, are still valid. We own TSMC (TSM US). We own Mediatek (2454 TW).

Many of these names have doubled, tripled or even quadrupled since we first bought them.

I don’t think they’re done, but let’s try to find some more asymmetric opportunities. I believe in Taiwan the answer is simply TSMC, but there’s some other areas worth looking at for this thesis.

Packaging is certainly worth another look in ASE Technology (ASX US).

It’s a bit of a stretch but we might also consider Catcher (2474 TW) in Taiwan. Why? Well, our thesis isn’t just “beneficiaries of making Edge AI capable iPhones”. Rather, it takes as given that Apple will deliver Edge AI capable iPhones and assumes they drive consumer behavior, so our final thesis shifts our focus more to “beneficiaries of the first truly massive iPhone replacement cycle in a decade”.

WPG Holdings (3702 TW) and Simplo Technology (6121 TW) also seem solid for this; I’ll explain that in a bit.

Let’s shift over to China.

We just bought LuxShare on our Interconnects/Chinese ASICs thesis. Perhaps we should buy GoerTek (002241 CH) as well?

Sunwoda Electronic (300207 CH) and Desay Battery (000049 CH) are also interesting, though perhaps not for Apple’s devices if Apple feels it should still prioritize shifting away from its Chinese supply chain vulnerability over getting to market with this relatively quickly. Getting to market quickly matters because this will be insanely sticky, and it might be the first time in a while that Apple should really focus on getting the first mover advantage.

AAC Technologies (2018 HK) could benefit from increased need for cooling to adapt these devices to being capable of Inference.

Korea? Well, I have been framing this often along the lines of LPDDR packaging. Apple has taken such control over their silicon pipeline (they bought the company that now is their GPU division like 15 years ago) so the slack along the value chain is harder to find.

There’s some good overlap with AI Data Center here though, and we already own SK Hynix (000660 KS). I think you should own Samsung (005930 KS) for the memory aspect until the AIPhone (nope, still cringe) device comes out because nobody is actually going to believe me when I say that Apple is going to steal significant market share until it actually happens. That is in fact my thesis currently, though, so there’s a little bit of risk there.

What are the setups in Japan? They’re primarily in RF.

I would like to own Murata Manufacturing (6981 JP), and I’m going to buy that. TDK Corporation (6762 JP) & Taiyo Yuden (6976 JP) are also high up on my list.

We already own Renesas (6723 JP).

On the SoC side, Socionext (6526 JP) MAY be worth a second look. But we nearly top ticked it when we exited it in our AI basket so I think I will sideline that one for now.

In the land of the free and the home of the SMH multiple (the US):

We own Broadcom (AVGO US), Micron (MU US), Western Digital (WDC US) and Qualcomm (QCOM US). We own Intel (INTC US) and we own Teradyne (TER US).

We will go long Corning (GLW US) as a replacement cycle beneficiary.

We’ll go long Analog Devices (ADI US), primarily because they own Maxim Integrated and there’s going to need to be a cracked battery in that phone if there’s ANY training whatsoever going on at the edge (same thesis as Simplo and other Asian battery names previously mentioned).

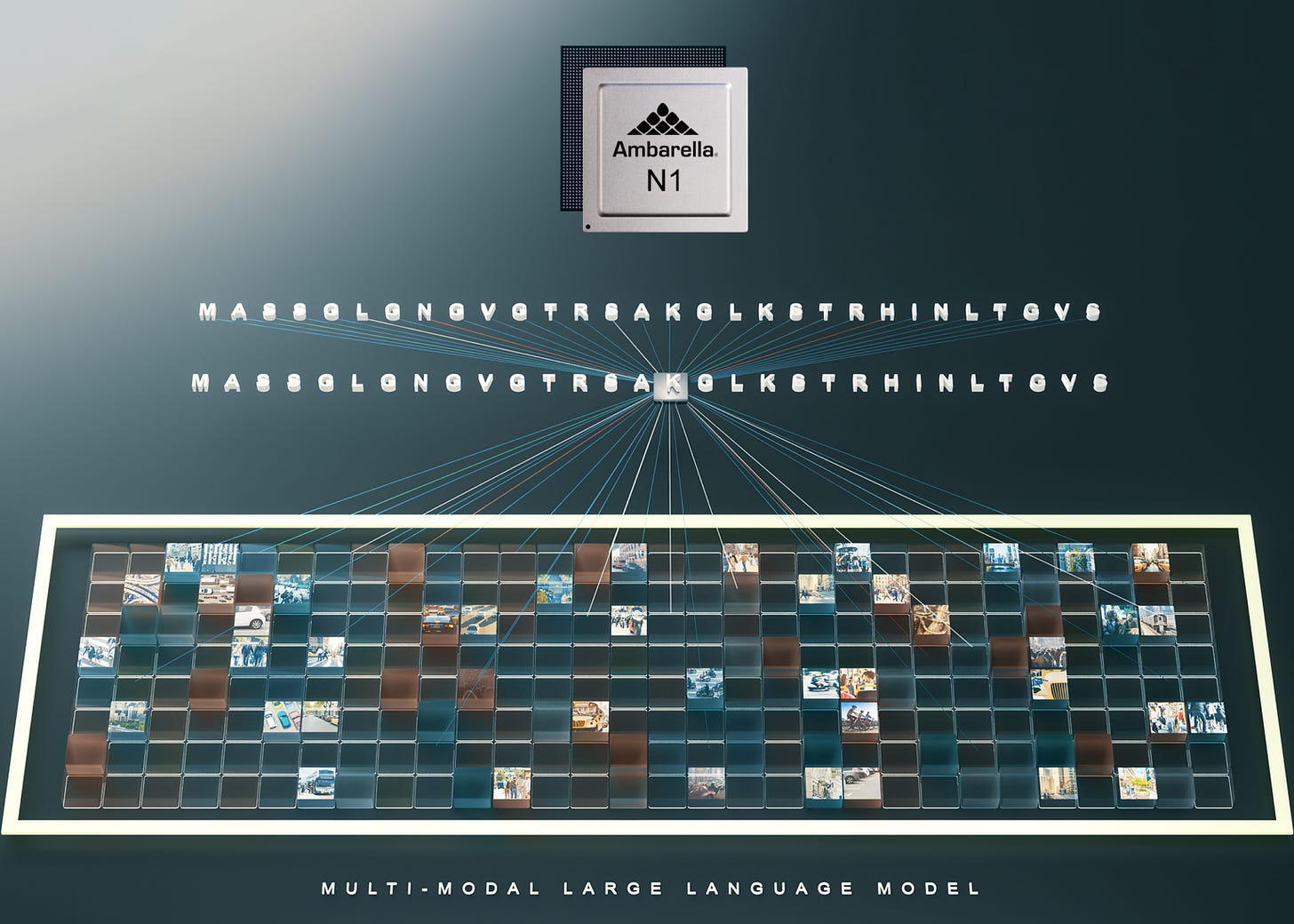

How about QRVO? Or maybe even Skyworks (SWKS)…are they still an iPhone supplier? I think you own QRVO. SWKS I’m less excited on, but if you’re doing a basket it may as well be in there.

With their two-decade-old focus on fitting long strings of serialized data through interconnects like HDMI, Ambarella (AMBA US) is doing some interesting stuff at the edge.

We already own Amkor (AMKR US), which is considered to have some of the smallest packaging technologies in the world. Every nanometer counts when trying to fit a series of components onto a chip and into a device such as an iPhone, so we see significant investment in this space as likely.

Would this replacement cycle be meaningful for companies like AT&T (T US), Verizon Wireless (VZ US) and/or T-Mobile (TMUS US)? Not by as much as the ones previously mentioned but I would not exit them here if I currently owned them. I think there will be an incremental improvement in top line growth.

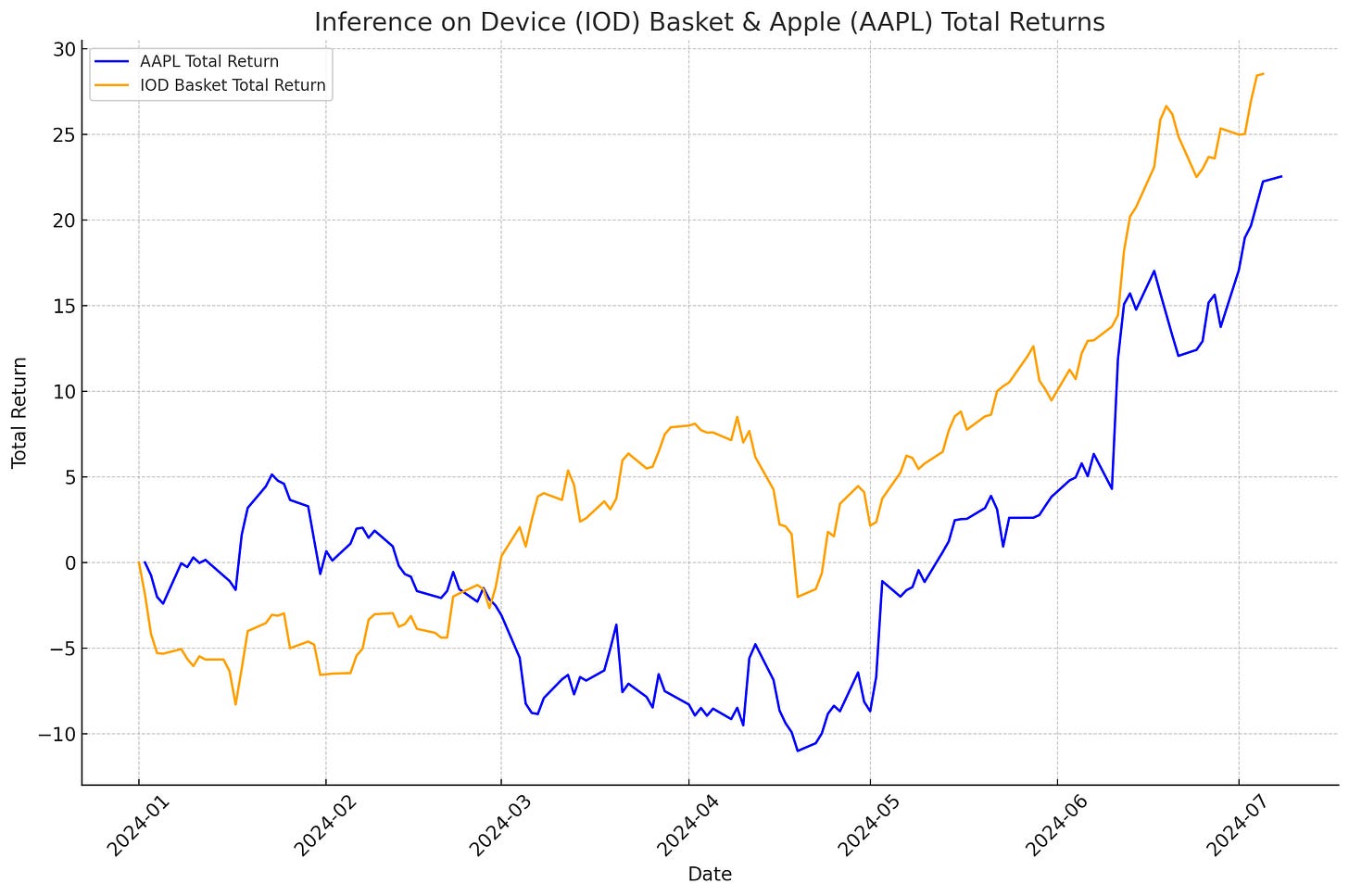

Our prospective “Inference on Device” Edge AI basket has had much the same reaction as AAPL did to the WWDC. While I’m still deciding exactly how to implement this in our core portfolio given our existing exposures to AI and the fact that we’ve already done some portfolio optimization relative to Edge AI in Phase 2, you can view what I’d consider our Edge AI/AAPL Inference on Device Basket here:

Overall, I’m just tracking my thought process - I will likely begin to add these names to the AI basket and may review again to see if we’re exposed to any areas of the theme that are beginning to slowly lose their asymmetry as we progress.

I think if anyone is going to get it right, it’s Apple, and I believe the first wave is small models (specialist models) doing all sorts of small quality of life things, which gets people comfortable with large models doing more autonomous things.

I would guess that the biggest risk to this thesis is that early iPhone 16 shipments disappoint on what will likely be lofty expectations by the time we arrive at that milestone. However, if that happened I would expect later 16 or early 17 numbers would smash it out of the park…basically people will need to be convinced of the utility. I want to hear more people making comparisons to being left behind and…well…Gattaca.

Siri et al really set a bad precedent: something like > 95% of all Alexa commands are setting kitchen timers, which is also basically all I use Siri for (and weather).

Once we see these improvements though, people who have never ventured to use ChatGPT will quickly realize the power of AI and drive the next wave of adoption in B2C vs. B2B where applications are currently concentrated. Another potential risk is that these AI models are unlikely to be on phones sold in China, which makes up about 20% of AAPL’s current market.

Still, I believe that by diversifying into the “picks and shovels” overall of Edge AI and Inference on Device, we reduce those AAPL centric risks.

If you’re asking yourself “what’s a replacement cycle?”, I will explain it but also feel you should probably improve your ability to use context clues for reading comprehension. A real replacement cycle is when the new iPhone looks a lot less like the delta in technology between iPhone 14 and 15 and looks more like “Apple released a phone that has an AI assistant and you can’t effectively use the last model for it”, which ultimately results in upgrading having a lot more marginal utility to the customer. Not to mention the massive peer pressure.

What about $ENVX as a play on needing battery life for AI on smartphones?

If you use "aiPhone" it's probably like 57% less cringe