Stargate: A Citrini Field Trip

Free Full Article for Citrini Subscribers

Back in September, we released to CitriniResearch paid subscribers our deep dive into the largest and most comprehensive of the Stargate Data Center projects - Abilene. We’ve removed the paywall and made the article available to all subscribers, including our baskets (which we’ve hosted on Plutus for ease of access, for those who aren’t currently subscribed to the Citrindex portal).

If you’d like to get our articles in full as soon as they’re published, consider upgrading to a paid subscription:

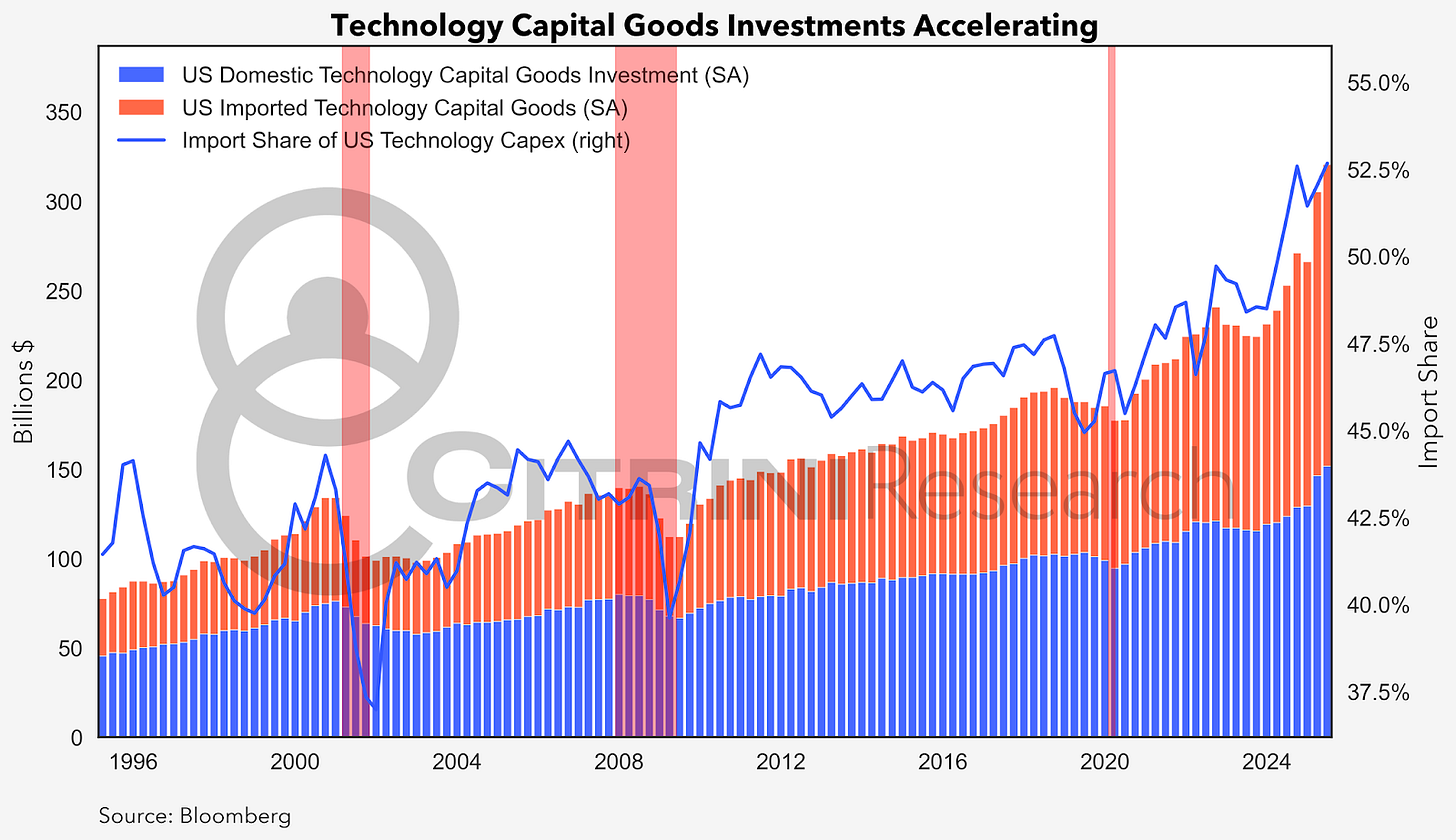

Sometimes, seeing is believing. CitriniResearch has been an early and vocal believer in the scale of the data center buildout necessary to implement and realize the full potential of Artificial Intelligence. In our inaugural AI primer, we dubbed Phase 1 of the AI Trade the “Global Data Center Hyper-Scaling” - a tongue-in-cheek reference to both the hyperscalers involved and the coming scale of the buildout. More than two years ago, we wrote:

“The first phase [...] is centered around the development and build-out of robust computational infrastructure. This involves a massive and sustained tailwind to the suppliers of data center equipment of accelerated computing required for both AI training and inference”

“The enormous computing power required for AI/ML places considerable demands on a variety of technology sectors. The companies involved in providing the necessary infrastructure stand to benefit significantly from this heightened demand. This includes businesses involved in semiconductor manufacturing, networking equipment, data center construction and management, and more.”

Since then, we’ve spent our time in front of our screens, watching green numbers climb higher across the supply chain involved in this massive effort. But watching from behind a screen carries an inherent risk of failing to appreciate what those numbers represent; sometimes you just have to bear witness.

Screens make it easy to forget the physical world behind the numbers. Chips don’t float. Fiber doesn’t spool itself. Power doesn’t magically appear at the edge of the rack. At some point, you have to step away from the chart and go stand where the megawatts meet the mud. So, we went to Abilene, Texas. Or, at least, we sent a drone to Abilene, Texas with the help of investigative journalism firm Hunterbrook Media (who we partnered with on our Teradyne (TER) thesis).

Maybe I’m slow on the uptake but, for me, seeing the progress there really drove home the scale of what’s going on right now.

Roughly a year ago, there was dirt.

Now, it looks like this:

On the ground level, nine months ago, it looked like this:

Today it looks like this, and this is just one section:

These images may seem mundane, but one has to consider that they represent just a tiny fraction of the datacenter development breaking ground across the country. This single facility represents 600 football fields filled with cables, fiber, pipes, generator sets, transformers, chillers, switchgear, cable trays, fuel tanks, batteries, copper busways, electrical components (2N redundant), pumps, air handlers, fire suppression systems, all the fancy actual computing and cooling gear inside the data halls and 200,000 tons of other equipment that collectively consume enough electricity to power the city of Seattle and must reject a level of waste heat 24/7 that creates thermal plumes visible on weather radar.

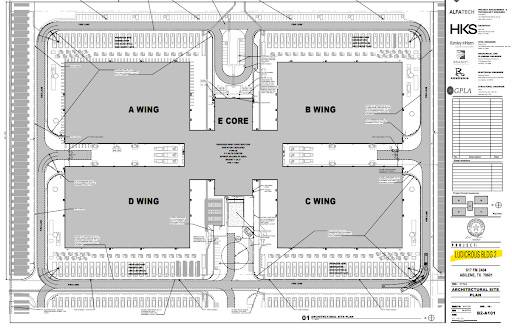

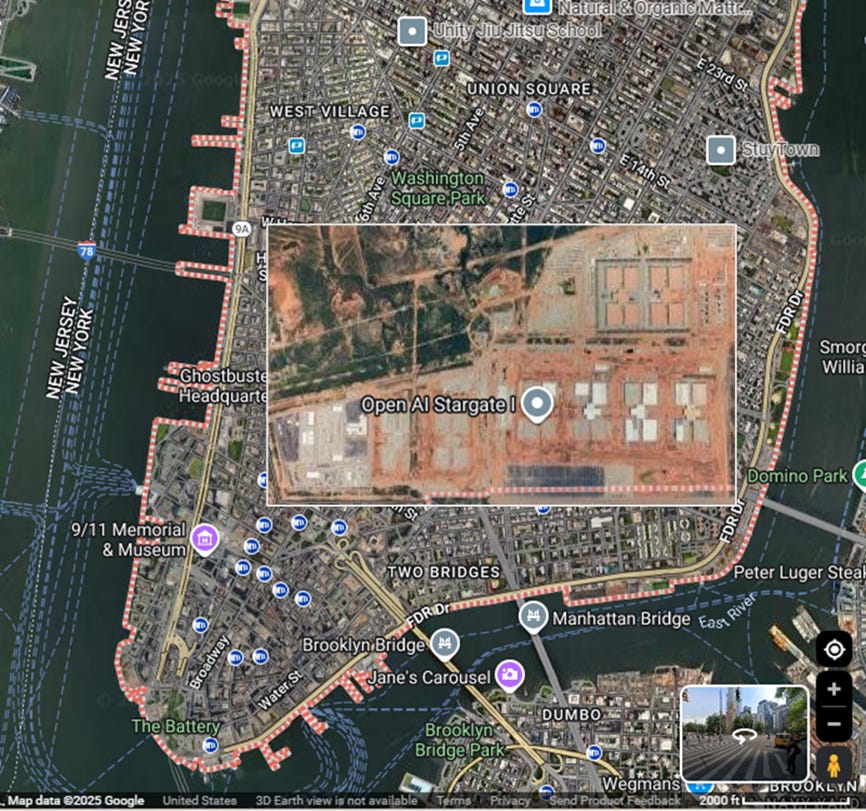

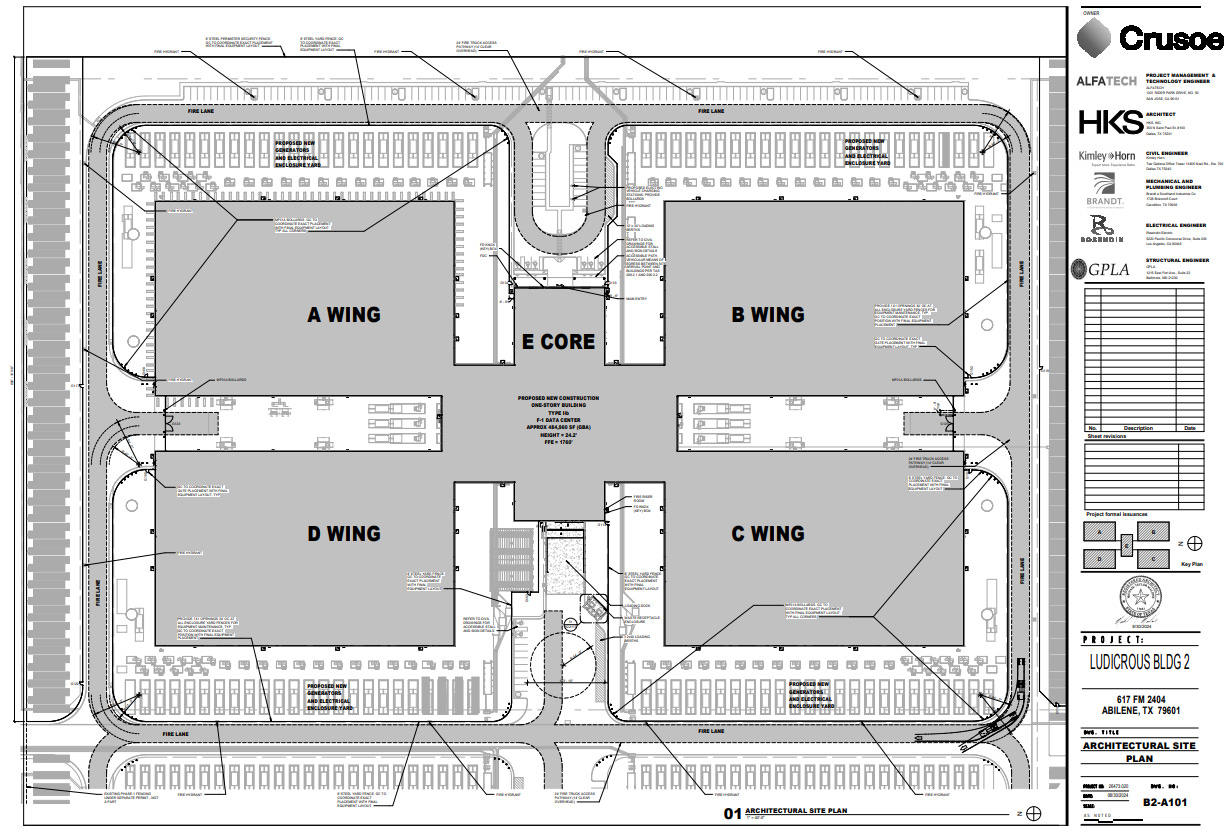

Each is literally a “Ludicrous Building.” And there are eight under construction on this single site. And this is just one of the Stargate data centers; there are many more. xAI’s Colossus 2, Meta’s Louisiana Hyperion, Microsoft’s Fairwater, Quantum Loophole, Nebius’ New Jersey DC, Amazon’s Pennsylvania Project, Amazon/Anthropic’s Project Rainier - just to name a few.

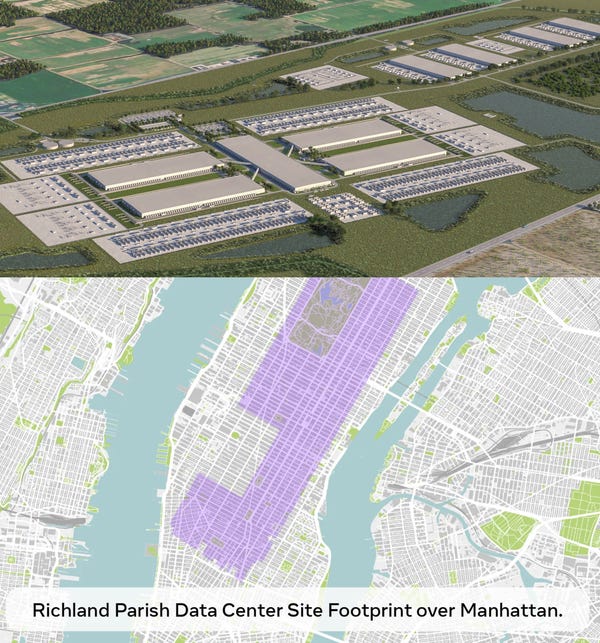

Together, the current footprint of this complex is roughly the size of lower Manhattan:

Which pales in comparison to Meta’s Louisiana facility, which would stretch from the top of Central Park down to SoHo:

Now our tagline for this article starts seeming a lot less like hyperbole and a lot more like an understatement. “What if Manhattan, but computers?”

Don’t take our word for it. Really, don’t. Just in case you didn’t catch it the first time, I implore you to watch some of the footage:

You can see the currently completed data halls (one is currently being used for early training/inference runs by OpenAI), as well as the new construction that will massively expand the current capacity. The scale and speed of the buildout are truly immense, and – for me, at least – awe-inspiring. Don’t let anyone tell you the U.S. doesn’t build things anymore.

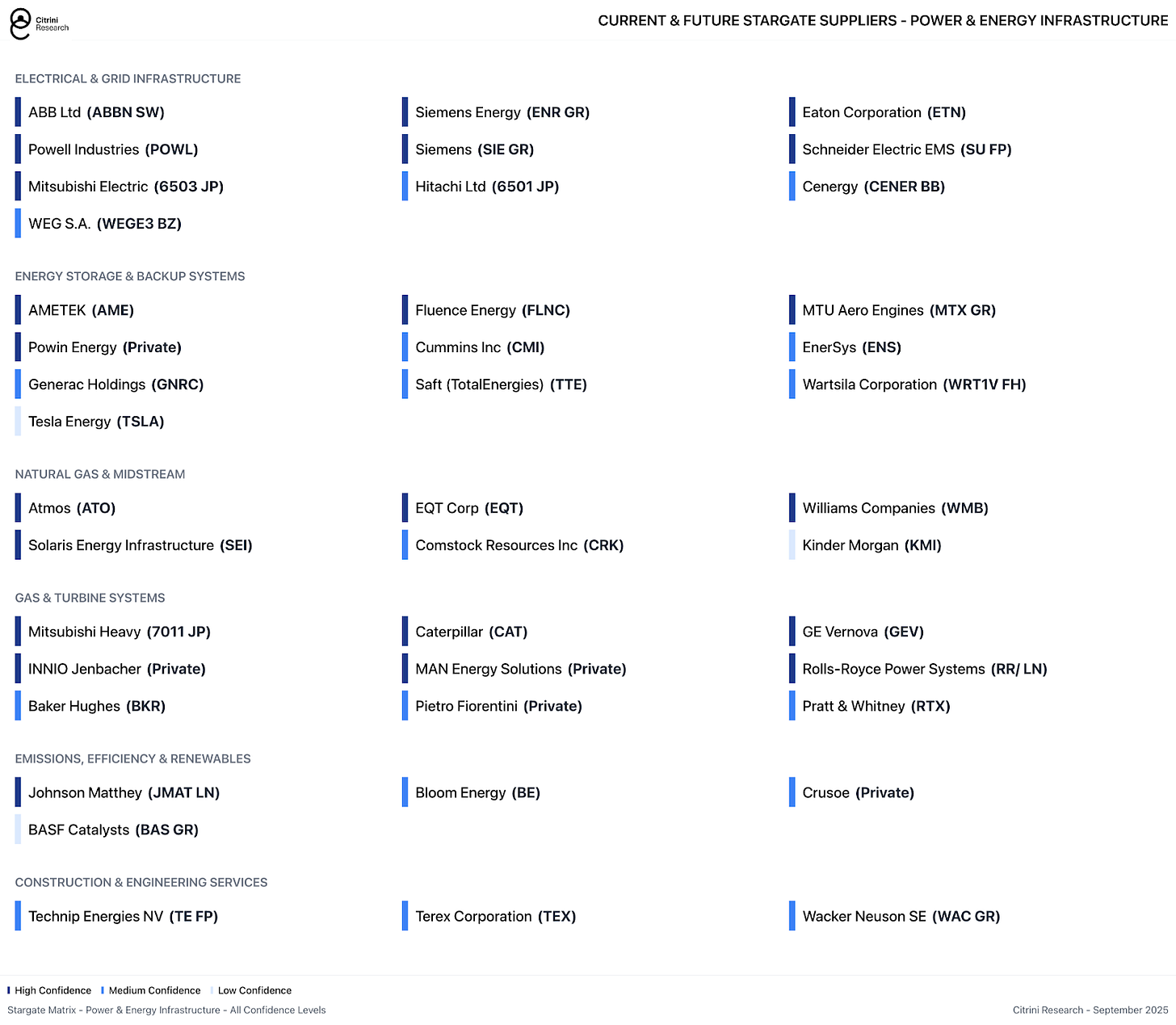

Although we’ve owned or covered many of the companies involved in this (Vertiv (VRT), Eaton (ETN), Schneider (SU FP), ABB (ABBN SW), Siemens Energy (SIE GR), GE Vernova (GEV), etc.), we’ve decided to take the weekend to drive home what the “data center buildout” really means from the outside, rather than the inside, of the data hall. We’ve spilled a lot of ink zoomed into the data center, focused on racks, high-bandwidth memory, pluggable optics, active electrical cables, switches, GPUs, ethernet fabric. Now, we’re zooming out in a very literal sense.

This is the clearest demonstration yet of how quickly the AI infrastructure machine is spinning up, and how many industrial hands are turning the gears. The execution tells us more: a who’s who of global vendors rushing to bolt down turbines, transformers, chillers, tanks, and cables in the Texas plains – and they’re powering it all with natural gas, one of the only reliable and dispatchable fuels we have today.

These projects are so large that even multi-national corporations cannot fulfill the entirety of the needs, with multiple contractors and OEMs all working towards a singular goal: the largest industrial investment cycle for the United States since World War Two. This requires the builders to call on anywhere and everywhere to realize their ambitions.

In short, “tech” has become truly capital intensive again for the first time in a quarter century. For the broader economy, this is an enormous development. But a picture is worth a thousand words, so drone footage must be worth a thousand charts...

At the Stargate Data Center in Abilene, by mid-2026 eight buildings will be completed representing approximately 4 million square feet with a total power capacity of 1.2 gigawatts (GW). And this is just one site. Although we’ve decided just to focus on this one (somewhat arbitrarily, somewhat because the “Stargate” moniker was leading to doubts), the important thing to keep in mind is that there are going to be tens if not hundreds of similar projects built over the next five years.

Five new Stargate sites are already in the permitting or construction stages (Shackelford will have its first building complete in H2 2026) and there’s already discussion about expanding the Abilene DC by another 600MW.

We have already published on the need for:

More compute in May 2023, in our article “Artificial Intelligence: Global Equity Beneficiaries”

More power in May 2024, in our article “The Utility of Bubbles: The Intersection of Fiscal & AI”

More connectivity in July 2024, in our article “Can You Hear Me Now? The Coming Optical Shortage”

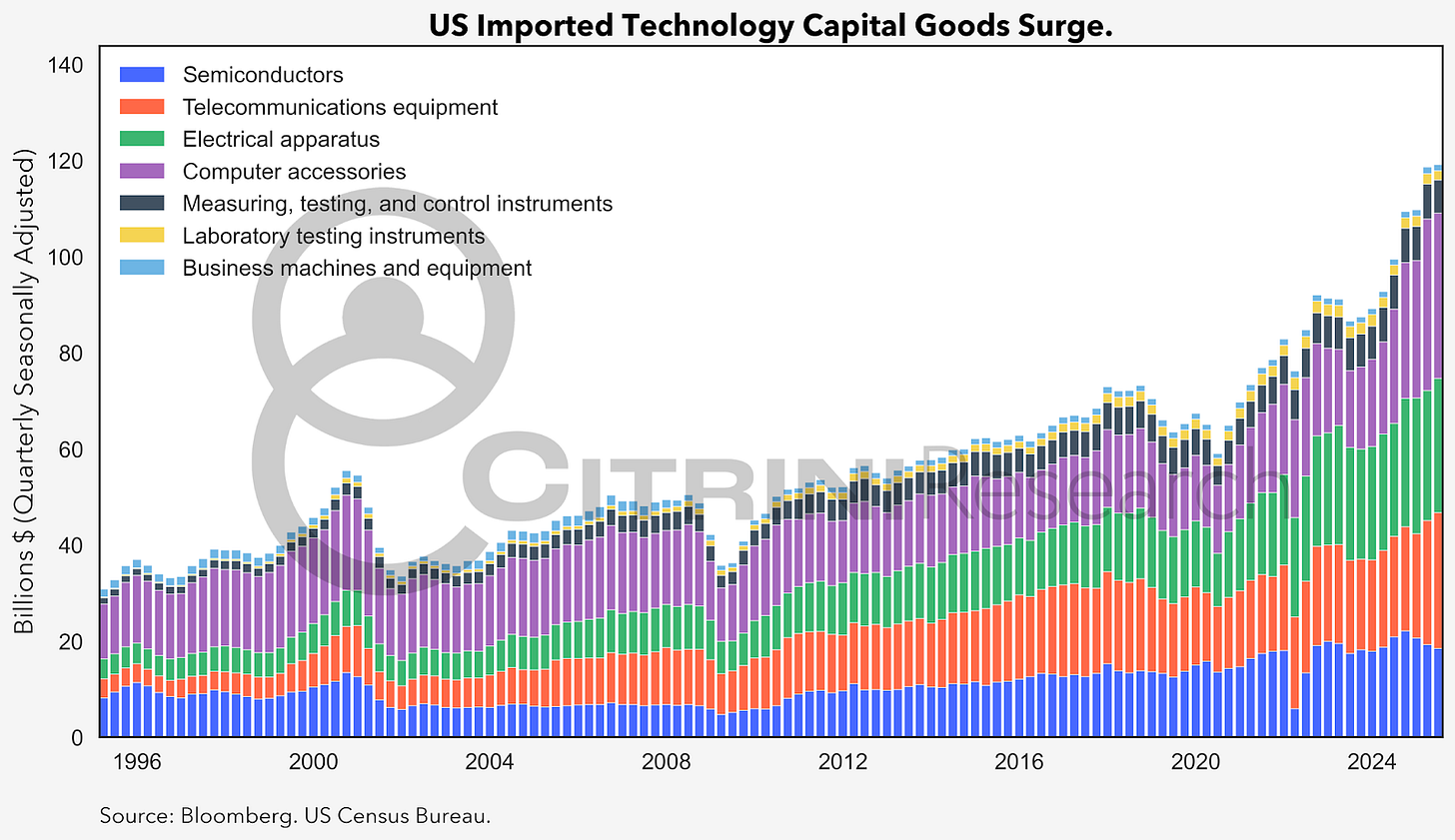

We have continually tracked what’s happening inside the data center. Which, to be clear, remains vitally important and is not slowing down:

“At completion, each data center building will be able to operate up to 100,000 GPUs on a single integrated network fabric, advancing the frontier of data center design and scale for AI training and inference workloads. The first phase includes two buildings optimized for both direct-to-chip liquid cooling and air cooling, ensuring flexibility and cutting-edge performance. Upon completion, the data center will support up to 100,000 GPUs on a single network infrastructure.”

Source: Crusoe/DPR Communications

But today, we’re doing two things. The first is appreciating that we were right back in 2023: the scale of the data center buildout is indeed “hyper”. Second, we are taking a closer look at who’s actually building the data centers and facilitating their immense needs. It’s a bit less exciting than who’s developing the hardware upon which the Machine God will run, but after viewing the scale of this project we believe it requires some additional attention. Who makes these massive facilities appear from the ground up, like an alchemical transmutation of money into power consumption and compute?

Because there’s a lot that needs to be done, and a lot of money to go around. Today, it’s Texas. Soon, it’ll be New Mexico, Ohio, Wisconsin…everywhere there’s enough energy and land to build them. Therefore, paying attention to the blueprint is a good idea.

Our takeaways from this footage can be summed up as “more power, more natural gas, more thermal management and more construction”.

Power & Energy Infrastructure

Power and Energy: The Natural Gas Backbone

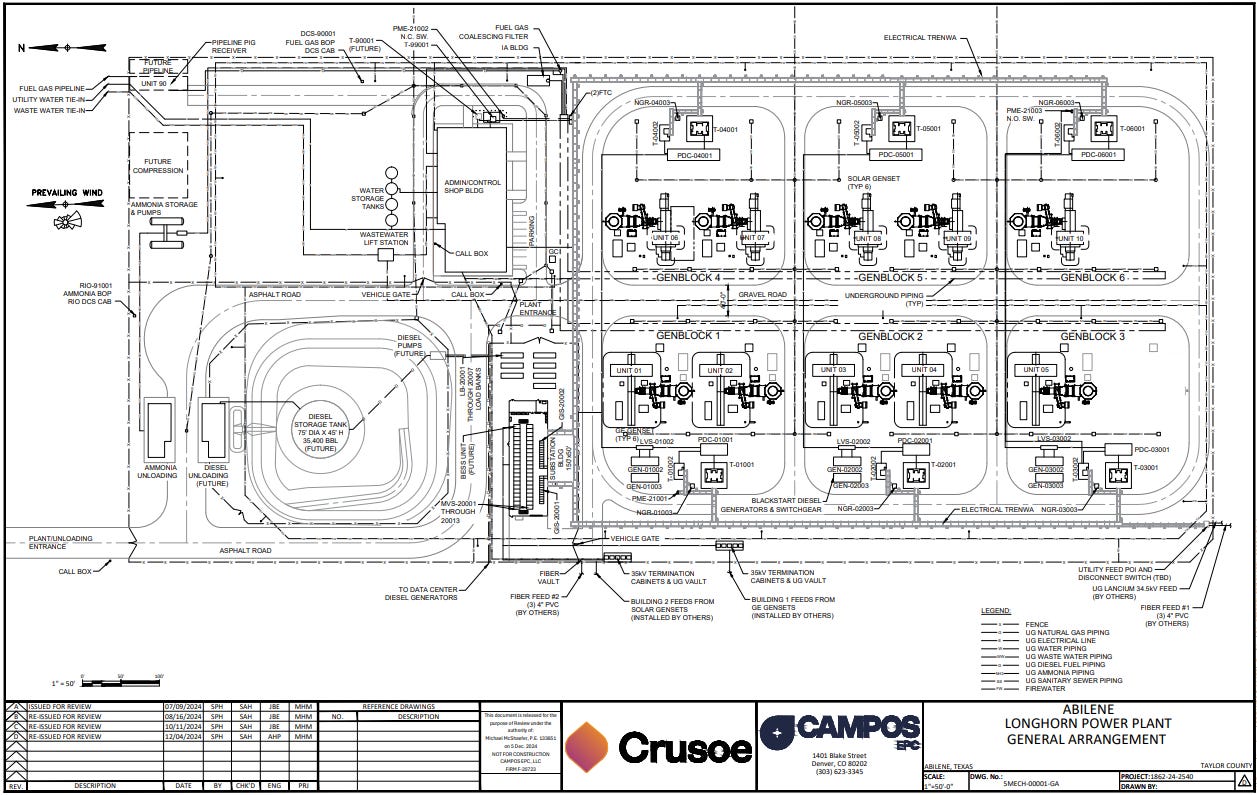

When Stargate submitted its final Federal Operating Permit back in January, it proposed a primary behind-the-meter power plant built around ten natural gas simple-cycle turbines. Combined, these five 38MW Titan 350 units and five 34MW GE LM2500 units are licensed to run 24/7 for a total continuous power output of 361 MW. Below we show the power plant’s 2D schematic.
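As a quick sanity check on the turbine figures above, the sketch below sums the per-unit ratings quoted in the text and compares the result with the stated 361 MW and with the 1.2 GW campus capacity cited later in this article. The dictionary layout and function name are our own, chosen for illustration. The rounded ratings sum to 360 MW, so we assume the 1 MW difference comes from rounding of the individual unit ratings.

```python
# Per-unit ratings as quoted in the text (rounded, in MW).
TURBINES = {
    "Titan 350": {"units": 5, "mw_each": 38},
    "GE LM2500": {"units": 5, "mw_each": 34},
}


def nameplate_total_mw(fleet):
    """Sum units x rating across every turbine model in the fleet."""
    return sum(t["units"] * t["mw_each"] for t in fleet.values())


total_mw = nameplate_total_mw(TURBINES)
permitted_mw = 361   # continuous output stated in the text
campus_mw = 1200     # 1.2 GW campus capacity stated later in the article

print(f"Sum of rounded unit ratings: {total_mw} MW")
print(f"Stated continuous output:    {permitted_mw} MW")
print(f"On-site share of campus capacity: {permitted_mw / campus_mw:.0%}")
```

On these numbers, the behind-the-meter plant covers roughly 30% of the campus’s eventual capacity, which is consistent with the site also relying on its grid connection.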

Stargate is grid-connected, which is ideal, as a grid connection allows data centers to pull from a diverse portfolio of generation; but grid connections are increasingly difficult to get as large-load requests skyrocket. These large loads risk destabilizing residential electricity access if not planned correctly.

The choice that hyperscalers must make is clear – do they want to make it to market quickly and reliably, or do they want to feel good about their carbon emissions? The answer is obviously to make it to market quickly and reliably; as such, data centers are leaning heavily on natural gas turbines to generate the electricity they need because it’s the only dispatchable power generation available. Reliability is far more important than anything else.

The CEO of Crusoe himself stated back in May that he does not believe any of the hyperscalers’ “net-zero by 2030” pledges will actually be met (in any way besides simply purchasing offsets).

While grid planners try to balance rapidly increasing load with generation, increasingly often, the lack of renewable generation has been the noted cause of rolling blackouts throughout Texas; so it’s no surprise that data centers are choosing to BYOB as far as power is concerned. The opportunity cost of downtime is just too great to rely on anything but natural gas.

The first and foremost insight we feel is evident from viewing this footage: we’re going to need to supply a lot more natural gas. Between the “other megaprojects” (massive LNG export capacity on the Gulf Coast) and the data center buildout, natural gas supply is beginning to look like the sole neglected area of the market involved in the effort to expand US electricity generation by >20%.

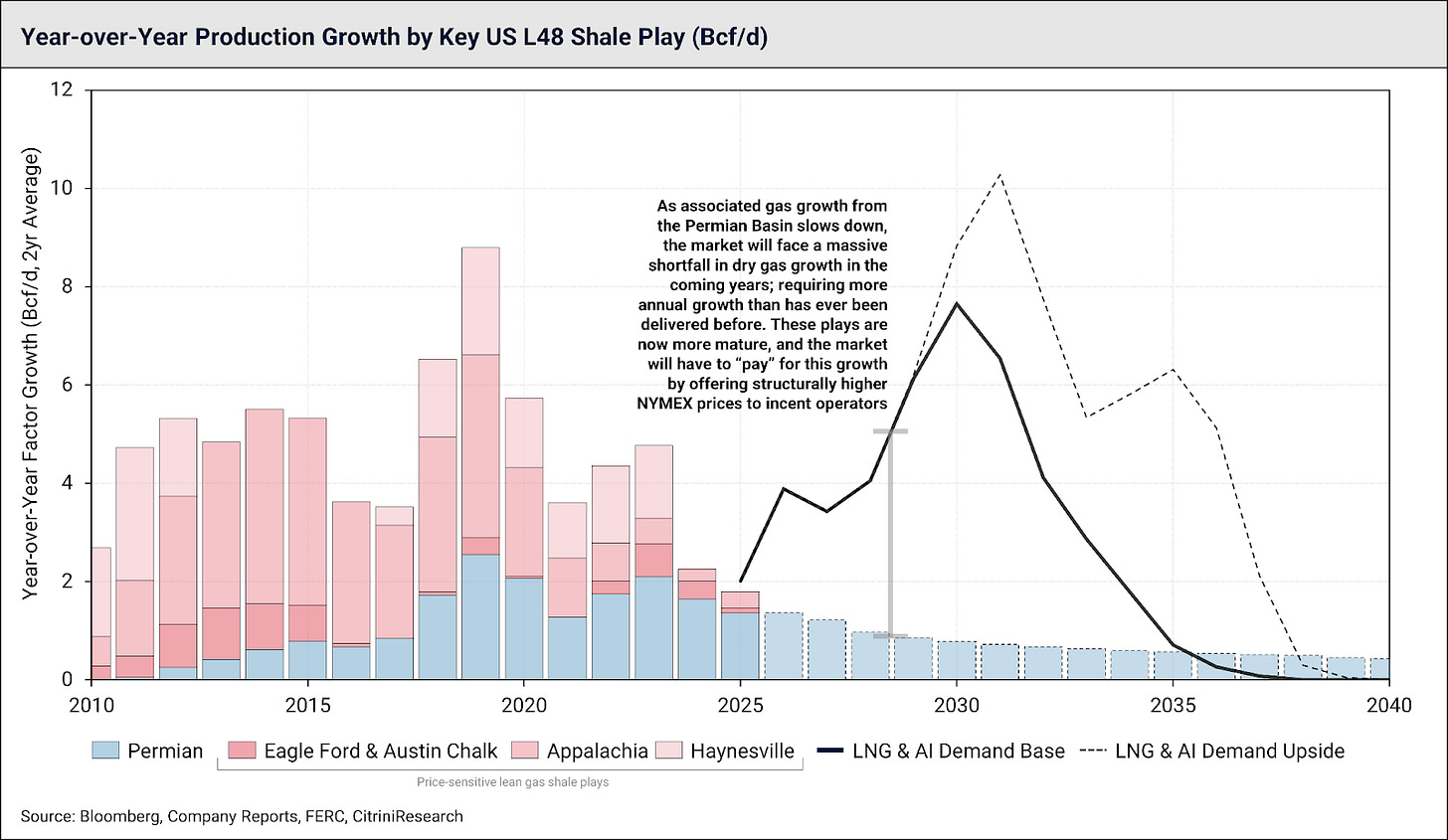

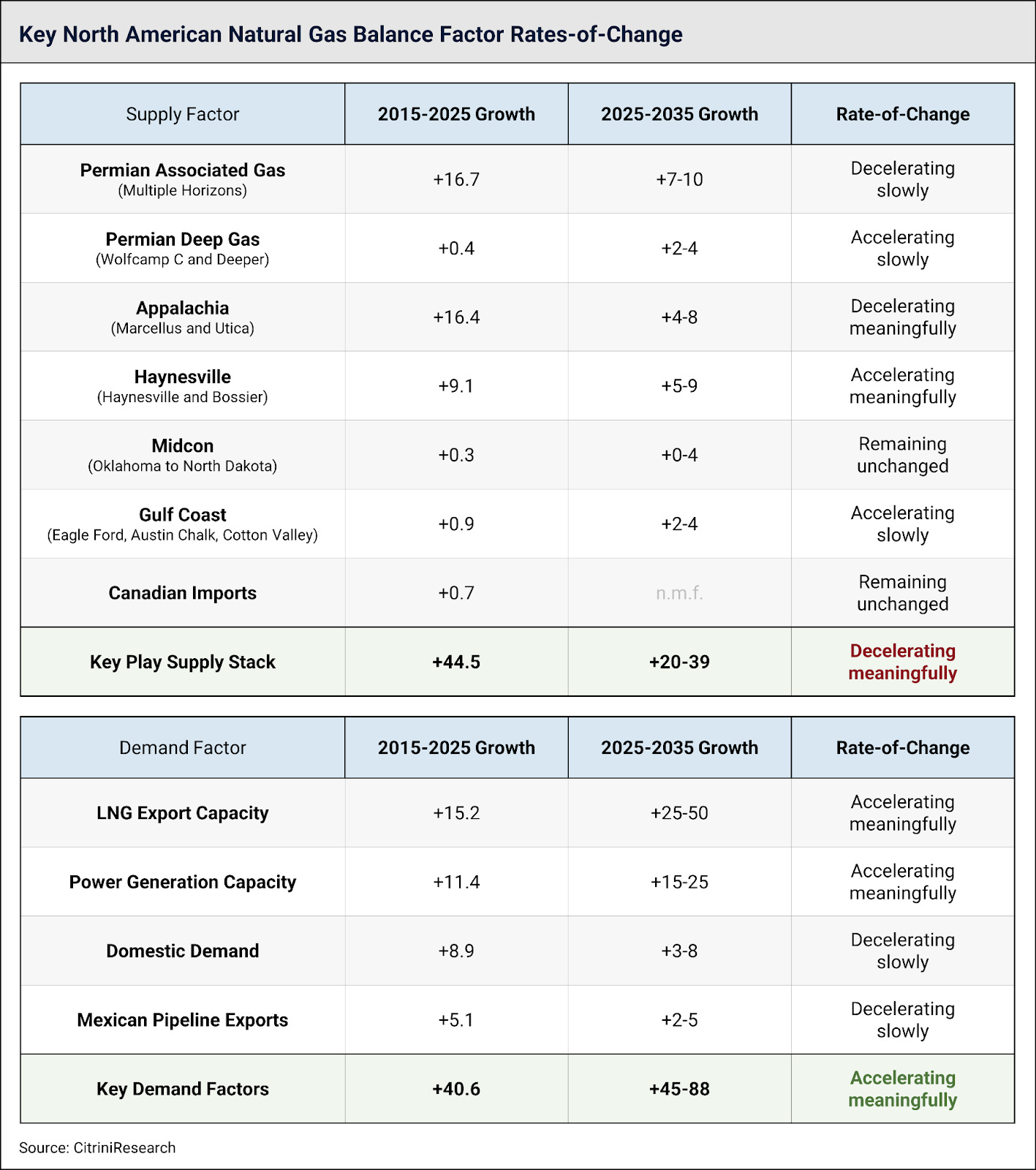

While latent growth from the Permian can answer some of that call, it’s becoming plain as day that we’re going to hit a wall and run out of cheap gas over the next few years. Below we show historical year-over-year growth from the key US gas plays, and forward demand projections from just LNG export facilities and data centers. We have built these forecasts using gas turbine delivery schedules and FERC export filings. This gas demand forecast isn’t a speculative estimate; it’s backed by committed infrastructure. What we’ve come to appreciate is that while the world expects the Permian to provide the gas growth to fuel all future projects, the Permian’s associated gas is dangerously “oversubscribed”, and the other dry gas plays will need to fill the gap with record-setting annual growth.

This puts the power back in the producers’ hands, and that growth isn’t going to come cheap. We have already learned that EQT US has been in negotiations to strike fixed-price supply agreements at ~$5/MMBtu, almost 70% higher than the current Henry Hub spot price, and ~100% higher than local benchmarks. This represents a radical change in supply dynamics – with hyperscalers willing to pay up for flow surety given the extreme importance of training uptime, and producers needing to be compensated for dispatching growth (for the first time in shale gas history).
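To make the premiums above concrete, here is a minimal sketch that backs out the benchmark prices implied by a ~$5/MMBtu contract. The helper name is hypothetical, and because the text gives approximate premiums (“almost 70%” and “~100%”), the outputs are rough implied levels rather than quoted market prices.

```python
def implied_benchmark(contract_price, premium):
    """Back out a benchmark price when the contract sits `premium` above it.

    contract_price = benchmark * (1 + premium)  =>  benchmark = contract / (1 + premium)
    """
    return contract_price / (1 + premium)


contract = 5.00  # $/MMBtu, the approximate fixed price cited in the text

henry_hub = implied_benchmark(contract, 0.70)  # "almost 70% higher"
local = implied_benchmark(contract, 1.00)      # "~100% higher"

print(f"Implied Henry Hub spot:   ${henry_hub:.2f}/MMBtu")
print(f"Implied local benchmark:  ${local:.2f}/MMBtu")
```

In other words, the cited contract price implies a Henry Hub spot price just under $3/MMBtu and local benchmarks around $2.50/MMBtu at the time of writing.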

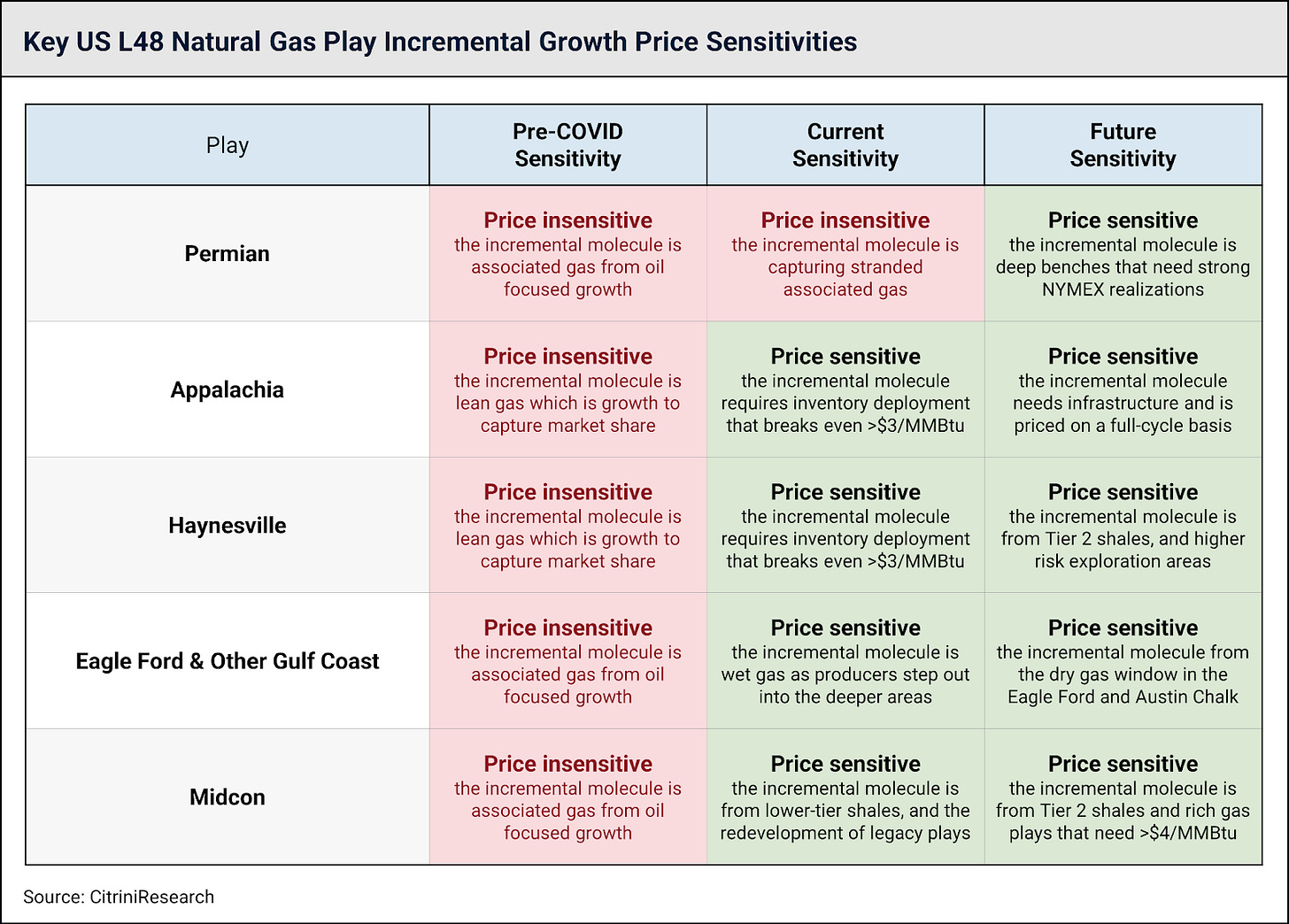

As passive growth from the Permian slows, the natural gas market dynamic shifts to a point where new molecules have to be incentivized to come onto the market. Below we show a table of price sensitivities by play, and through time. The Permian is the last bastion of “free” growth – and this is what the entire market is hanging on to. For context, there’s >30 billion cubic feet per day (Bcf/d) of gas demand lined up to capitalize on just ~10 Bcf/d of “free” Permian gas growth, and 30 Bcf/d is ~30% of current US production.
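The supply gap implied by those figures can be sketched in a few lines. The inputs are the approximate values from the text (demand is a lower bound, Permian growth is a rough figure), and the variable names are ours.

```python
# Approximate figures from the text, in Bcf/d.
demand_bcfd = 30.0          # ">30 Bcf/d" of lined-up demand (lower bound)
permian_growth_bcfd = 10.0  # "~10 Bcf/d" of "free" Permian growth
share_of_us_output = 0.30   # "30 Bcf/d is ~30% of current US production"

# Demand that must be met by plays other than the Permian.
gap_bcfd = demand_bcfd - permian_growth_bcfd

# Fraction of new demand the Permian's "free" growth can cover.
permian_coverage = permian_growth_bcfd / demand_bcfd

# Current US production implied by the 30% figure.
implied_us_output_bcfd = demand_bcfd / share_of_us_output

print(f"Gap left for other plays: {gap_bcfd:.0f} Bcf/d")
print(f"Permian coverage of new demand: {permian_coverage:.0%}")
print(f"Implied current US production: {implied_us_output_bcfd:.0f} Bcf/d")
```

Even on these rounded inputs, “free” Permian growth covers only about a third of the demand lined up behind it, leaving roughly 20 Bcf/d that has to be incentivized from elsewhere.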

While preparing this piece, when we asked others where they thought US gas supply growth would come from – from almost everyone we heard, “the Permian will take care of it”. The Permian won’t take care of it.

The Permian Basin has grown gas output consistently at 1-2 Bcf/d every year over the past decade. It has totally and completely overwhelmed the gas market in every way, and many have taken that to mean that the Permian will obviously continue to provide an infinite stream of free growth. That is simply not the case, and data centers accelerate this reality.

This has led to a complete flip-flop in supply dynamics over the past decade. Supply led price from 2015-2025; demand must lead price ~2025 onwards, and new data center driven demand growth accelerates this imbalance.

The proposal of fixed-price contracting with EQT suggests that data-center buyers, which are clearly very price-inelastic consumers, appreciate this evolving dynamic. As more consumers compete for finite molecules, we see no outcome in which Henry Hub prices and the long-dated forward strip don’t move structurally higher. They have to – the ‘free’ molecules of the last decade are running out, and this is a measurable fact.

Data centers are “baseload demand” in the truest sense, and completely price-insensitive. Those that have been burned by persistent US gas growth in the past may insist cheap gas growth will continue on forever, but we can’t see how that’s possible within the realms of physics and geology. Seasonality and storage aside, it’s very simple: supply needs to move higher, or demand needs to move lower – only a change in pricing can catalyze either.

Looking at Stargate specifically, we see in the images below a utility-scale natural gas plant and substation dedicated to powering just a portion of this facility, which will likely be expanded much further in the future.

Today, the two-dimensional renderings above have already transformed into massive physical structures representing new baseload power demand. We see 10 turbines that have been physically installed and will be drawing natural gas later this fall.

We also notice some simple cycle 35MW turbines from Solar Turbines - a company owned by Caterpillar (CAT). This demonstrates exactly how resilient this aspect of the buildout is: Combined cycle gas turbines have a lead time of 5+ years, simple cycle gas turbines have a lead time of 12-18 months, nuclear is 10+ years away. It’s not hard to imagine why, then, Stargate Abilene is using simple cycle gas turbines from GEV and CAT. Just to be clear, these are significantly less efficient than larger, combined cycle turbines. But efficiency doesn’t matter when you are taking what you can get.
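To put a rough number on that efficiency penalty, the sketch below estimates daily fuel burn for a plant running continuously at the 361 MW stated earlier. The two heat rates are our own illustrative assumptions (roughly typical ballpark values for simple-cycle and combined-cycle units), not figures from the permit or from any vendor, so treat the output as an order-of-magnitude estimate only.

```python
# ASSUMPTIONS (illustrative, not from the source): heat rates in Btu per kWh.
SIMPLE_CYCLE_HEAT_RATE = 9_500
COMBINED_CYCLE_HEAT_RATE = 6_500

PLANT_MW = 361       # continuous output stated earlier in the article
HOURS_PER_DAY = 24


def daily_fuel_mmbtu(plant_mw, heat_rate_btu_per_kwh):
    """Daily gas burn in MMBtu for a plant running flat out all day."""
    kwh_per_day = plant_mw * 1_000 * HOURS_PER_DAY
    return kwh_per_day * heat_rate_btu_per_kwh / 1_000_000


simple = daily_fuel_mmbtu(PLANT_MW, SIMPLE_CYCLE_HEAT_RATE)
combined = daily_fuel_mmbtu(PLANT_MW, COMBINED_CYCLE_HEAT_RATE)
extra_share = simple / combined - 1

print(f"Simple cycle:   {simple:,.0f} MMBtu/day")
print(f"Combined cycle: {combined:,.0f} MMBtu/day")
print(f"Extra fuel from choosing simple cycle: {extra_share:.0%}")
```

Under these assumed heat rates, the simple-cycle plant burns a bit under 50% more gas for the same output, which is the price being paid for a 12-18 month lead time instead of 5+ years.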

Is this alone enough to change the supply/demand equation for US natural gas? Not immediately, but in conjunction with the massive LNG export capacity being added, we think it’s likely natural gas prices will trend meaningfully higher (with the requisite increase in spot price volatility) over the next five years, as the market flips from supply competition from every corner of the country depressing prices to consumption competition from every corner of the country elevating prices.

Stargate Abilene’s plant actually pales in comparison to xAI’s Memphis Data Center, which is using more than 30 gas turbines, a mix of GEV, CAT (Solar Turbines) and SEI – these are currently operational per thermal imaging (Source: Ars Technica).

Beyond these continuously operating base-load generators, the facility also includes 18 small-scale (2-3MW) Caterpillar backup diesel generators.

The Stargate Abilene plant draws natural gas from the Atmos Gas Transmission system (ATO US) which moves associated gas from the Permian Basin east towards Dallas. The CEO of Crusoe, leading this project, has touched on the use of “flared gas”. Many believe that this associated gas (i.e. associated with oil production) that hyperscalers are pulling from the Permian is “free” – but that’s not the case. Associated gas is not “captured” so to speak, as in, it wasn’t being flared before. If you captured all the excess flared gas in the Permian, it would only be enough to power ~5% of the full ~5 GW Stargate complex.

While yes, associated gas is a ‘waste product’ whose realizations have little impact on oil-well economics, the entire US Gulf Coast LNG sector was built around the assumption that it can have this associated gas for free. If data centers begin to siphon away associated gas supply, it’ll be detrimental for LNG exporters and utility buyers elsewhere. The gas market is a closed loop, and data centers will massively pull forward a natural gas bull market where dry gas producers have pricing power, as their molecules are truly needed to balance markets.

It’s becoming increasingly clear that diesel and natural gas power generation, both grid-connected and ‘behind the meter’ (which simply means the power generation is on-site, and has no interaction with the grid) will be the only way to power data centers at scale. There’s a reason there is yet to be one data center announced that actively employs solar panels on site as a primary source of energy. It’s because solar panels don’t provide the reliability that hyperscalers need. Notice that the only solar power announcements come in the form of “grid-connected offsets” and not on-site generation capacity (i.e. the data centers are actually drawing from reliable natural gas power, and ‘offsetting’ it with less reliable power). Adding more solar to grids will help, but it’s not inherently dispatchable power, which is what data centers need. Not matching baseload demand with baseload power will undisputedly cause unstable power grids in the future, similar to what many European countries face today. Adding more intermittent power, while helpful, isn’t the most optimal way to allocate capital across the grid.

The recipe for an unstable grid is matching near-permanent power consumption with non-permanent generation (i.e. solar and wind). Data centers will endeavor to run 24-7-365, and need to be matched with power that can controllably run 24-7-365. In the future that may be nuclear, but today, that’s natural gas. As retail power prices rise (even if data centers aren’t the reason at all, and the increase is still less than inflation), data centers will bear the social brunt of higher utility bills; as such, it’ll be important that well-planned grids begin to moderate what types of generation can connect into the grid to ensure reliability and affordability.

While pairing the marginal generator (which during the day is almost always solar) with batteries would seem to always be the most cost-effective solution on a unit basis, once you consider the capital that must be invested in transmission infrastructure to achieve grid reliability, solar and batteries are just an expensive way to secure the operational flexibility (and surety) that natural gas transmission and generation systems get you. While the unit economics of renewables are appealing, they don’t stand up when considered in tandem with grid reliability, which is bound to become a social and political issue in the future if not carefully planned.

Putting it in simple terms: data centers are almost guaranteed to run all the time; solar tries to run all the time, but it’s impossible to guarantee that it will in practice. Natural gas, on the other hand, can almost guarantee it’ll run all the time. Very simply, adding guaranteed power draw without guaranteed generation puts power grids at risk. The $/kWh math, in practice, doesn’t really matter if consumers face elevated blackout/brownout occurrences. The only way to avoid that is by favoring dispatchable natural gas power generation to pair with new data center power consumption. As such, we hold a very positive outlook on the trajectory of natural gas demand being accelerated by the massive data center build-out underway.

Do you want some hints that we’re right? In 2024, Meta added to their board one of the most legendary gas traders in history – John Arnold. Since then, Meta has agreed to fund >2 GW of new natural gas fired power generation capacity in Louisiana, along with setting up a new power trading division (FERC filing for Atem Energy, which is Meta spelled backwards, available here). Their new Hyperion data center campus is situated at the crossroads of four major long-haul transmission pipelines, ensuring gas-flow-surety. Meta thinks markets are about to get more volatile (both natural gas, and power), and we do too.

The infrastructure to ensure that fuel is delivered, that demand is met, that the backup diesel generators do their job - it’s all going to be meaningful when there are trillions of dollars being spent. Those CAT diesel gensets for each building require dedicated above-ground storage tanks (ASTs), double-walled and often encased for environmental compliance. Companies like Matrix Service (MTRX), Chart Industries (GTLS) & Wärtsilä (WRT1V FH) specialize in fabricating and integrating these tanks at industrial scale. From there, buried fuel mains and transfer pumps tie into day tanks near each generator, with automated controls to cycle and polish fuel to prevent degradation. Even a few hours of stale diesel can create catastrophic downtime when the transfer switch calls for power. Beyond diesel, the natural gas plant itself requires a parallel lattice of fuel delivery infrastructure: transmission taps, high-pressure regulators, and metering skids. That’s the quiet domain of midstream contractors and OEMs like Kinder Morgan (KMI), Atmos Energy (ATO), Williams Companies (WMB).

Power and Energy: The Transmission

We see high-voltage transmission lines, steel lattice transmission towers, switching and transformation equipment, power transformers, busbar systems, lightning arresters and multiple bays for redundancy. Currently, Abilene has a 200MW, 138kV substation, which is being expanded with a 1GW, 345kV substation with five main power transformers (for a total of 1.2GW).

Tier 1 suppliers include ABB, Siemens, GE and Schneider Electric. But if you’ve viewed these videos, considered the fact that there’s roughly another trillion dollars worth of sovereign and private investment in the pipeline for near-identical projects, and realized what that scale entails, you also realize that the Tier 1 designation isn’t that meaningful. The sheer capacity required here means that it’s likely, eventually, lead times get so long at the Tier 1 companies that the tier 2 and 3 companies also begin seeing orders. There are only so many companies that can supply what’s necessary.

For example, Powell Industries (POWL) specializes in custom PCR (Power Control Room) buildings that house the switchgear visible in our imaging. They are one of the few companies that can deliver integrated electrical houses at the scale that Stargate requires. The stock might be up a lot, but their 3-year backlog is probably going to become a lot longer. Any companies with existing inventory or manufacturing slots will see huge price premiums.

We can also see the transmission lines running north-south along the eastern perimeter and a new, expanded substation being constructed:

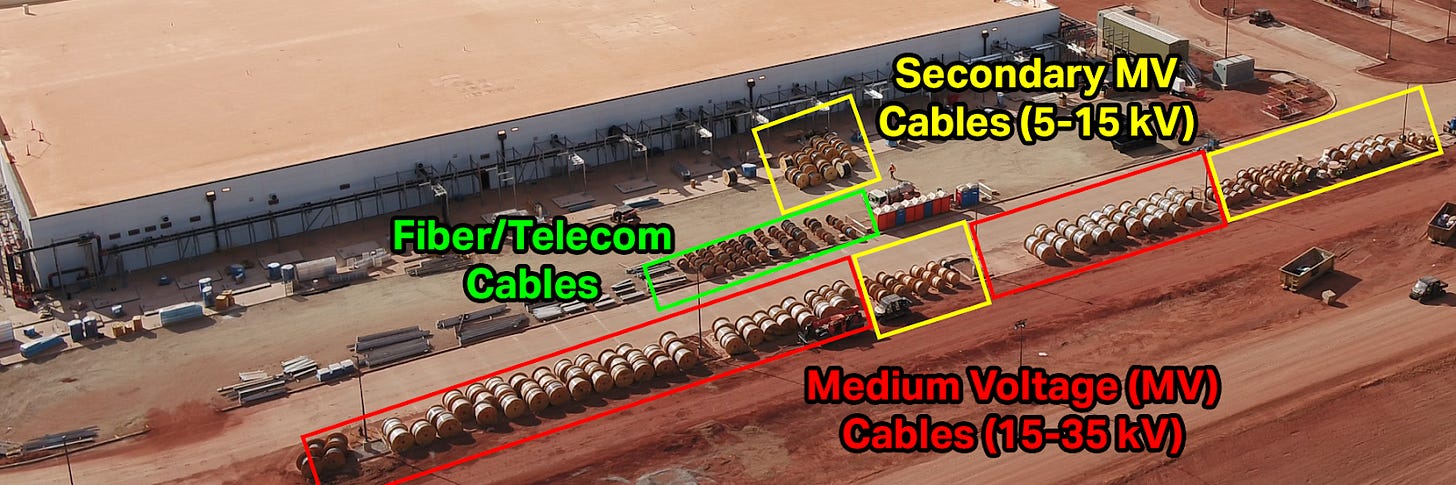

This multiple-substation layout will require a significant amount (millions of feet) of medium-voltage cable to distribute power throughout the campus. Prysmian, which acquired Encore Wire back in 2024, has already signed on with a $118M contract to supply this cable.

Data centers of this size demand “2N” redundancy, meaning every watt that flows through the system has a twin path. Each of the Abilene halls will house UPS (Uninterruptible Power Supply) modules measured not in kilowatts, but in tens of megawatts, paired with lithium-ion or advanced lead-acid strings, copper busways, and automated transfer switches.

Lead times for large-scale UPS systems have stretched to 18–24 months, as hyperscaler orders soak up factory slots. That demand spills down into the supply chains of battery vendors, switchgear makers, and busbar manufacturers. It also means even Tier 2 players, Mitsubishi Electric for UPS, Saft (TotalEnergies) for battery systems and the others mentioned on our sheet, are starting to see orders they wouldn’t have touched five years ago.

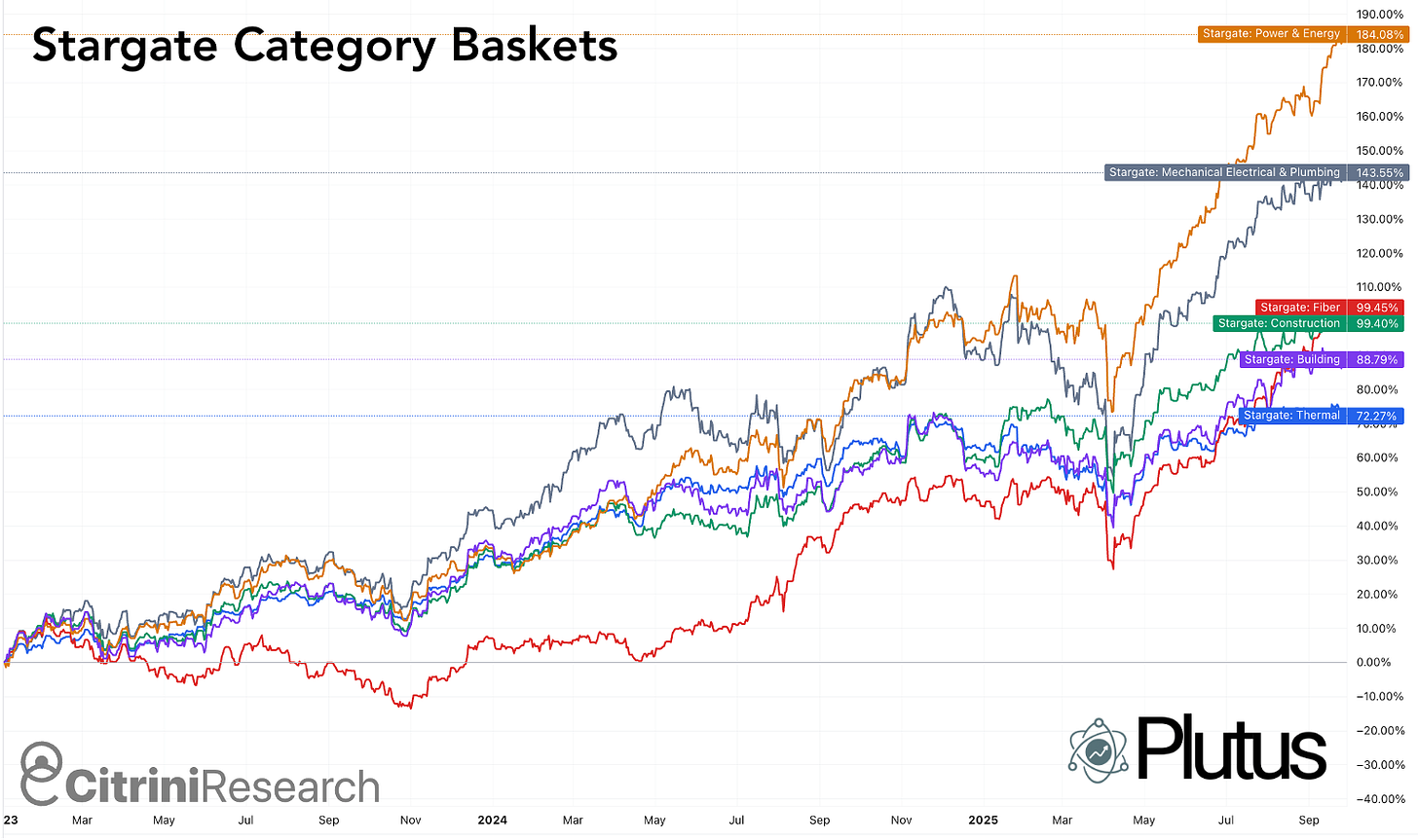

You can view the full basket, along with weights and performance, by clicking here.

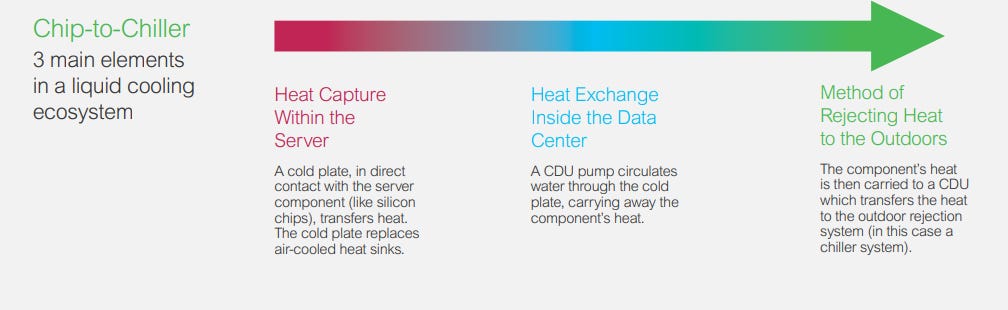

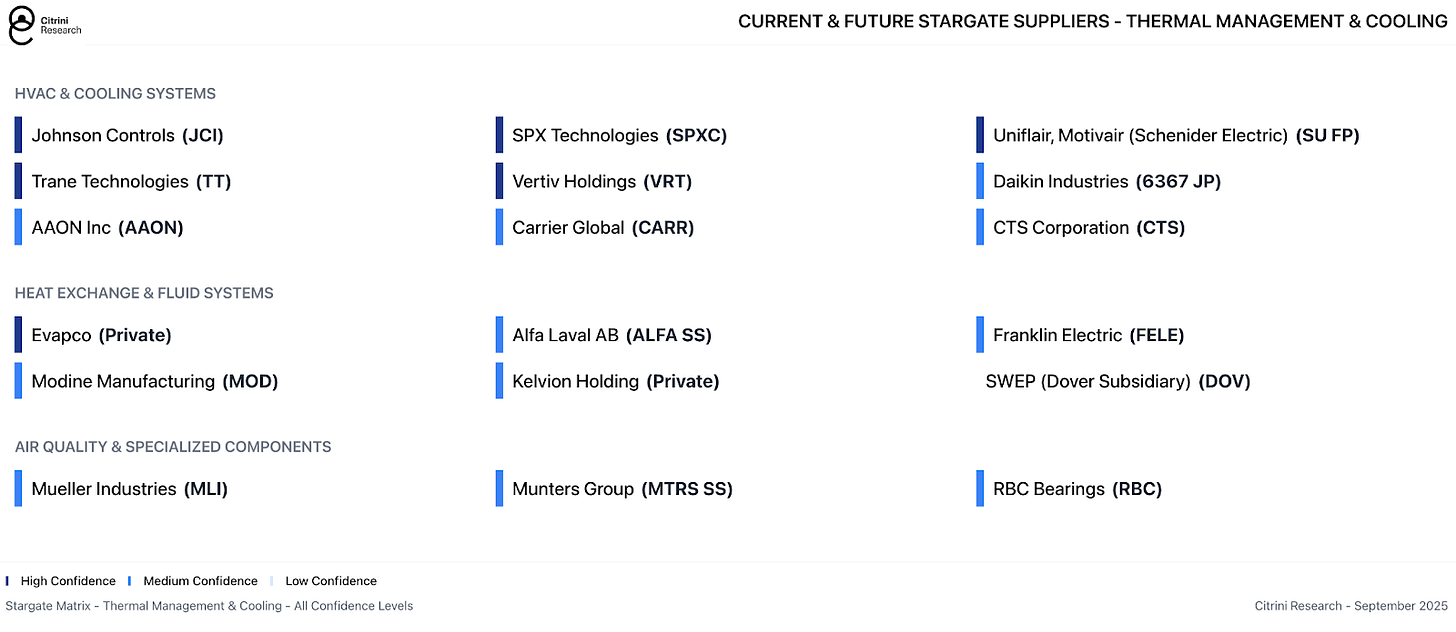

Thermal Management & Cooling

In the images and videos, we see extensive evidence of exactly what it takes to cool the Machine God’s infrastructure: cooling towers/dry coolers, liquid cooling manifolds, pumps, heat exchangers, CRAH/CRAC, water treatment.

We see long arrays of rectangular modules with axial fans on top and two large supply/return headers feeding individual drops along the bank. These reject heat from a warm-water (glycol) D2C loop to ambient air. What’s implied but not visible from the exterior are the CDUs (Coolant Distribution Units), manifolds, and at-rack cooling, which are likely supplied by Vertiv/Liebert and/or Schneider Electric. Companies like Modine (Airedale, MOD), Munters (MTRS SS), Trane (TT) and Carrier (CARR) are trying to get in on this space as well, but Vertiv (VRT) remains the clear leader with Schneider Electric (SU FP) a somewhat distant second.

We also see the cooling towers, chillers, tanks and radiators. Companies like SPX Technologies (SPXC), Daikin (6371 JP), SWEP (DOV) are potential suppliers, among many others - see our spreadsheet for more detail.

Each building structure is flanked by 39 cooling towers on each side. With eight buildings currently under construction at the site, that equates to 624 enormous HVAC racks, each one dwarfing the size of a school bus. Below we see more of the underlying equipment under these gargantuan cooling arrays currently under construction.
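The 624 figure follows directly from the per-building count; a short sketch of the arithmetic, using only the numbers in the paragraph above (the variable names are ours):

```python
# Counts taken from the text.
towers_per_side = 39
sides_per_building = 2
buildings = 8

towers_per_building = towers_per_side * sides_per_building
total_towers = towers_per_building * buildings

print(f"Cooling towers per building: {towers_per_building}")
print(f"Cooling towers across the site: {total_towers}")
```

That works out to 78 units per building and 624 across the eight buildings currently under construction.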

Looking at images from roughly 4 months ago, we can see the trench work for water/glycol distribution to and from the adiabatic/dry fluid cooler D2C loop we see installed today.

Source: Schneider Electric

The heat rejection farm is extensive and massive, and each structure requires them.

Even in a closed-loop design, thousands of gallons of process water must be conditioned, filtered, and chemically balanced to prevent scale, corrosion, and microbial growth. Companies like Evoqua (XYL), Pentair (PNR), and Veolia (VIE FP) provide these treatment skids, resin beds, and dosing systems. Pumps and manifolds may move megawatts of heat, but without reliable filtration the entire system gums up.

A life lesson applies here: “there is no such thing as a free lunch”. When we wrote our piece “High Quality H2O”, we went into detail on the water requirements for high performance computing (and how they might be reduced / made more efficient). One of those ways is closed-loop liquid cooling. But nothing in life is free – rather, everything requires a tradeoff. Yes, liquid cooling reduces the water demand associated with air/evaporative cooling of data centers. It also requires significantly more power.

Again, even when it seems unrelated, nearly everything comes back to the simple equation that this is the magnum opus of turning money into power consumption in pursuit of creating the Machine God we’ve been promised. And, if it turns out that Machine God is a false deity, well…we are still going to build. The music is playing and nobody is looking for a chair - that is, unless the chair goes into a data center.

Who Supplies What:

See the Thermal Management & Cooling basket here.

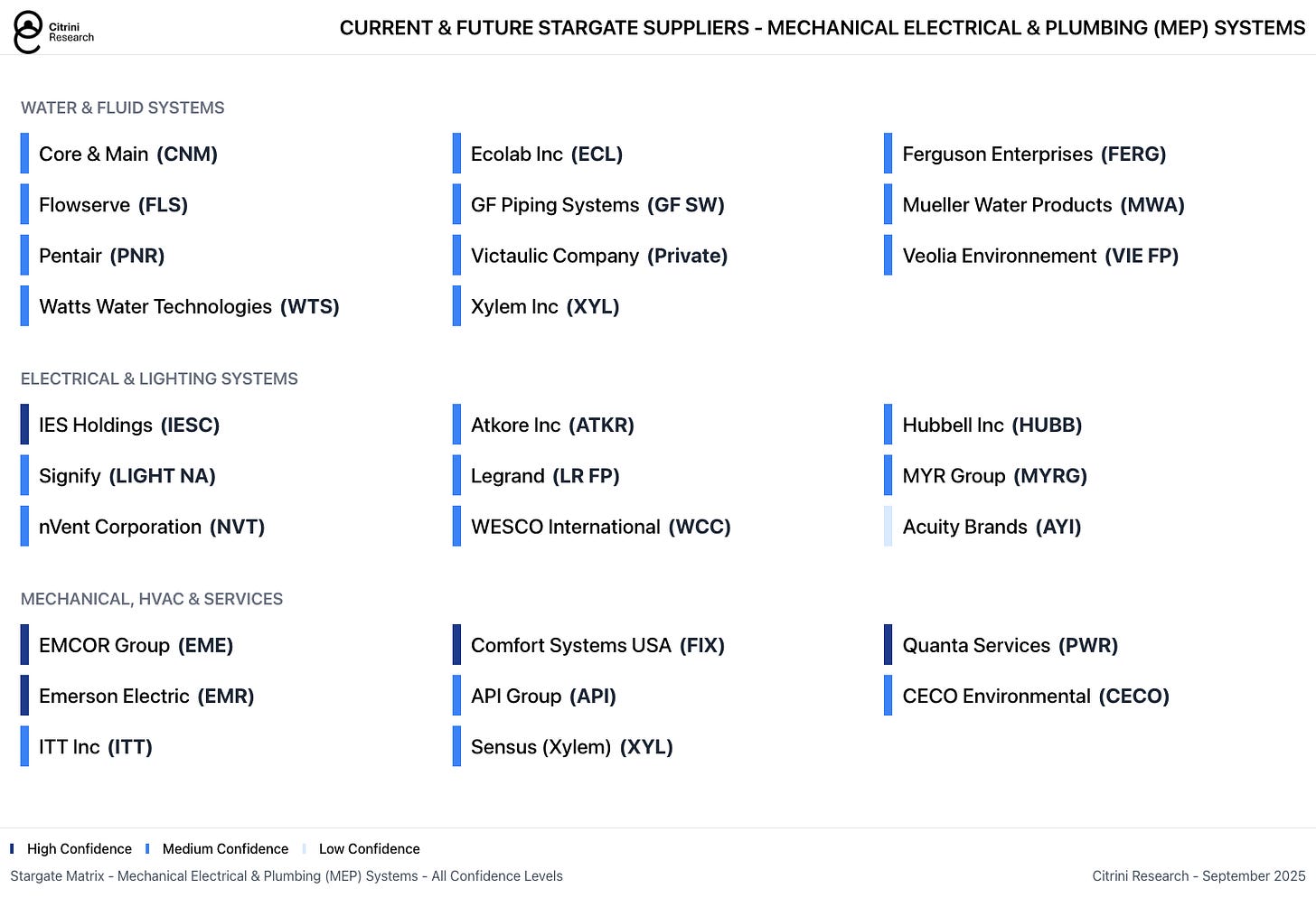

Mechanical, Electrical & Plumbing (MEP) Systems

Building facilities at such extraordinary scale requires literally hundreds of miles of supporting infrastructure, both to enable the high-value systems inside and to ensure safety and fire suppression, along with simpler systems like civilian plumbing that allow such massive civil works to function. These are things like ductwork, piping, valves, fire suppression, compressed air, water & sanitary, and fueling systems.

While many of these components are less obvious from the external view, we can simply recognize the sheer size — space that is optimized to house equipment in the most efficient possible layout. Each major piece of electrical or HVAC equipment also necessitates ducts, electrical raceways, and piping. Of course, these campuses also require civilian infrastructure like plumbing, drainage trenches, and fire hydrants.

Outside, we see what are likely air treatment units or transformers running along the north-south side (implying 768 units across the entire project).

Below, we see rows of large wooden reels holding heavy-gauge power and data cabling, or fiber bundles for internal/external connectivity, staged for pulling into conduits, trays, and underground ducts that will connect substations, chillers, generators, and building systems. Some smaller-diameter spools may be destined for fire suppression mains (hydrants, sprinklers) or potable/process water. Further, we see stacks of large-diameter pipes for mechanical systems. They connect the cooling towers/dry coolers to the facility’s internal HVAC loops.

Stargate will likely rely on a range of MEP providers including the ubiquitous large-cap names with broad construction exposure throughout the economy. But even for diversified construction companies, these represent massive assignments and material revenue opportunities, which are only beginning in earnest as these mega-projects begin to break ground.

Who Supplies What:

See the Mechanical, Electrical and Plumbing basket here.

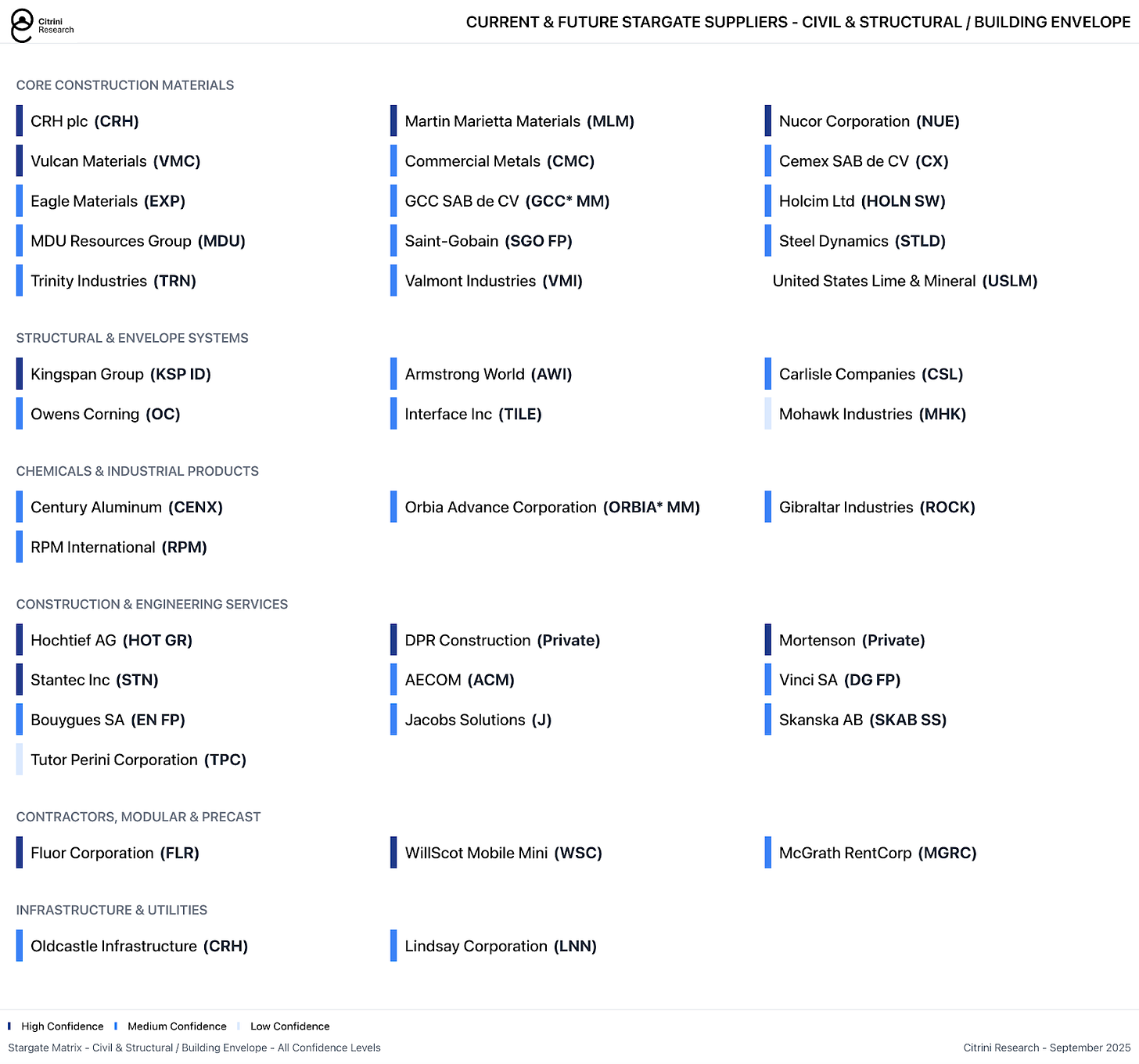

Civil & Structural / Building Envelope

In this (outdated) overhead image we can effectively see a timelapse of construction in the progress of buildings from left to right. Beginning with basic site preparation and base layer foundations, to initial scaffolding and support, to roofing and eventually full envelope.

Surrounding the physical structures are civil works including roads, drainage trenches, firefighting egress, hydrants, parking, and security facilities.

Now take a look at the progress that’s being made:

Foundations, insulation, tilt-up panels, steel, roofing, exterior cladding/IMPs, doors & security vestibules, paving, fencing. This might be the least sexy part of it, but the fact remains that it’s integral. Literally. Building integrity is extremely important when each hall contains equipment worth the equivalent of the GDP of Albania.

So while we may not see turbine-esque backlogs for the roofing, there are going to be a lot of contracts for mission critical building infrastructure. And mission critical pretty much means standard isn’t good enough – take the paneling:

These aren’t standard warehouse panels. Data center IMPs require R-values of 25-40+ for extreme thermal efficiency, vapor barriers to prevent condensation in high-heat environments, fire ratings meeting FM Global standards, acoustic dampening properties and 42-inch to 48-inch widths for rapid installation. Each new major project will utilize 5-10 million square feet of insulated metal panels. This logic extends to pretty much everything in the data center.

Who Supplies What:

See the Building basket here.

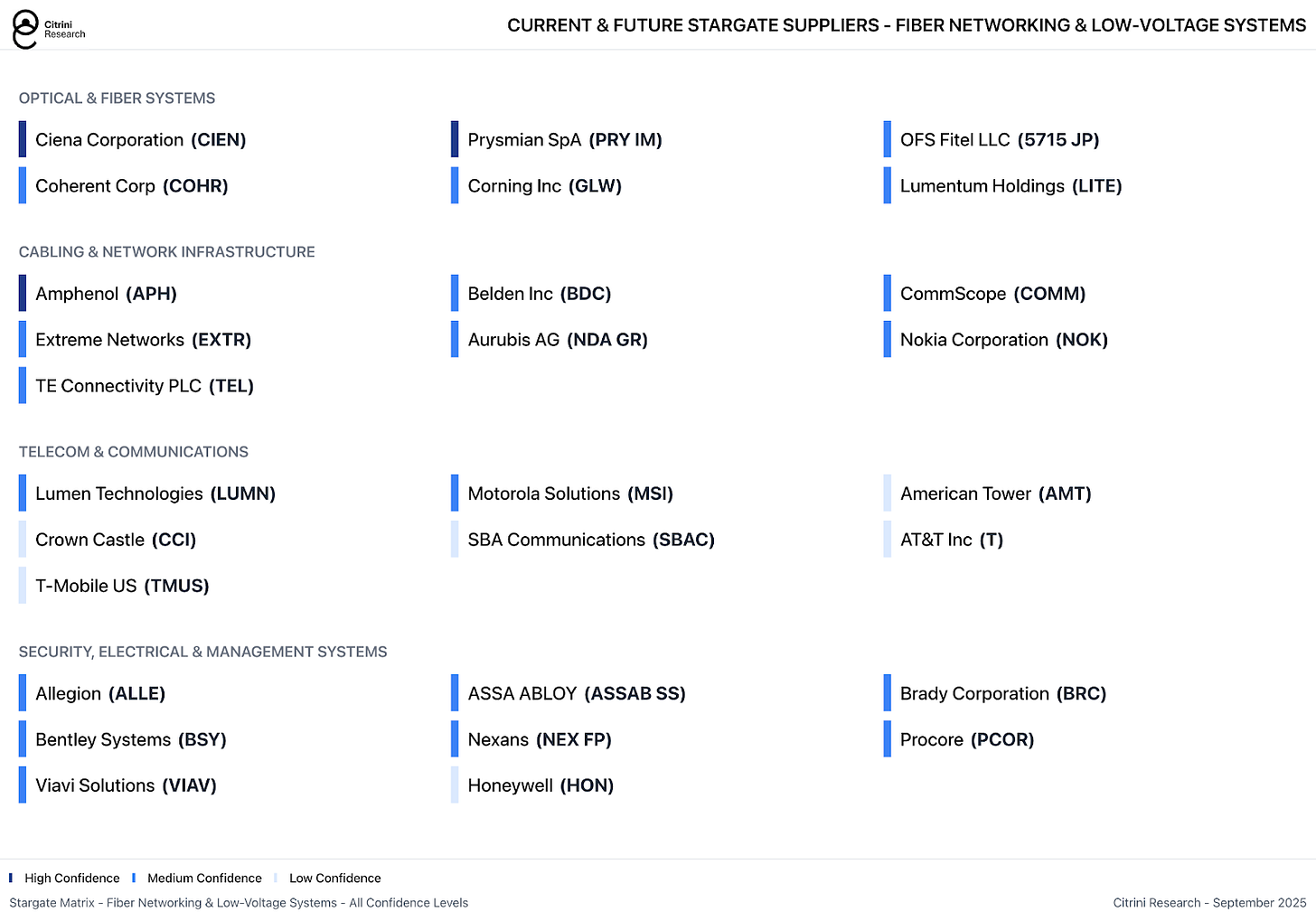

Fiber, Networking & Medium/Low-Voltage Systems

Perhaps the most cookie-cutter aspect of Abilene’s first phase is destined to be the networking infrastructure designed to enable high-speed communications between rows of servers and massive IT halls. Within those halls we can assume that Arista (ANET) will dominate the local fabric backbone with top-of-rack switches and routers while Nvidia’s NVLink acts as the blood cells carrying information from row-to-row for training runs and between GPUs for revenue-generating inference workloads. We’ve discussed the winners here extensively over the last 2+ years, so instead we turn to the real challenge: scaling coherent logical fabrics across data center campuses.

The magic of Stargate really comes into play when we begin to expand beyond this flagship 1,000-acre complex. With six sites already announced (across five confirmed locations and one TBA), the promise of Stargate comes from the ability to operate these campuses as a single data center.

The key players that will be entrusted to close the data loop spanning most of the contiguous United States should also be familiar at this point: 400G/800G Ciena (CIEN) modules containing laser components from Lumentum (LITE) and Coherent (COHR), used to light up miles of Prysmian (PRY IM), CommScope (COMM), Corning (GLW) and/or Belden (BDC) cable, likely installed by Dycom (DY) or a close competitor. The playbook is certainly familiar, but the scale at which it will need to be executed really is reminiscent of a moonshot or a digital Manhattan Project.

It has not been announced - nor probably fully determined - how exactly these sites will be connected together, but we can safely assume that it will be a blend of private projects, partnerships with ISPs/telcos like AT&T or Lumen for new, shared connections, and more traditional peering arrangements that will see Stargate data loads routed through existing networks like Crown Castle’s fiber assets (soon to be owned by EQT’s Zayo) or Verizon’s rapidly expanding backbone.

Who Supplies What:

See the Fiber basket here.

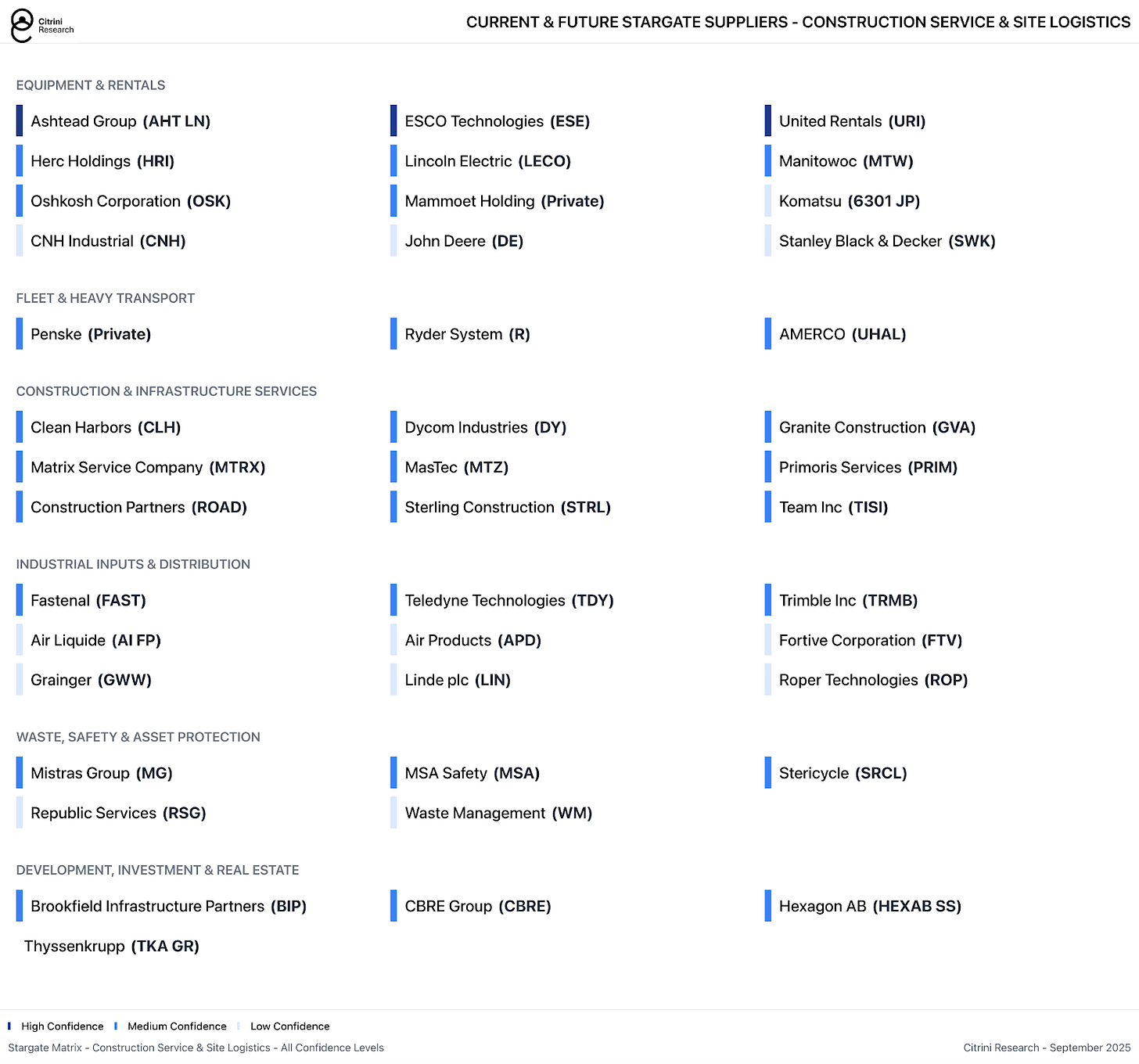

Construction Services & Site Logistics

Now we are really getting to the least-exciting but most-necessary part of all this. Before the data flows, the coffee has to. While it can be easy to think of these projects as simply appearing out of thin air, the footage drives home the scale of what’s necessary. Electricians, contractors, plumbers, the architects and EPC companies that tie it all together, heavy equipment, rentals, modular offices, testing/commissioning, etc. It’s a bit of a throwback to our thesis on vocational schools like Universal Technical Institute (UTI): we are simply going to need more tradespeople.

Heavy equipment is omnipresent, unsurprisingly. Caterpillar (CAT), Komatsu (6301 JP), and Deere (DE) machines clear, grade, and trench; United Rentals (URI), Ashtead (AHT LN), Herc Holdings (HRI) and similar providers supply the fleets of lifts, welders, and generators that make the site look like a pop-up city. Prefabricated offices and testing labs are craned in by WillScot (WSC) and similar providers. Tens of thousands of deliveries, from transformers, steel panels and spools of cable to gas turbines, piping and server racks, have to hit precise time slots to avoid gridlock. That takes specialized contractors, logistics integrators and EPC firms. While many are private, like Crusoe and DPR, other large players like Primoris (PRIM, which is involved in this project), Quanta Services (PWR), EMCOR (EME), and AECOM (ACM) are public.

And once things are put in place, commissioning and inspection groups such as Acuren (TIC), the subject of our latest thesis, and Mistras (MG) then step in to validate welds, pressure-test piping, and certify systems before they ever carry current. Without their sign-off, a turbine is just sculpture. All told, the construction and logistics layer is the great equalizer: it doesn’t matter how many GPUs NVIDIA can ship if nobody can pour the foundations, weld the beams, or stage the tanks on time.

See the Construction Services basket here.

Our Main Takeaways

While we’ve been aware of most of this, and have certainly benefitted from the scale, it really is another thing entirely to view it firsthand. We’ve added names like EQT to our portfolio and will be looking to add others related to natural gas and gas infrastructure, as it’s painfully obvious that gas is the only way we are getting the compute we need now - regardless of what nuclear does by 2030.

Beyond that, we’ll continue monitoring these names for inclusion in our Fiscal and AI baskets. Many of these names have extremely diverse exposure, but it will become increasingly important to track which end up deriving an increasing share of revenue from data center construction – we really cannot emphasize enough the scale of what’s happening. It’s likely that the power/infrastructure part of the AI trade continues for as long as these massive monuments to compute continue being erected and funded. There are simply some companies without which you cannot conceivably accomplish these goals, and whether or not we are overbuilding capacity… it’s going to get built. Our spreadsheet contains our best effort at flagging all the companies that are benefiting or should benefit from this massive effort, but we’re sure there will be some we haven’t captured.

We’ve uploaded each category as a basket to Plutus - each linked below - for ease of tracking, and we’ll keep these updated as we discover anything new.

All Basket Links:

Stargate Mechanical Electrical & Plumbing