Preface

In my piece on how to play AI beneficiaries (which you should read first here), I laid out the case that the best risk/reward in my triphasic model came in the form of data centers: being long the picks and shovels (the bottlenecks in the AI hyperscale buildout) so that the uncertainty of how the paradigm might play out is reduced. In other words, you should be long the companies whose benefit is nearly inevitable and whose risk is minimal (Nvidia is, of course, the quintessential example).

However, I have gotten some feedback from readers who are interested in tempting fate by trying to pick the ultimate winners in AI software and services: the killer app, the AI assistant, the company of the future. These are the companies that gain directly from the apex of the second and third phases (Democratization of AI/ML and Specialization/Integration of Models and Tools, respectively), rather than the enablers and implementers that allow AI to proliferate.

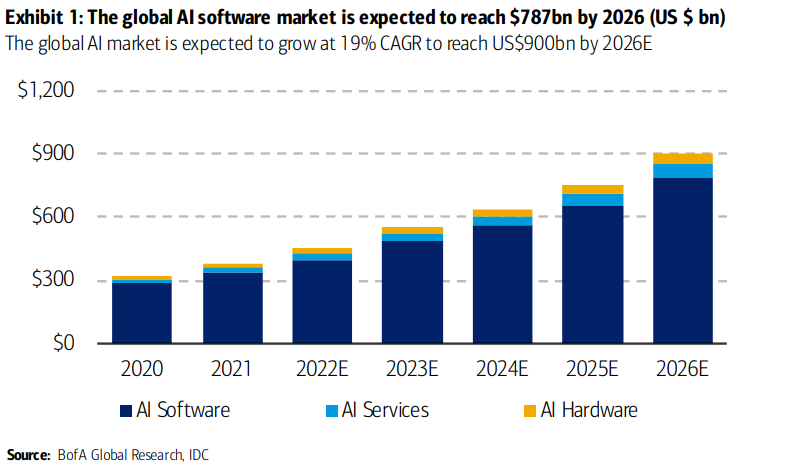

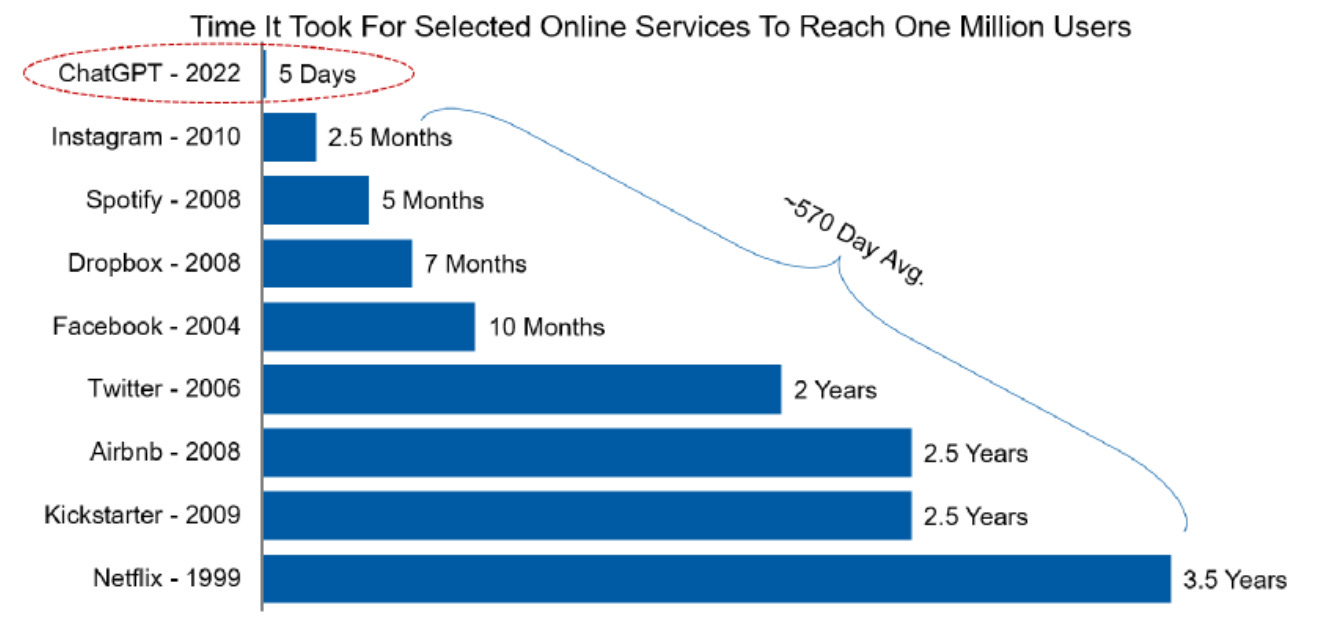

I can’t say I blame you, I mean…look at this:

Certainly, being right here could be legendary. I think we can create a framework to begin analyzing the potential. I like long/short in this area more than being outright long or short, especially given the way the market surrounding this stuff has been acting recently. With the macro overhang in the market (or at least in the sentiment surrounding it), there’s a good argument for bringing down net exposure: being long the beneficiaries and short the companies that are less well positioned for a new paradigm in computing and software (long the winners, short the losers; if only it were that easy).

Even if you are not trying to predict the winners of Phase 3, having a way to think about whether the businesses you currently own could be hurt or helped by a period of rapid technological advancement has historically served investors well. With the second and final installment of my holistic market analysis of this technology, I hope to finish a primer that does two things: it enables you to find, evaluate and make decisions about companies in the way I have been benefiting from since I began adding exposure to this theme last year, and it provides some long, short and long/short pair ideas generated using this framework.

I have been pretty good at developing ways to think about how the market is processing themes. So let’s do some more of that.

I present a framework and a scoring metric for gauging the fragility of sectors, subsectors and individual companies to AI/ML disruption, then build a list of 15-20 names in the sectors most likely to be negatively affected and show how they may be implemented as longs, shorts or long/short pairs that capture the trend. Finally, I examine the best ways to play potential disruption in various sectors and discuss some areas of software that resemble data centers in being an “enabler” for the entire technology.

For specific questions within software and SaaS, Sophie presents how she is viewing who wins and loses.

Please click here for a spreadsheet of the tickers mentioned in this article.

Please click here for the short-only and long/short portfolios mentioned in this article.

Please click here to view this article as a PDF

Phase Three, Continued

Anticipating the Impact of AI/ML on Businesses

Citrini

The first tier will focus on threats and opportunities in AI at a top-down level, looking at disruptions to the sector or subsector (i.e., does it provide a service that AI/ML makes easier or cheaper to bring in-house?). The second tier will take a bottom-up approach, focusing on individual companies’ strengths and weaknesses as they relate to AI/ML and on whether they are positioned to take advantage of a new technological paradigm.

We have to sift through the companies that are trying to look as if they are using AI to gain an advantage when, in fact, they are the ones losing it. We will see many companies touting the benefits of AI/ML, its potential cost savings and efficiency, only to end up spending more in capex, whether implementing it or offering it as a product, than can ever be recouped.

Overall, some companies and products will experience price deflation as recreating their value proposition becomes a matter of simply using AI tools (think web applications or, for an example that has already happened rather than one still to come, think Chegg). Conversely, we will see companies effectively leverage AI to cut their costs dramatically. Data utilization and commodification will be a significant talking point in technology over the next five years. This document, to the greatest extent a generalist can hope, will attempt to discern which companies fit into which categories. First, let’s define AI again so that we’re all on the same page.

AI encompasses any form of data-driven automation. Despite ChatGPT fever, it’s important to acknowledge that AI isn’t a novelty in enterprise software; it has been in operation for a considerable time in the form of machine vision, machine learning, predictive AI, generative AI, natural language processing and more. ChatGPT captured the hearts and minds of the public through its ability to create something completely novel, a trait not typically associated with computer code. Generative AI is powered by complex models with millions to hundreds of billions of parameters, built on artificial neural networks. These networks are loosely inspired by the structure of the human brain and are a subset of machine learning algorithms. User-guided generative AI models can craft something entirely new, which sets them apart from predictive analytics.

Something to remember in this article is that I’ll be talking about AI in the context of both generative AI and machine learning. So I don’t want anybody on Twitter telling me that ChatGPT isn’t going to replace warehouse workers. Machine learning (and vision) will, eventually. What I’m doing here is narrowing the timeframe to about 2-3 years and asking “how will machine learning, generative AI or deep learning have evolved, and whom will it have affected?” So yes, if a company like Symbotic or Rockwell Automation makes significant strides in cost and technology, maybe that becomes a consideration. But for now I’m leaning toward finding the next Chegg: a company that is in danger of losing revenue in the next 12-24 months because of a feasible AI/ML solution.

In the evolving landscape of artificial intelligence, a comprehensive examination reveals the existence of three main tiers of generative AI utility, though the discourse often omits the most critical one. OpenAI's public models represent the first level, wherein the models provide substantial amounts of useful information. Complex inquiries are met with comprehensive and detailed responses. Text is turned into images, images are turned into text, data is classified in ways you would have previously had to hire a human in order to accomplish. Unlike predictive analytics/AI, generative models create something entirely new in a way that only humans were able to before it existed.

Public models offer access to the common knowledge pool, meaning anyone can tap into it. Take, for instance, resume writing: the model can generate a resume superior to 95% of existing examples, thanks to its extensive learning from millions of resume samples and LinkedIn posts. If the data is publicly available, the barrier to creating a tool with it is lower, and the tool must be of higher quality to succeed. Companies whose datasets are substantially similar to public datasets, or are already public, do not have a moat against AI.

The next level incorporates fine-tuned models, wherein Large Language Models, Diffusion Models, Computer Vision models, Multimodal models et cetera can learn and adapt based on personalized data and use cases. The process, albeit resource-intensive, bolsters the model's capabilities and offers a potent augmentation method. However, this isn't the sole approach. Ideally, organizations should leverage all three types: public models for generic tasks, private models for personalized applications, and the often-overlooked third method.

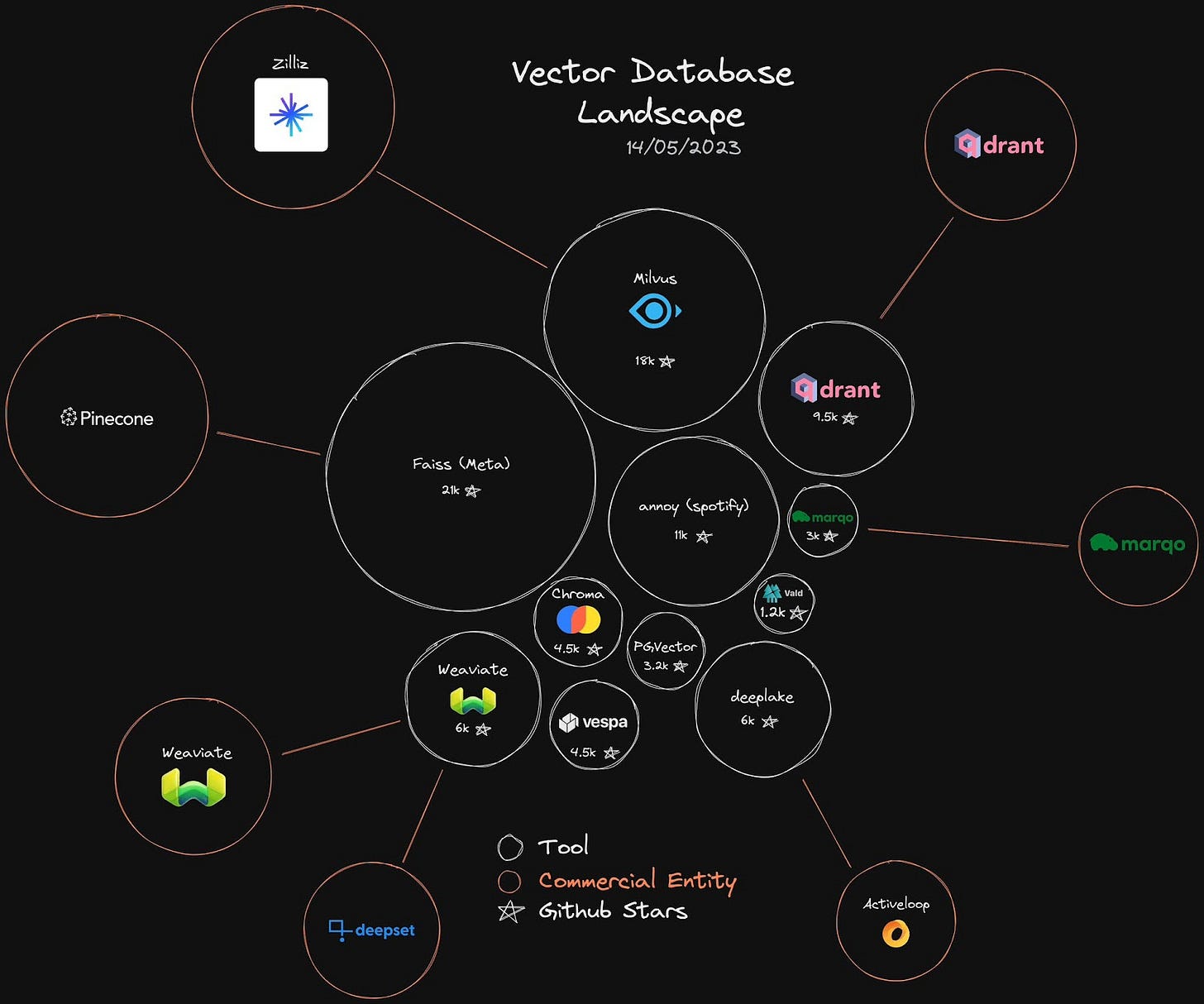

This less-discussed third method relies on transforming one’s own database into a series of vector embeddings, an approach expected to gain traction in the market. While it requires careful consideration given the plethora of options available, it essentially involves converting the data into numbers, so that similar entities end up grouped together because their vectors sit close to one another in the vector space. By employing vector databases, organizations can match a query to the most relevant documents in their own databases. The selected documents can then be supplied as context in prompts to the public models. This preserves a degree of data privacy: the private database itself stays inside the company and is not used to train the public model, and only the retrieved snippets are sent along with each query. More on vector databases later, but as you can see, data is incredibly important to the training and success of AI/ML models. That is an important consideration when evaluating winners and losers.
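To make the vector-embedding idea concrete, here is a minimal Python sketch of the retrieval step. It is an illustration under loud assumptions, not any vendor’s implementation: `embed` is a toy bag-of-words counter standing in for a real embedding model, the three documents are hypothetical, and a plain dictionary stands in for a vector database. Documents are converted to vectors once, the query is converted the same way, and cosine similarity picks the closest document, whose text is then pasted into the prompt for a public model.

```python
import math
from collections import Counter

# Hypothetical internal documents standing in for a company's private database.
DOCUMENTS = {
    "refund-policy": "customers may request a refund within 30 days of purchase",
    "shipping-times": "standard shipping takes five to seven business days",
    "warranty-terms": "the warranty covers manufacturing defects for one year",
}


def embed(text: str) -> Counter:
    """Toy embedding: a bag-of-words count vector.

    Assumption: a real system would call an embedding model that returns
    a dense vector of floats; word counts are used here only for illustration.
    """
    return Counter(text.lower().split())


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse vectors (1.0 = same direction)."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# "Index" step: convert every document to a vector once, up front.
INDEX = {doc_id: embed(text) for doc_id, text in DOCUMENTS.items()}


def retrieve(query: str, k: int = 1) -> list[str]:
    """Return the ids of the k documents closest to the query in vector space."""
    q = embed(query)
    ranked = sorted(INDEX, key=lambda doc_id: cosine_similarity(q, INDEX[doc_id]), reverse=True)
    return ranked[:k]


def build_prompt(query: str, k: int = 1) -> str:
    """Paste only the retrieved snippets into the prompt sent to a public model."""
    context = "\n".join(DOCUMENTS[doc_id] for doc_id in retrieve(query, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    print(retrieve("how many days do I have to get a refund"))  # ['refund-policy']
```

Note that the retrieved snippet itself does travel with the prompt; what stays in-house is the rest of the database and the index.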

The market, unfortunately, is riddled with hyperbole, as companies often repackage existing products in an attempt to stay relevant. Creating effective models is an arduous process, despite seemingly effortless demos. These models have the potential to disrupt entire industries and lift up companies through cost cutting aptly comparable to that brought about by the internal combustion engine. However, these benefits are further down the line and require substantial execution to obtain. The key to realizing the potential of AI models lies in integrating them into operational frameworks and datasets that can generate results, preserve history and span large organizations, and in being able to afford the costs of training, inference and integration. If the field is too competitive, or the barrier to entry is lowered by AI, potential winners may turn out to be losers.

Evaluating Vulnerability/Resilience in Industries and Subsectors

To get started, we’ll go from the sector level down. We’ll need to develop a rubric to address whether specific subsectors or business models are likely to face disruption or lack a moat against artificial intelligence and machine learning, and whether they will be able to implement and benefit from the opportunities it presents or will find themselves spending more and earning less due to the threats it represents. This will help to narrow down the field so we can utilize a company level framework. Some key considerations are:

1. Industry Threat

Less Barrier to Entry for Competitors: AI has the potential to lower the barriers to entry in many industries. This could increase competition and dilute market share for existing players. We should assess how vulnerable each company's industry is to new entrants facilitated by AI technologies.

Reductions in Volume Due to AI Tools: AI can automate many tasks, potentially reducing the need for some services. We should evaluate the potential for AI to decrease demand for each company's offerings.

Uniqueness & Fungibility of Dataset: AI functions on data; without it, machine learning is relatively useless. A model is trained on data and can then be refined with new data gathered as it is used. I was recently told “Data is only valuable when it is unique and constantly changing.” In other words, companies with historical datasets in unique areas, which are difficult to approximate using other companies’ datasets, have a moat against disruption from AI/ML, because they are afforded extra time to integrate that internal data into their solutions. Companies without significant or unique data are much more likely to be disrupted by developments in AI/ML.

Customer Base Data Sensitivity and Viability of Providing AI Services to Clients versus Clients Having to Train and Maintain Their Own Models on Their Own Datasets: AI's effectiveness depends heavily on data. However, data security and privacy are major concerns. We should examine the trade-offs each company and its customers face between outsourcing AI services and developing in-house capabilities, especially considering the sensitivity of the data involved.

2. Threat to Value Proposition

Pricing Deflation Due to AI Penetration: As AI becomes more prevalent, it could drive down prices in many industries. We should assess how each company's pricing could be affected by widespread AI adoption.

Potential for AI to Reduce Value Add via Increased Efficiency: AI can significantly increase efficiency, potentially reducing the value of some services. In some areas, as AI improves customer service capabilities, customers' expectations are likely to increase. We should evaluate how each company's value proposition could be affected by AI-driven efficiency gains.

Costs Associated with Integrating AI: Integrating AI into existing processes can be costly and disruptive. We should assess the potential costs each company could incur in integrating AI, including financial, operational, and cultural costs.

Ability to Integrate and Scale Across All Affected Vectors: Successfully implementing AI requires integration across all parts of a business. We should evaluate each company's ability to effectively integrate and scale AI solutions across their operations.

Adjacency to Artificial Intelligence: Some companies may both suffer and benefit from AI tools, specifically consultancies focused on digital transformation. We should avoid classifying as potential losers those companies whose industry faces threats from more advanced analytics but that will also be helping other companies implement their own AI solutions, at least in the beginning. Companies such as Accenture, Tata Consultancy Services, Capgemini and Genpact are in this category. On the other hand, a company like Chegg may attempt to implement AI, but regardless of its success it will face severely increased competition because technology has already disrupted its model.

3. Legal and Regulatory Threats

Regulatory Compliance: With the rise of AI technologies, new regulatory standards are being introduced. We should assess how each company's operations might be affected by these regulatory changes and their ability to comply with them. This includes factors like data privacy regulations, AI ethics guidelines, etc.

Liability Issues: AI decisions can have real-world consequences, and it's not always clear who is responsible when things go wrong. We should consider the potential for increased liability issues with AI implementation and how each company is prepared to handle them.

4. Competitive Positioning

Differentiation in AI Capability: As AI becomes more prevalent, having a unique, effective approach can be a competitive advantage. We should assess how each company’s AI capabilities or strategies differentiate it from competitors within specific sectors that may be affected. While RELX has already begun integrating artificial intelligence into its legal offerings, including in intellectual property law, LegalZoom has done very little and finds itself in a precarious position: LLC formation is an easily automatable task with widely available public datasets. AI/ML may eat into its margins as tech-savvy customers, who have already demonstrated no need for the “human touch,” find simpler and cheaper alternatives that use artificial intelligence and GPT models to guide them through filing these documents on their own.

Partnerships and Alliances: Strategic partnerships can play a crucial role in gaining access to advanced AI technologies and expertise. We should evaluate each company's ability to form beneficial alliances in the AI space, including partnerships for data sharing, collaborative research, or technology licensing.

Need for continuous adaptation: The rapid pace of AI development means companies must continuously adapt and innovate. This can be costly and time-consuming, a difficult endeavor for a company already facing issues.

Customer Spending: AI/ML spending by businesses may reduce non-AI spending (on cloud, for example) and result in lower volumes and revenues for companies without attractive AI SKUs or whose products can be taken in-house using AI.

Need for high-quality, market-leading & unique data: Data is only valuable when it is unique and constantly changing. Companies that have amassed large amounts of historical data in areas where it is difficult to replicate (historically and going forward) have a moat against AI, or at least a valuable asset in the paradigm.

Sector Accessibility (Total: 35 points)

How automatable are the tasks within this sector by AI? (0 to 10 points)

How easily can AI lower the barriers to entry in this sector? (0 to 5 points)

Is there a high sensitivity of data in this sector, which could limit AI adoption due to privacy and security concerns? (-5 to 0 points)

How unique and constantly changing is the data that businesses in this sector can access? (0 to 10 points)

How extensive are the historical datasets that businesses in this sector possess? (0 to 5 points)

Has this sector already faced disruption due to technological advancements? (-5 to 0 points)

Value Proposition Threat (Total: 25 points)

How likely is AI to cause deflation in the sector’s product/service pricing? (0 to 10 points)

How might AI-driven efficiency gains disrupt the value proposition of services within this sector? (0 to 10 points)

What is the potential cost and disruption for the sector to integrate AI (financially, operationally, and culturally)? (0 to 5 points)

Regulatory Environment (Total: 20 points)

How prepared is the sector to handle potential liability issues related to AI implementation? (0 to 5 points)

How restrictive are current regulations for AI implementation in this sector? (0 to 5 points)

How might new regulatory changes around AI affect the sector? (0 to 10 points)

Market Dynamics (Total: 20 points)

How does AI technology offer differentiation within this sector? (0 to 10 points)

How capable is the sector of forming beneficial alliances in the AI space? (0 to 5 points)

Does the sector have the ability to adapt and innovate continuously given the rapid pace of AI development? (0 to 5 points)

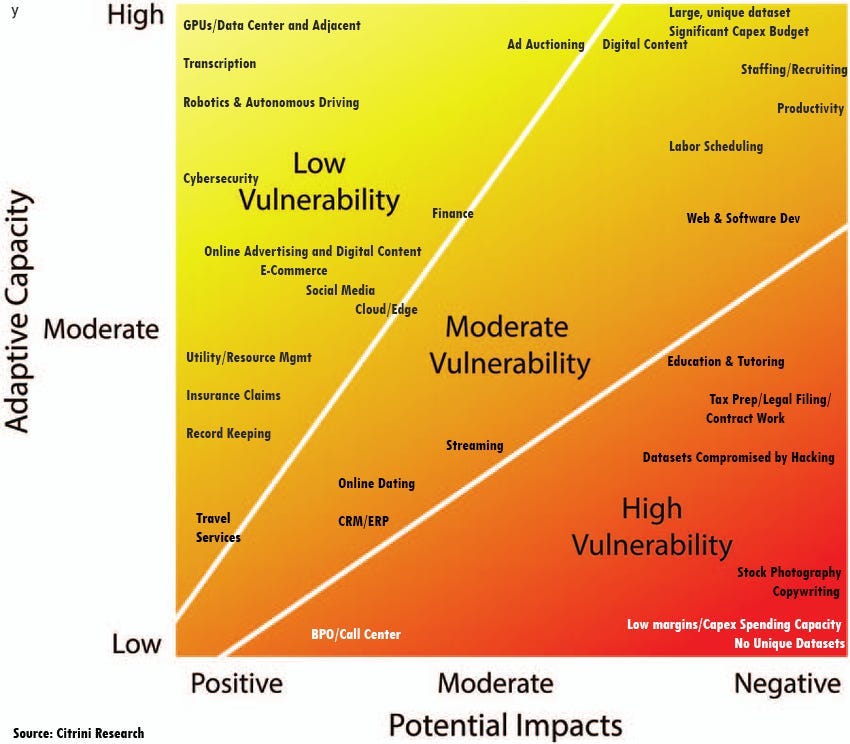

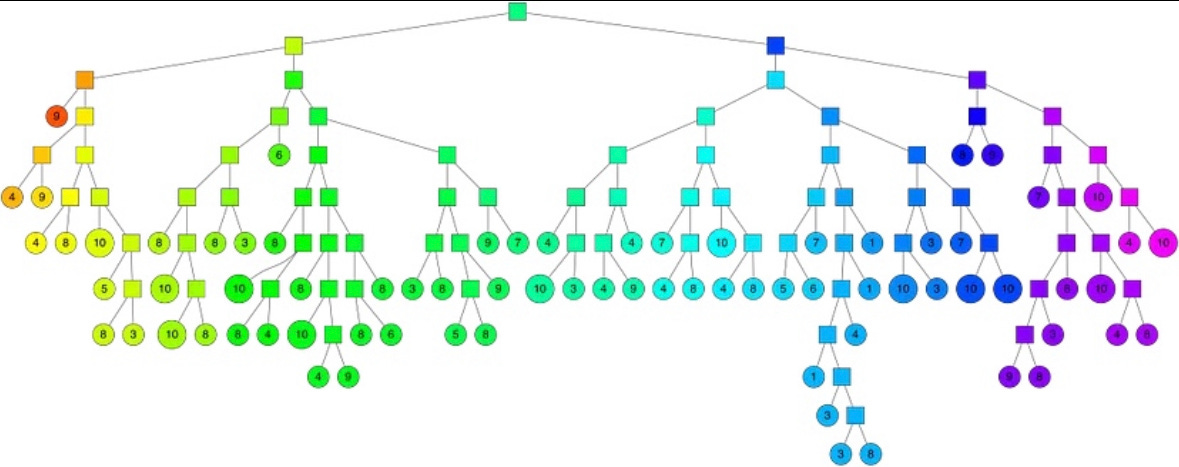

This initial, rudimentary framework assesses a sector’s susceptibility to AI disruption on a scale of 0 to 100, with a higher score indicating a lower potential for disruption. A score above 50 means the sector is very unlikely to be significantly affected; a score of 50 indicates a potential disruption that may be controversial (as there are benefits in certain areas); and a score below 50 indicates a high potential risk. Additionally, I have included certain characteristics of companies that make adopting or competing with AI more or less likely.
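The sector rubric can be sketched as a small scoring function. This is a minimal sketch, assuming each question is answered with a whole number inside its listed point range and that the category points are simply summed; the short question keys are hypothetical labels, not names from the companion spreadsheet. The classification mirrors the 50-point thresholds described in the previous paragraph.

```python
# Point ranges (min, max) per question, as listed in the sector rubric.
# The short keys are hypothetical labels for the questions in the text.
SECTOR_RUBRIC = {
    "Sector Accessibility": {
        "automatable_tasks": (0, 10),
        "lower_barriers_to_entry": (0, 5),
        "data_sensitivity": (-5, 0),
        "unique_changing_data": (0, 10),
        "historical_datasets": (0, 5),
        "prior_tech_disruption": (-5, 0),
    },
    "Value Proposition Threat": {
        "pricing_deflation": (0, 10),
        "efficiency_disruption": (0, 10),
        "integration_cost": (0, 5),
    },
    "Regulatory Environment": {
        "liability_preparedness": (0, 5),
        "current_regulation": (0, 5),
        "new_regulation": (0, 10),
    },
    "Market Dynamics": {
        "ai_differentiation": (0, 10),
        "alliances": (0, 5),
        "continuous_adaptation": (0, 5),
    },
}


def score_sector(answers: dict[str, int]) -> int:
    """Sum the points for every rubric question, checking each is in range."""
    total = 0
    for questions in SECTOR_RUBRIC.values():
        for key, (low, high) in questions.items():
            points = answers[key]  # raises KeyError if a question was left unanswered
            if not low <= points <= high:
                raise ValueError(f"{key}={points} is outside [{low}, {high}]")
            total += points
    return total


def classify(score: int) -> str:
    """Map a total onto the thresholds described in the text."""
    if score > 50:
        return "very unlikely to be significantly affected"
    if score == 50:
        return "potential disruption, controversial"
    return "high potential risk"


if __name__ == "__main__":
    # A hypothetical sector answered at the top of every range.
    best_case = {key: high for qs in SECTOR_RUBRIC.values() for key, (_, high) in qs.items()}
    total = score_sector(best_case)
    print(total, classify(total))
```

Validating each answer against its range keeps a single fat-fingered input from silently pushing a sector across the 50-point line.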

A Spectrum of AI Disruption, Risk and Benefit Across Company Characteristics

This provides an initial indication of what our broad framework will look like. We will need to get more granular based on use cases for AI to discover more sectors and determine whether controversial categories belong higher or lower. On an individual basis, capex spending, margins and similar factors for each company will be important.

The use cases for generative AI in software alone are significant:

Here is another, more dynamic way to view this:

Competition in the current state of the technology (chatbots, productivity tools, AI tools, etc.) makes businesses more likely to adopt. Now, let’s get a little more into the software side. Generative AI can have a significant, landscape-altering effect on software, positive or negative. In my opinion, this effect will be more pronounced in areas like web applications, no-code solutions and productivity software. But overall, competitive moat, sustainable growth potential, pricing power, TAM and gross margins are much more a matter of projection in software than in other areas of the market. So when something like generative AI arrives and changes the assumptions those projections are based on, it’s worth paying attention to what it changes. Already, AI is making DevOps look a lot different:

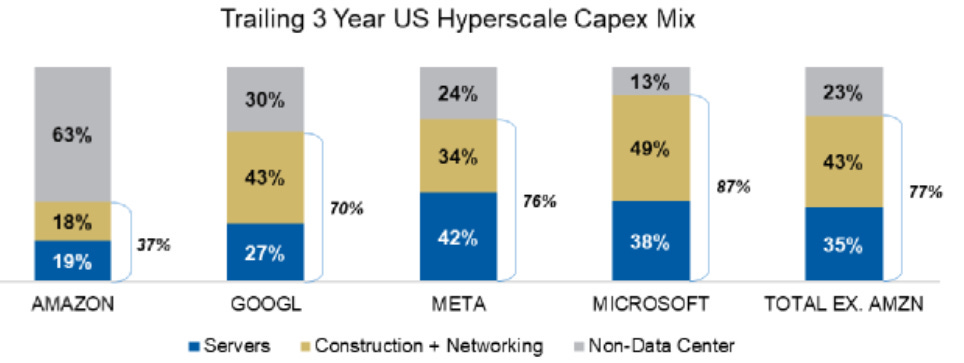

I think it’s worth reiterating what I said in the first piece: the simplest way to succeed playing this theme is with the data centers. They will be the beneficiaries of the dogfight detailed here and the associated capex spending. However, I think it’s also important to have a framework for viewing developments in Phases 2 and 3 as they occur.

Evaluating Vulnerability/Resilience in Individual Companies

Individual Company Rubric

Positive Questions:

Does the company possess a market-leading and unique historical dataset in one or more of the industries in which it competes? - A rich and unique dataset can provide competitive advantages and better AI/ML implementation. (8 points)

Does the company provide highly specialized solutions that are not easily replaced? - Highly specialized solutions result in highly specialized datasets and are less likely to be disrupted. (7 points)

Does the company have a strategy to leverage AI/ML to improve user experience and satisfaction within core products, premium SKUs or as a standalone offering? - A clear strategy indicates a commitment to harnessing the power of AI/ML for user experience improvement. (8 points)

Does the company have a history of successful AI/ML implementation or integration in its software solutions? - Past success in AI/ML usage demonstrates competency and reduces the risk of future implementation. (7 points)

Has the company mentioned AI/ML in earnings calls, conferences, presentations, press releases or materials prior to December 2022? - Public acknowledgment of AI/ML usage suggests awareness of and dedication to this technology. (6 points)

Does the company have a team or department dedicated to AI/ML? - A dedicated team shows the company’s commitment to incorporating AI/ML into its operations. (6 points)

Does the company offer market-leading solutions tailored for specific verticals? - Market leadership in specific verticals shows industry dominance, which can be reinforced by AI/ML. (5 points)

Does the company have sales infrastructure that is resilient and insulated? - A robust sales infrastructure can aid in the effective marketing and distribution of AI/ML-enhanced products. (5 points)

Does the company have an expected NTM R&D budget exceeding $500m (or 15% of projected capex)? - A significant R&D budget implies a commitment to innovation, potentially including AI/ML. (5 points)

Does the company have high enough gross margins and/or a strong enough cash position to handle the capital and operating expenditures associated with absorbing AI computing costs and scaling quickly? - Financial robustness can support AI investments and the associated expenses. (5 points)

Do the company’s AI/ML offerings or announced roadmap enhance its ability to expand pipelines at the top of the funnel via freemium versions? - This shows an intention to use AI/ML to attract more users and potentially convert them into paying customers. (4 points)

Does the company have international reach in many languages other than English? - This could signify a global user base, which could provide diverse data to improve AI/ML algorithms. (3 points)

Has the company made significant investments in AI/ML research and development? - Major investments indicate a company’s commitment to exploring and adopting AI/ML technologies. (5 points)

Does the company have existing strategic partnerships or alliances in the AI/ML space? - These can fast-track the company’s AI/ML advancements through shared knowledge and resources. (4 points)

Have these partnerships existed for longer than one year? - Long-standing alliances suggest stability and potentially successful collaboration on AI/ML. (2 points)

Has the company filed patent(s) related to AI/ML? - Patents could indicate a company’s innovation in AI/ML and its ability to protect its intellectual property. (2 points)

Does the company offer a competitive advantage through AI/ML in its solutions compared to competitors? - AI/ML could provide a competitive edge through superior products or more efficient processes. (5 points)

Does the company have a multi-faceted monetization opportunity? - Multiple revenue streams could provide stability and increased potential for profit, allowing more investment into AI/ML. (5 points)

Does the company have a market-leading customer base in one or more of the industries in which it competes? - A significant customer base provides a large market for AI/ML-enhanced products or services. (5 points)

Is the company’s customer base tech-savvy and likely to adopt AI/ML-driven solutions? - Tech-savvy customers are more likely to adopt AI/ML solutions, increasing the likelihood of successful AI/ML integration. (4 points)

Negative Questions:

Does a majority of the company’s customer base consist of consumers rather than businesses? - Business customers might be more willing to pay for sophisticated AI/ML-enhanced products or services than individual consumers. (-7 points)

Is the company more likely to have higher levels of disintermediated data relative to peers due to a history of M&A? - This could lead to inconsistent or low-quality data, hampering AI/ML development. (-5 points)

Do the company’s offerings facilitate transactions (as opposed to workflows)? - Companies that focus on transactions might be less likely to benefit from AI/ML, which can be more beneficial for workflow optimization. (-5 points)

Does the proliferation of generative AI have the potential to depreciate the company’s total addressable market via seat count, margin or pricing power deterioration? - If AI capabilities become widely available, it could reduce the uniqueness of a company’s offering. (-6 points)

Is the company heavily reliant on a single or a few major customers who are hesitant or slow to adopt AI/ML solutions? - This could slow the company’s AI/ML progress and limit the benefits it gains. (-6 points)

Does the company’s current technological infrastructure lack support for the integration of AI/ML capabilities? - Without this support, the company might face technical obstacles to AI/ML implementation. (-5 points)

Is the company’s regulatory environment restrictive towards the use of AI/ML technologies? - Regulatory constraints could limit a company’s ability to implement AI/ML. (-5 points)

Is the company facing talent shortage or high turnover in the AI/ML department? - This could delay AI/ML projects and limit the company's ability to innovate.

Are the company's AI/ML initiatives driving up costs without corresponding increase in revenue? - If AI/ML is not leading to increased profits, it might not be a worthwhile investment.

Does AI/ML potentially lower the barrier to entry for competitors? - Increasing competition in a highly VC-funded area is a threat. (-5 points)

Does the company face significant competition from companies already excelling in AI/ML? - Strong competition could reduce the benefits the company gets from its own AI/ML efforts. (-5 points)

Do other companies possess datasets that are of similar quality, specificity and value to the company’s data? - If competitors have similar data, this could diminish the company’s competitive advantage in AI/ML. (-6 points)

Has the company encountered any significant security breaches that compromised a majority of its historical data? - Security breaches can damage the company’s reputation and the quality of its data, hindering AI/ML development. (-5 points)

Is the company’s cost of capital higher than 10%? - A high cost of capital could limit the company’s ability to invest in AI/ML. (-4 points)
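The individual company rubric works as a weighted checklist: each “yes” adds the question’s weight, and for the negative questions that weight is subtracted. The sketch below uses a subset of the weights listed above under hypothetical short keys. It assumes the raw weighted sum is compared directly against the “Score < 30” cutoff used for the standout losers; the article does not spell out how raw points map to that cutoff, so treat the comparison as illustrative.

```python
# Weights for a subset of the rubric questions; positive questions add points,
# negative questions subtract them. Keys are hypothetical shorthand labels.
COMPANY_WEIGHTS = {
    "unique_historical_dataset": 8,
    "highly_specialized_solutions": 7,
    "ai_strategy_for_core_products": 8,
    "ai_track_record": 7,
    "mentioned_ai_before_dec_2022": 6,
    "dedicated_ai_team": 6,
    "consumer_majority_customer_base": -7,
    "fragmented_data_from_m_and_a": -5,
    "facilitates_transactions": -5,
    "genai_shrinks_tam": -6,
    "ai_lowers_barrier_to_entry": -5,
    "competitors_have_similar_data": -6,
    "cost_of_capital_above_10pct": -4,
}

# Cutoff from the "Score < 30" heading; applying it to raw sums is an assumption.
LOSER_CUTOFF = 30


def score_company(answers: dict[str, bool]) -> int:
    """Add the weight of every question answered 'yes'; 'no' contributes nothing."""
    unknown = set(answers) - set(COMPANY_WEIGHTS)
    if unknown:
        raise KeyError(f"unrecognized questions: {sorted(unknown)}")
    return sum(COMPANY_WEIGHTS[question] for question, yes in answers.items() if yes)


def is_potential_loser(score: int) -> bool:
    """Flag companies scoring below the cutoff."""
    return score < LOSER_CUTOFF


if __name__ == "__main__":
    # A hypothetical consumer-facing company with a shrinking, easily contested TAM.
    example = {
        "consumer_majority_customer_base": True,
        "genai_shrinks_tam": True,
        "ai_lowers_barrier_to_entry": True,
        "dedicated_ai_team": False,
    }
    s = score_company(example)
    print(s, is_potential_loser(s))
```

Rejecting unknown keys is a deliberate choice: a typo in a question name would otherwise be silently ignored and inflate or deflate the score.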

The question of profit margins and capex potential is especially potent in software, where reinvesting into growth is the key point. Walking a fine line between “all-in on the latest hype” and “corporate FOMO” could be key. Morgan Stanley shows what looks like an inflection point. More than half of the respondents are increasing their AI implementation but there’s an even split on increasing spending. Will the ones who get aggressive now end up winning? That’s unclear, but the ones that have the option to are certainly much, much better positioned.

Companies with significant R&D resources are ideally positioned to leverage AI/ML opportunities, as these resources allow for the development of proprietary AI models, talent acquisition and investment in data engineering. Developing unique AI models can bolster a company’s competitive advantage, particularly for larger firms with an established AI reputation, whereas smaller vendors may find more strategic value in using third-party models. Furthermore, attracting elite AI talent often depends on a company’s AI leadership and reputation, with top-tier AI experts generally favoring well-established firms with greater financial means. Some software companies that will spend more than $500m on R&D in the next year are (in order of size): Datadog, Twilio, Unity, Autodesk, Shopify, ServiceNow, Workday, Intuit, Adobe, Salesforce, Oracle, Microsoft.

I believe this could be the kind of golden opportunity for long/short investing that allows you to keep on a pair trade for a very long time (think…long Amazon short Etsy?). I’m going to suggest a few potential pairs at the end of this (although they may not be suitable to put on right this moment, they’re worth keeping on your market minder).
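For readers less familiar with the mechanics, a dollar-neutral pair trade pays on the relative performance of its two legs rather than on the market’s direction, which is why it brings down net exposure. The sketch below uses hypothetical prices (not actual quotes for any ticker mentioned here) and, as a simplifying assumption, ignores borrow costs, dividends, commissions and rebalancing.

```python
def exposures(long_notional: float, short_notional: float) -> tuple[float, float]:
    """Return (gross, net) exposure for a long/short book."""
    gross = long_notional + short_notional
    net = long_notional - short_notional
    return gross, net


def pair_pnl(
    long_entry: float,
    long_exit: float,
    short_entry: float,
    short_exit: float,
    notional: float = 100_000.0,
) -> float:
    """P&L of equal-notional long and short legs (hypothetical prices).

    Ignores borrow fees, dividends, commissions and rebalancing.
    """
    long_pnl = notional * (long_exit / long_entry - 1.0)
    # A short position gains when the price falls.
    short_pnl = notional * (1.0 - short_exit / short_entry)
    return long_pnl + short_pnl


if __name__ == "__main__":
    print(exposures(100_000.0, 100_000.0))  # (200000.0, 0.0)
    # Market falls: the long drops 10%, the short drops 20%; the pair still makes money.
    print(round(pair_pnl(100.0, 90.0, 50.0, 40.0)))  # 10000
```

The second example is the point of the structure: the “winner” lost money, but because the “loser” fell further, the pair made money even though both legs were down.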

I think it’s important to look at whether companies are being reactive (mentioning AI for the first time now that ChatGPT is in the public zeitgeist) or proactive (having prepared for this). Here’s the CEO of Yext talking about the kind of difference that makes:

Potential Standout AI Losers (Score < 30)

Standalone Shorts: The AI Losers

Using our earlier framework, I narrowed the field to a universe of about 150 names and then scored them. Here are the names that scored lowest, which may be worth avoiding as longs due to the negative effects of AI, or potentially shorting, provided there are other justifications:

BPO/Call Center Basket (Conduent, Teleperformance, IBEX, TaskUs, Concentrix): Companies like Conduent, Teleperformance, Concentrix, Startek, IBEX and TaskUs provide outsourced customer engagement and business process outsourcing (BPO) services that are still heavily reliant on human agents. They have large workforces handling customer service, technical support and back-office functions through call centers, email and chat.

These companies have limited proprietary AI offerings for automation and intelligent virtual agents. They lag technology firms in developing conversational AI, natural language processing, and other automation tools that could mitigate the need for human agents over time.

As AI capabilities in areas like voice and language continue to rapidly advance, more responsibilities handled by call center reps and BPO staff could be automated. This could substantially reduce demand for some outsourced services.

Conduent (CNDT) - Provides business process services including customer care, transaction processing, and finance & accounting functions. Known for large call center workforce. Minimal AI products for automation per company website.

Teleperformance (TLPDY / TEP FP) - Major call center operator handling customer service, technical support and other functions for clients. Core competency in managing large human workforces globally. No marketed AI offerings.

Concentrix (CNXC) - Leading global provider of outsourced customer engagement services. Partners with technology firms on some automation but lacks own proprietary AI solutions for intelligent virtual agents.

IBEX (IBEX) - A business process outsourcing provider for the customer lifecycle. Lifecycle services remain human-intensive. Does not promote AI automation offerings to reduce reliance on agents.

TaskUs (TASK) - Offers a range of customer experience and content security services to technology companies. Some partnerships with AI firms but no proprietary automation solutions marketed.

The key is identifying BPO/call center providers that have limited in-house AI capabilities and still depend largely on human capital, making them vulnerable to displacement by automation and generative AI. The bottom line is that the BPO/call center industry is going to be significantly negatively affected by an exponential rise in the capabilities of artificial intelligence. Even if one of these companies is able to consolidate market share in the few niche areas where human capital is still necessary in 5 years, the overall market is likely to be significantly smaller.

TripAdvisor (TRIP): AI-driven personal assistants could provide travel recommendations and bookings directly, potentially reducing the need for users to visit travel review and booking sites like TripAdvisor. TripAdvisor’s dataset is not as robust as Expedia's or Booking's, and it does not have the kind of capital required to compete in an area where AI is already being deployed. Expedia already has a ChatGPT plugin, and Booking.com has been implementing and utilizing data collection, NLP and, more recently, GPT, both internally and in its customer-facing search results. See the section below on long/short pair trades to capture the success of AI-capable companies (like BKNG) relative to AI-naïve companies (like TRIP) for more on one implementation of this trade, but I think TRIP should make for a good standalone “AI Loser Short”.

LegalZoom (LZ) and CS Disco (LAW): AI-powered legal tech platforms could automate many of the services that LegalZoom provides, such as creating legal documents, potentially reducing the demand for its services. LegalZoom and CS Disco are essentially “template filling services” that could already be replaced by non-AI tools; with generative AI, replacing them may become extraordinarily easy. Remember, LegalZoom disrupted the legal profession (in business filings and contracts), and disruptors get disrupted.

Chegg (CHGG), Pearson (PSO), and Scholastic (SCHL): AI could disrupt the education and publishing industries by personalizing and streamlining lesson plans and learning, potentially reducing the need for traditional textbooks and study aids. Chegg has already detailed why it will suffer, and for the same reason it is worth looking at Pearson and Scholastic (the threat is less immediate than ChatGPT's head-to-head competition with Chegg's tool, but AI can certainly threaten their business models). To avoid disruption, a business would need a product that is incredibly sticky and customers who are very loyal, so that they do not leave as AI increases competition but rather stick around as AI improves the core offering. A classic example of a business like this would be Duolingo (DUOL), which I think could provide a very good option for a pair trade on this theme against SCHL or PSO.

Shutterstock (SSTK) and Getty Images (GETY): AI technologies could create synthetic images or alter existing ones, potentially reducing the demand for the stock photos and other digital assets these companies provide. Shutterstock was considered a beneficiary early on, as the companies behind models like Stable Diffusion, Midjourney and DALL-E were expected to use its photos to train their models. However, the models are pretty much trained. And now I can generate any stock photo I want on Midjourney for $8 a month. The only things keeping Shutterstock alive are a) a general unawareness of how to best use these tools and b) unresolved questions around intellectual property.

Wix (WIX) and Squarespace (SQSP): AI-driven website builders could automate the process of website design and maintenance, potentially reducing the need for these platforms. No-code and low-code solutions in general could face fierce competition from AI.

Etsy (ETSY): AI could automate many aspects of e-commerce, including inventory management and customer service, potentially reducing the need for e-commerce platforms like Etsy. Amazon’s ecommerce platform benefits significantly from existing AI tools and Shopify has been eager to focus on AI initiatives; Etsy has not.

Fiverr (FVRR) and Upwork (UPWK): AI could automate many of the freelance tasks available on these platforms, such as graphic design or content creation, potentially reducing the demand for human freelancers.

Manpower Group (MAN) and Kforce (KFRC): AI could automate many aspects of the recruitment process, such as resume screening and initial interviews, potentially reducing the need for traditional recruitment agencies. While this is most likely a further-off development, I believe shorting these companies, which are levered to the business cycle via employment, is a prudent move with unemployment at secular lows and rates having been raised significantly.

Monday.com LTD (MNDY) and Asana (ASAN): AI could automate many project management tasks, such as task assignment and progress tracking, potentially reducing both the need for productivity and project management software and the barriers to entry for new competitors.

Smartsheet (SMAR): AI could automate many of the tasks currently done using spreadsheet software, such as data analysis and reporting, potentially reducing the need for platforms like Smartsheet.

Five9 (FIVN): As AI becomes more sophisticated, it could automate many of the tasks currently performed by call center agents, potentially reducing the need for call center software like Five9.

Expensify (EXFY): AI could automate expense management, including receipt scanning and expense categorization, potentially reducing the need for expense management software like Expensify.

Yext (YEXT): Yext's legacy knowledge management software for site search, listings, and business information faces disruption as AI search algorithms grow increasingly sophisticated. AI models can answer queries directly without traditional metadata and optimization. As search shifts from matching keywords to conversational AI assistants that can respond with accurate entity knowledge, Yext's structured data approach loses relevance. Lacking deep AI expertise or data assets, Yext cannot keep pace as machine learning commoditizes and replaces knowledge management capabilities, causing its products to become redundant. Yext is one of the names that has been bid up recently on misguided AI hype from buyers who truly have no clue what they are buying, which provides a very attractive short entry.

Expedia’s ChatGPT Plugin: Most people do not even know yet that these plugins exist, which shows how far public adoption has to go. Only 12% have used them!

The latest update to the AI Long/Short basket can be found here:

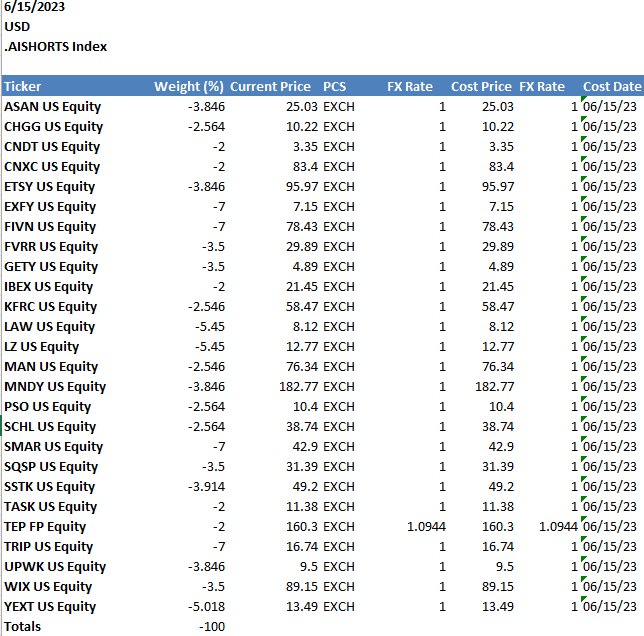

An example of how the standalone short basket would be weighted:

Relative Losers and Winners

Long/Short Ideas for Relative AI/ML Impact

Some people I speak to are very concerned about the stock market crashing, or how bad the macro is. To them I have said “why not just be short the index and then long a bunch of AI names”. Perhaps the better answer is “why not try to predict who wins AI and who loses (with effects on earnings within the next quarter or two) and go long/short those”. That seems a lot trickier, so I don’t recommend it over the first option, but if you wanted to, here are some ideas I think would be worth looking into:
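To make the net-exposure argument concrete, here is a minimal sketch of a dollar-neutral pair (equal dollars long and short). The function name and the returns below are hypothetical, chosen only to illustrate the arithmetic, and the sketch ignores borrow costs, commissions and dividends. The point it shows: if the whole market sells off but the AI winner falls less than the AI loser, the pair still makes money, because its P&L depends only on the spread between the two returns.

```python
def pair_trade_pnl(notional_per_leg, long_return, short_return):
    """P&L of a dollar-neutral pair: equal dollars long one stock and short another.

    Simplifying assumption: ignores borrow costs, commissions and dividends.
    """
    long_pnl = notional_per_leg * long_return
    # A short position profits when the stock falls, so its P&L is minus the stock's return.
    short_pnl = -notional_per_leg * short_return
    return long_pnl + short_pnl


# Hypothetical sell-off: the "AI winner" falls 10% while the "AI loser" falls 30%.
print(round(pair_trade_pnl(10_000, long_return=-0.10, short_return=-0.30), 2))  # 2000.0

# Hypothetical rally: the winner rises 30% and the loser rises 10%; the pair earns the same spread.
print(round(pair_trade_pnl(10_000, long_return=0.30, short_return=0.10), 2))  # 2000.0
```

Net market exposure here is zero ($10,000 long minus $10,000 short), which is why the outcome hinges on picking the right relative winner rather than on where the index goes.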

Long Bill (BILL) vs. Short Paychex (PAYX)

Bill.com is a leader in applying AI for automated back-office financial workflows including accounting, bill payment, invoicing and cash flow management. It leverages intelligent document processing and predictive analytics trained on large volumes of accounting and payments data. This allows Bill.com to optimize tasks like reconciliations, reporting, and forecasting in an automated fashion.

In contrast, Paychex provides traditional payroll, HR, and benefits administration services for small/medium businesses. It relies heavily on conventional software systems and human-driven processes to manage payroll and accounting functions. Paychex has been slow to integrate cutting-edge AI capabilities despite the need to automate complex financial workflows.

Bill.com's AI-enabled automation delivers significant efficiency gains, error reduction, and insights relative to Paychex's approach. Its platform also benefits from data network effects - as more customers use it, the AI models improve further. This powerful automation and scalability is attracting customers away from labor-intensive incumbents like Paychex.

Additionally, Bill.com's AI unlocks new revenue opportunities through value-added services like cash forecasting, scenario modeling, and tailored recommendations based on predictive analytics. Paychex lacks comparable high-margin offerings derived from AI's predictive and analytical capabilities.

Paychex relies heavily on traditional software systems and manual processes that are ripe for disruption by intelligent automation, while Bill.com's large datasets allow it to train AI models for predictive analytics and recommendations that optimize financial workflows in ways Paychex cannot match.

By reducing labor costs and errors while unlocking efficiency gains, Bill.com's AI solutions pose a direct threat to take market share from legacy vendors like Paychex still relying on human capital.

Paychex will need to invest heavily just to catch up on AI, as its capex and R&D spending has slowed, while Bill.com extends its technology lead and benefits from increasing returns to scale.

Financial process automation is a $20B+ TAM being transformed by AI - Paychex's exposure and lack of comparable AI expertise make it vulnerable relative to AI leaders like Bill.com.

As AI becomes table stakes for streamlining complex financial processes, Bill.com's leadership position, robust AI capabilities, and intelligent services provide strong upside potential and an edge relative to legacy vendors like Paychex still relying on human capital over AI capital. This competitive dynamic creates an attractive asymmetry to benefit Bill.com's growth and valuation multiple over Paychex.

BILL PT: $175; PAYX PT: $85

Long CCC Intelligent Solutions (CCCS) and/or Guidewire (GWRE) vs. Short Lemonade (LMND)

CCC Intelligent Solutions (CCCS) provides software and AI-powered analytics for the P&C insurance industry. Guidewire (GWRE) is increasingly incorporating AI and machine learning capabilities into its property and casualty insurance software solutions. These platforms integrate data from insurers, automotive OEMs, and repair shops to optimize claims processing and accuracy. I like this area of AI-analytics software providers for insurance as a “picks and shovels” play, while I strongly dislike Lemonade’s (LMND) business model (regardless of AI or not).

The Long Case:

CCC has accumulated over 1 billion insurance auto claims to train its AI for tasks like fraud prediction, automated damage assessment, and subrogation opportunity identification.

CCC uses computer vision on photos and deep learning on unstructured text notes to automatically extract repair operations and costs rather than relying on manual review. Machine learning optimizes CCCS's delivery of cloud services by predicting infrastructure needs and preventing outages.

Guidewire is using AI for predictive analytics - its InsuranceSuite can analyze past claims data to predict future loss patterns, informing pricing and reserving. Guidewire is using AI internally to optimize its cloud infrastructure utilization and costs.

AI gives these solutions providers a significant accuracy advantage in areas like claims adjustments over competitors and saves insurers processing costs. They are the picks and shovels of the insurance industry embracing machine learning and artificial intelligence as it scales.

Solutions providers like CCCS/Guidewire have massive datasets, deep insurance expertise, and extensive capital to invest in AI.

They can leverage AI for product pricing, claims automation, fraud detection - saving insurers money.

AI software augments rather than displaces insurance jobs, making adoption easier. AI models provide risk scoring to support underwriters and claims teams in decision making. This helps improve loss ratios.

Computer vision AI is being applied for faster processing and analysis of photos/videos from claims sites to quickly assess damage and claims.

Guidewire's apps currently utilize NLP to interpret adjuster notes, claims descriptions, and other text data to surface insights, and in chatbots embedded in Guidewire's user interfaces to handle common customer/agent inquiries. They have the capital to enhance this using GPT.

Additionally, Guidewire Marketplace offers insurers add-on AI apps for hyper-personalization, predictive modeling, data enrichment, and intelligent automation from AI partners.

As AI becomes more critical for insurance carriers, demand for CCC/Guidewire solutions will accelerate.

The Short Case:

Lemonade is an insurtech upstart competing against incumbents.

It lacks the massive datasets to train AI algorithms compared to long-time players. It is also suffering from the weaknesses in artificial intelligence as it attempts to fully automate the claims process, which leaves it exposed to shortcomings in existing AI models.

Lemonade's automated, app-based model aims to displace jobs, which creates adoption barriers. Large carriers partnering with CCC/Guidewire possess far more resources to invest in AI, allowing a smoother glide path for integration, while Lemonade faces the “technical debt” of any early adopter: it cannot keep up with innovation because it has already built out its offering with technology that is being rendered obsolete.

As AI becomes a requirement, Lemonade's technology gap could be exposed. The spread of open-source models and widely available models like GPT lowers barriers for new entrants and speeds commoditization, so Lemonade's tech advantage could diminish faster. GPT's availability lets incumbents tap advanced language capabilities at low cost to enhance customer experience and close any AI gap with Lemonade.

Given CCC's years of lead time in aggregating claims data and developing AI models for insurance workflows, Lemonade faces a meaningful gap. CCC's differentiated AI capabilities for reducing claims costs create durable competitive advantages. Guidewire focuses on back-office functions and has not commercialized AI specifically for the critical claims process like CCC has, but it could easily fit into an AI-enabled P&C process with its Salesforce integration and cloud-based delivery.

CCCS and Guidewire, as the likely “picks and shovels sellers” of any insurance industry AI adoption, do not face the myriad risks associated with attempting to implement an entirely new tech model for underwriting like Lemonade does. Potential losses in a negative scenario for Lemonade are huge, while the reward is minimized by increased competition as AI becomes more ubiquitous and open source (rendering most of its supposed “lead” negligible). Potential losses for CCCS and GWRE are not all that significant: they are already utilizing AI to some extent and can only reap upside by implementing more in an effective manner, yet if insurance remains reluctant to adopt this new technology, they are unlikely to see much in the way of negative impact. Meanwhile, they retain most of the optionality of providing these solutions as AI becomes more widespread.

Long Infosys (INFY) vs. Short BPO/Call Center Basket (described above)

Infosys has established itself as an AI leader among IT services firms, leveraging capabilities like intelligent automation, machine learning, and natural language processing to drive digital transformation for clients. As a major provider of business process outsourcing, Teleperformance remains dependent on large workforces to conduct manually intensive tasks from customer service to content moderation.

As AI capabilities in language, speech, and vision advance, Teleperformance's offerings are more susceptible to disruption from intelligent automation than Infosys' consultative services. Infosys' IP and expertise in AI positions it to consult enterprises on automation strategies that reduce reliance on outsourcing and labor arbitrage. Teleperformance's human capital-driven model faces shrinking addressable markets as AI replicates more outsourced functions.

This divergence presents an opportunity to gain exposure to an AI winner with proven capabilities while shorting a firm lacking differentiated AI assets. Infosys is poised to expand its transformation consulting as clients adopt intelligent automation, while legacy BPOs like Teleperformance face displacement threats.

Long Pega Systems (PEGA) vs. Short Appen (APX AU)

Pega Systems (PEGA) applies AI across its customer engagement, templatization and digital process automation platform. PEGA’s tools increase efficiency and conversion rates, and should also see upside from digitization as companies move massive and valuable datasets onto the cloud with increased haste so they can derive a competitive advantage via AI/ML. This contrasts with Appen's reliance on labor cost arbitrage: the growth of synthetic training data from AI reduces the need for the human data annotation services Appen provides.

Pega provides AI-powered customer engagement, workflow automation and decisioning software used by large enterprises to optimize operations. Its real-time predictive capabilities driven by machine learning give it an edge over rules-based systems. Pega spent over $400 million acquiring AI startups to augment its offerings, including Qurious.io and Everflow, and has significant relationships with Google and Celebrus. Its solutions directly compete with manual processes and legacy software.

Appen, meanwhile, relies heavily on a human crowd-sourcing model to provide training data for AI applications. As synthetic data generation and unsupervised learning advance, the need for manual data annotation declines. Appen also faces competition from tech giants offering their own data labeling services. It risks displacement as its human-intensive offerings are replicated by AI. Its business model has an expiration date, as eventually LLMs will be able to clean data as effectively as crowdsourced humans.

This presents an attractive asymmetry. Pega's tools help large companies automate workflows and improve customer service, and its solutions directly benefit from advances in machine learning. Appen's low-tech human labor approach is at risk of obsolescence from the same AI progress. As next-gen AI sees greater adoption, the gap between AI leaders like Pega and companies lacking AI IP like Appen should widen.

Long Amazon (AMZN) vs. Short Etsy (ETSY)

Amazon has vast data advantages to train advanced AI for ecommerce operations and offerings compared to niche players like Etsy. The massive volume of customer transactions, browsing data, seller data, and supply chain flows available to Amazon allows it to develop highly accurate predictive models for recommendations, forecasting, pricing, fraud detection, logistics optimization and more leveraging techniques like deep learning.

These huge and diverse data assets are extremely difficult for smaller ecommerce providers to replicate. Additionally, Amazon's technical resources for developing advanced AI and machine learning are far greater than Etsy's, given that Amazon employs thousands of top engineers and scientists while smaller players like Etsy have far smaller teams.

Amazon's leadership in AI-driven automation also allows it to reduce costs and offer the most competitive prices and Prime delivery speeds, outpacing the operational efficiency of competitors still relying on manual processes who cannot match Amazon in personalized recommendations, predictive analytics, inventory optimization or automation. This is likely why Shopify abandoned its logistics ambitions - a smart move that will not result in spending money against Amazon (who will always win in a spending fight).

Long Booking (BKNG) and Expedia (EXPE) vs Short TripAdvisor (TRIP):

Booking and Expedia leverage large volumes of travel data to personalize recommendations and predictively price listings via AI. TripAdvisor lacks comparable assets.

AI chatbots lower customer service costs for Expedia/Booking versus TripAdvisor's reliance on call center agents and high labor costs.

TripAdvisor depends heavily on ads and promotions to drive traffic. Expedia and Booking use AI-driven SEO and dynamic pricing to attract consumers more efficiently.

Differing View:

Inevitability Research dissents on the implementation of this trade, preferring to be long Booking and short both Expedia and TripAdvisor.

Long Workday (WDAY) vs. Short Monday.com (MNDY)

Workday offers complete HR and financial management suites optimized for machine learning and AI. Monday.com is a more basic customizable work OS lacking integrated intelligence.

Workday's full enterprise dataset allows training of predictive analytics and modeling not possible with Monday's fragmented information.

Workday will benefit more from intelligent process automation displacing financial tasks while Monday is exposed to consumer no-code tools.

Long RELX (RELX) vs. Short CS Disco (LAW)

RELX's massive repository of legal, scientific, and medical content is far superior for training AI applications compared to CS Disco's small litigation dataset.

RELX can leverage AI for legal analytics, research, eDiscovery and other services while Disco simply does search for cases.

Disco's valuation prices in AI potential that RELX, with its unparalleled content assets, can actually realize.

Long Pure Storage (PSTG) vs Short Nutanix (NTNX)

Pure Storage's FlashBlade and AIRI solutions are purpose-built for AI, providing high performance and scale for large datasets and intensive workloads. Nutanix HCI is more general purpose.

Pure Storage excels at handling the volume, velocity, and variety of data that AI applications require. Nutanix provides more balanced capabilities.

Pure's all-flash storage can deliver higher throughput for rapidly processing AI data compared to Nutanix's architectures that still incorporate disk.

Pure's AIRI integrates tightly with NVIDIA GPUs and InfiniBand for ready-to-deploy AI infrastructure. Nutanix's GPU support is less tightly integrated.

Nutanix provides greater flexibility to run AI alongside other workloads and simplify management. Pure is more AI-centric.

For intensive training or inferencing at scale, Pure Storage appears better positioned to handle AI-specific demands. Nutanix offers more general data center consolidation.

Long Informatica (INFA) vs Short SAP

As a dedicated data integration platform, Informatica offers greater capabilities to prepare, cleanse and manage data for AI/ML.

Informatica's CLAIRE engine allows auto-generation of data pipelines, while SAP relies on manual mappings and coding.

Informatica focuses solely on data management while SAP splits focus across apps, databases, analytics etc.

Long Accenture (ACN), short Genpact (G):

Accenture employs significantly more AI talent and invests more heavily in AI capabilities than Genpact.

Accenture leverages industry data from consulting clients to develop vertical AI solutions with higher margins than horizontal BPO services.

Demand for Accenture's AI transformation services should see stronger growth than Genpact's more labor-driven BPO model.

Long Datadog (DDOG); short Splunk (SPLK):

Datadog provides monitoring/analytics for cloud-native workloads powered by AI, whereas Splunk is focused more on legacy IT data. As AI utilization grows, Datadog's solution could see wider adoption vs. Splunk.

Long Innodata (INOD) & Dropbox (DBX) / short DocuSign (DOCU):

Innodata is making a significant push to provide services for companies looking to implement AI. Just look at who the first result is when you search “fine tune GPT model”:

Dropbox utilizes AI for features like search, security, and document workflows, whereas DocuSign simply provides e-signature services. Dropbox's breadth of business data offers more potential to leverage AI across knowledge management use cases than DocuSign's narrower focus.

Innodata benefits from increasing demand for data annotation for AI, while Dropbox aims to leverage AI to improve its core file sharing and collaboration products. DocuSign could face potential disruption from AI capabilities in process automation and document analytics. For example, AI-powered tools may be able to automate contract markup, analysis, and extraction - reducing the need for some of DocuSign's offerings.

AI enables Dropbox to meet broader enterprise needs and make its platform stickier than point solutions like DocuSign.

Long Salesforce (CRM), short Alteryx (AYX)

Salesforce employs significantly more AI researchers and invests more in AI than Alteryx, and it has far more capacity for capex spending to scale AI in Tableau. Once scaled, the analytical and data visualization capabilities of generative AI will make it difficult for AYX to compete.

Salesforce's Einstein platform is infusing all of its CRM applications with AI capabilities that go beyond Alteryx's data prep and analytics tools.

The boost Einstein provides to Salesforce's margins and growth exceeds what Alteryx's niche AI use cases can deliver.

Long Quality CDN & Cybersec (PANW, CRWD, FTNT, NET); Short SolarWinds (SWI), OKTA, SentinelOne (S)

Leading CDN and cybersecurity firms are aggressively utilizing AI for things like predictive threat detection, automated response, and intelligent traffic management. Their platforms are purpose-built to leverage large volumes of security data.

SolarWinds, Okta and SentinelOne have experienced high-profile security breaches recently, undermining confidence in their AI-driven protection capabilities versus more established vendors.

Palo Alto, Fortinet and CrowdStrike have robust datasets and dedicated AI teams to train cybersecurity models. Smaller firms lack comparable resources.

Cloudflare and Akamai have inherent data advantages in running global CDN networks. Their scale allows them to detect threats and optimize traffic delivery via AI in a way niche players cannot match.

As cyber attacks increase in frequency and sophistication, advanced AI becomes crucial for effective defense and resilience. This favors incumbents with more experience and data over upstarts.

Market share gains powered by AI provide avenues for superior growth and margins for CDN/cyber leaders relative to vendors with high-profile breaches and unproven AI capabilities. Like other trades, the leaders are longs because they have distinct AI-driven advantages to sustain growth and profitability in the security market while emerging vendors face steeper challenges to realize the potential of AI in this space.

Long Clarivate (CLVT) vs Short Udemy (UDMY)

Clarivate offers AI-driven analytics, workflows, and insights for scientific research. Udemy just aggregates basic video courses.

Clarivate has massive publisher and patent datasets for training AI algorithms while Udemy lacks proprietary data.

Udemy faces greater threat from AI-generated educational content while Clarivate's solutions leverage AI capabilities.

An Example of how the AI Relative Winners/Losers Long Short Basket might be weighted

Long Angi vs. Short Upwork and/or Fiverr (FVRR better as standalone short)

LLMs like ChatGPT can already generate content, copy, translations and other text-based services that freelancers on UPWK/FVRR provide. This could displace significant segments of their market. If automation replaces enough services on Upwork/Fiverr, it could depress wages for remaining human providers as competition increases. Angi's providers may avoid this pressure on earnings.

Basic coding and web development tasks common on UPWK/FVRR also face risk from AI code generation. Angi's services, like plumbing and electrical work, are not easily automated. Generative AI models are likely to advance further, but they can already produce some of the creative outputs, like logos, posters and ads, that designers on UPWK/FVRR are currently hired for. It is not unreasonable to think they can do the entire job within a couple of years. Angi's services remain sheltered from this AI threat vector.

While Angi could potentially use AI for better provider matching, the nature of the services being higher-touch manual work protects the underlying demand. Upwork and Fiverr face greater zero-sum risk of replacement from AI itself, with FVRR facing the most existential threat as it focuses on less advanced skillsets like graphic design and copywriting (hence the name, hire someone for “five dollars”).

Network effects - As AI displaces providers on Upwork/Fiverr, it could shrink their networks and pool of talent available for matching, compounding the impact. Angi's provider ecosystem could remain more stable.

Market expansion - By lowering costs, AI could enable Angi to expand into additional home service categories that have been prohibitively expensive using human talent alone up until now.

Business model risks - Upwork and Fiverr's transaction-based model could suffer disproportionately from automation versus Angi's subscription revenues. Angi has a dataset that is relevant to the economy, both locally and internationally, and valuable to homebuilders and contractors, while Upwork and Fiverr’s data is likely to become outdated and not worth very much. Amazon’s Mechanical Turk already dominates the area where Upwork and Fiverr could pivot in order to gain convexity in terms of earnings to artificial intelligence trends.

Offense versus defense - Upwork and Fiverr may have to invest heavily in their own AI just to defend their businesses, while Angi can use AI purely to expand its opportunities.

I like FVRR and UPWK better as a standalone short, and I would not include this play in the L/S implementation, but it’s worth mentioning as a possibility.

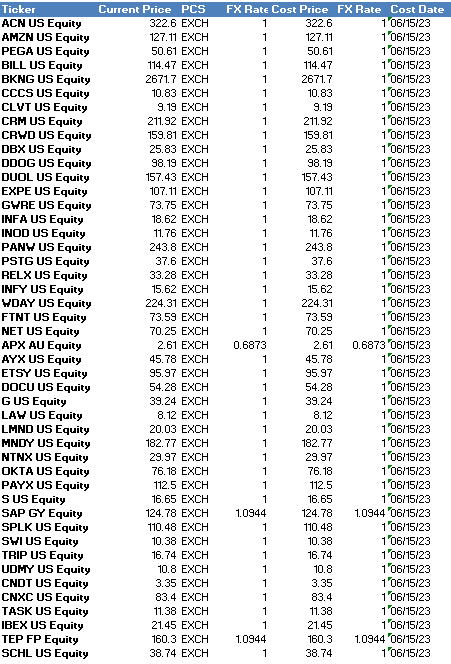

Here is the current AI Long/Short Basket I’m using from the above ideas.

It includes and excludes some ideas (in favor of running them as standalone shorts), but feel free to customize it yourself.

Winners and Losers in the Database

One more topic, not so much about losers but about what will enable the winners to be winners - it’s (yes, you guessed it) data. But more specifically, databases.

Now, MongoDB (MDB) has gotten some AI hype and that’s fine. MongoDB is a document-based database system that is commonly used to store and retrieve data in various applications, including those in artificial intelligence (AI). MongoDB itself is not an AI technology, but it can be an important part of an AI system's infrastructure as the “system of record” for vector embeddings.

In an AI application, you might use MongoDB to store things like user data, model information, training data, or the results of computations. However, the actual AI algorithms and models would likely be implemented using specialized libraries or platforms like TensorFlow, PyTorch, or Scikit-learn, which would then interact with the MongoDB database as needed. And the AI model is not going to interact with MDB directly in a way that results in more business for it; the data must first have a vector embedding. A vector database, also known as a vector similarity search engine, plays a crucial role in many AI applications, especially in machine learning, where high-dimensional vectors are used frequently.

UPDATE: I am adding this, which had not yet been announced when this article was being written: Introducing Vector Search in Azure Cosmos DB for MongoDB vCore for app developers looking to use AI capabilities.

And now another one with Google.[1]

A vector in this context is a numerical representation of an object (like an image, a text document, a sound, a user, an item, etc.) in a multi-dimensional space. This representation is often created by machine learning models, such as deep learning models for natural language processing or image recognition. Vector databases are used for unstructured and unharmonized data, and their role is to efficiently store these vectors and allow fast search operations on them. The most common operation is similarity search, which means finding the vectors in the database that are most similar to a given vector. The only way to guarantee you return the exact closest match is an exhaustive search that compares the query against every stored vector, which gets slow as the database grows. So, instead, vector databases settle for approximate matches: vectors that are substantially similar can be treated as interchangeable.
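To make “exhaustive search” concrete, here is a minimal Python sketch of exact similarity search: it scores a query against every stored vector using cosine similarity and returns the top matches. The three-dimensional vectors and their labels are made up for illustration (real embedding models output hundreds or thousands of dimensions), and the function names are hypothetical, not any vector database's API.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means they point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def exhaustive_search(query: list[float], store: dict[str, list[float]], k: int = 2) -> list[str]:
    """Exact top-k search: the query is scored against EVERY stored vector (O(N * d) work)."""
    scored = sorted(
        ((cosine_similarity(query, vec), label) for label, vec in store.items()),
        reverse=True,
    )
    return [label for _, label in scored[:k]]


# Toy 3-dimensional "embeddings" (hypothetical values)
store = {
    "cat photo": [0.9, 0.1, 0.0],
    "kitten photo": [0.85, 0.15, 0.05],
    "stock chart": [0.0, 0.2, 0.95],
}

print(exhaustive_search([0.88, 0.12, 0.02], store))  # → ['cat photo', 'kitten photo']
```

Because every query touches every vector, the work grows linearly with the size of the database, which is why production systems turn to approximate methods once the data gets large.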

For instance, in a recommendation system, user preferences and item properties can be encoded as vectors. When recommending items for a specific user, the system searches for items that have vectors similar to the user's vector.
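A minimal sketch of that recommendation step, assuming hand-made three-dimensional “taste” vectors (the dimensions, items and numbers are all hypothetical): each item the user has not seen yet is scored by the dot product of its vector with the user's vector, and the highest scores are recommended.

```python
def dot(a: list[float], b: list[float]) -> float:
    """Dot product: higher means the item lines up better with the user's tastes."""
    return sum(x * y for x, y in zip(a, b))


def recommend(user: list[float], items: dict[str, list[float]], seen: set[str], k: int = 2) -> list[str]:
    """Score every item the user has not seen yet and return the k best matches."""
    scored = sorted(
        ((dot(user, vec), name) for name, vec in items.items() if name not in seen),
        reverse=True,
    )
    return [name for _, name in scored[:k]]


# Hypothetical taste dimensions: [jazz, rock, spoken word]
items = {
    "jazz album": [0.9, 0.1, 0.0],
    "rock album": [0.1, 0.9, 0.0],
    "jazz history podcast": [0.6, 0.0, 0.8],
    "true crime podcast": [0.0, 0.1, 0.9],
}
user = [0.8, 0.1, 0.4]  # mostly jazz, some spoken word

print(recommend(user, items, seen={"jazz album"}))  # → ['jazz history podcast', 'true crime podcast']
```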

Think of a vector database as a huge library, and the AI as the librarian. In this library, every book (data) is transformed into a special code (vector) and placed on the shelves. The librarian (AI) knows exactly where each book is and can quickly find similar books based on these codes.

The books could be anything, like pictures, sound, or text, which are all turned into a special code (vector) so they can be easily stored and found. The better organized the library, the quicker and more accurately the librarian (AI) can find what they're looking for, whether it's recommending a new book, finding patterns, or spotting unusual codes.

Traditional databases are not optimized for this kind of operation, and that's where a vector database comes in. Examples of vector databases include FAISS (developed by Facebook AI), kdb+ (developed by FD Technologies) and Annoy (developed by Spotify), plus some other non-public solutions: Milvus (an open-source vector database), Zilliz and Pinecone, for example. FAISS and Annoy are open-source, but they demonstrate that META and SPOT are capable of developing tools for vector database management, and KDB.AI is a new product being released in Q3 2023. So while these companies aren’t making money directly from vector databases yet, they are the best poised to eventually.

Vector embeddings are the only way to utilize and search modern databases (which are ABSOLUTELY MASSIVE). Therefore, you can’t use AI without them. They are integral to both generative AI and machine learning (especially for keeping track of sensor data).

Vector Database Solutions (Long)

Meta Platforms META US

FD Technologies FDP LN

Spotify SPOT US

These three are good longs from the same perspective as the data center names: building blocks and enablers.

In summary, while MongoDB or SQL databases deal well with structured data (like tables or JSON documents), a vector database is needed when working with high-dimensional vectors. It embeds the data and builds “trees” by splitting the data into subsets, which enables more efficient storage and the faster, more precise searches often required in AI applications. For now, I think I have to classify MDB as “AI Hype”. That isn’t to say that it won’t benefit from this somehow down the road, but the idea that AI uses data and thus MDB will benefit is not quite accurate. There are better things out there, but I don’t think MDB is necessarily an AI loser.
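The “trees” idea can be sketched in a few dozen lines of Python. This is a toy random-split tree loosely in the spirit of the approach Annoy describes (pick two stored points, split the data by the hyperplane equidistant between them, recurse), not Annoy's actual implementation; the function names, leaf size, seed and sample points are all hypothetical. A query walks down a single branch and only compares itself against the few points in the leaf it lands in, which is fast but can miss the true nearest neighbor.

```python
import random


def _side(normal: list[float], offset: float, vec: list[float]) -> float:
    """Signed position of vec relative to the hyperplane normal . x = offset."""
    return sum(n * x for n, x in zip(normal, vec)) - offset


def build_tree(points, leaf_size=2, rng=None):
    """Recursively split (label, vector) pairs with random hyperplanes until leaves are small."""
    rng = rng or random.Random(0)  # fixed seed so the toy tree is reproducible
    if len(points) <= leaf_size:
        return {"leaf": points}
    (_, a), (_, b) = rng.sample(points, 2)
    # Hyperplane equidistant from a and b: normal along (a - b), passing through their midpoint
    normal = [x - y for x, y in zip(a, b)]
    offset = sum(n * (x + y) / 2 for n, x, y in zip(normal, a, b))
    left = [p for p in points if _side(normal, offset, p[1]) < 0]
    right = [p for p in points if _side(normal, offset, p[1]) >= 0]
    if not left or not right:  # degenerate split (e.g. duplicate vectors): stop splitting
        return {"leaf": points}
    return {
        "normal": normal,
        "offset": offset,
        "left": build_tree(left, leaf_size, rng),
        "right": build_tree(right, leaf_size, rng),
    }


def approximate_nearest(tree, query):
    """Walk down one branch, then search exhaustively only inside the leaf reached."""
    node = tree
    while "leaf" not in node:
        node = node["left"] if _side(node["normal"], node["offset"], query) < 0 else node["right"]
    best_label, _ = min(
        node["leaf"],
        key=lambda p: sum((x - y) ** 2 for x, y in zip(p[1], query)),  # squared Euclidean distance
    )
    return best_label


# Hypothetical 2-D points forming three loose clusters
points = [
    ("a1", [0.0, 0.0]), ("a2", [0.1, 0.2]), ("a3", [0.2, 0.1]),
    ("b1", [5.0, 5.0]), ("b2", [5.1, 4.9]), ("b3", [4.8, 5.2]),
    ("c1", [0.0, 5.0]), ("c2", [0.2, 5.1]),
]
tree = build_tree(points)
print(approximate_nearest(tree, [4.9, 5.0]))
```

Libraries such as Annoy build many trees and pool the candidates from each to reduce those misses; a single tree is shown here only to keep the splitting idea visible.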

To quote the CEO of KX (owned by FD Technologies - FDP LN):

“We live in a world where the majority of information that exists is unstructured (text documents, social media feeds, chat streams, images and video files etc.), but if we ascribe vectors to this information we can start to manage it in new ways across all industries. Vectors enable us to go as granular as we need to for any given subject matter and list several hundred attributes for a chosen data object. Vector databases simplify the encoding of many dimensions and temporal time-series information will always be key among those dimension attributes. What I mean is, humans can not make sense of information unless it has a time element - think about weather, health, military defense, transport or business, all decision-making comes down to timing.”
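The point in the quote about time being a key dimension maps onto a common vector-search pattern: filter records by a time window, then rank whatever remains by similarity. A minimal Python sketch, assuming made-up two-dimensional vectors, labels and dates; the names are hypothetical and this is not KX's or KDB.AI's API.

```python
import math
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Record:
    label: str
    vector: list[float]
    timestamp: datetime


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def search_in_window(records, query, start, end, k=1):
    """Keep only records with start <= timestamp < end, then rank them by similarity to query."""
    in_window = [r for r in records if start <= r.timestamp < end]
    in_window.sort(key=lambda r: cosine(r.vector, query), reverse=True)
    return [r.label for r in in_window[:k]]


# Hypothetical weather/sensor "embeddings" with timestamps
records = [
    Record("storm warning (January)", [0.95, 0.05], datetime(2023, 1, 10)),
    Record("storm warning (June)", [0.9, 0.1], datetime(2023, 6, 1)),
    Record("clear skies (June)", [0.1, 0.9], datetime(2023, 6, 2)),
]

print(search_in_window(records, [1.0, 0.0], datetime(2023, 6, 1), datetime(2023, 7, 1)))  # → ['storm warning (June)']
```

Without the June window, the January record would rank first because its vector is slightly closer to the query; restricting by time changes the answer, which is the practical sense in which timing drives the decision.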

For the question that’s most relevant to the “AI Losers” discussion, I had to admit my shortcomings when it comes to software and ask for help, but it turns out that Sophie and I agreed on a lot of things anyway. My view looking forward is that I’m generally bullish hardware and bearish software due to AI, at least in the medium term.

Here are my priors:

AI/ML workloads are diverting budget from traditional servers towards specialized processors like GPUs. This is creating excess inventory of legacy hardware while fueling growth in AI chips and enablers of AI data centers.

Lower utilization of existing infrastructure due to efficiency gains means even less appetite for incremental CPU/memory capacity, accelerating the shift of budgets to AI.

The failure of vendors to transition customers to new, expensive platforms like Sapphire Rapids indicates a focus on cost optimization, not the latest technology. This benefits AI processors on advanced nodes.

Meta keeping capex stable reflects a decision to prioritize investment in AI over near-term capex reductions. Generative AI is seen as a major future opportunity.

Despite rapid market growth, AI inferencing and edge AI represent massive untapped opportunities beyond current training-focused demand.

Semiconductor TAM expansion will be driven primarily by AI, with that expansion reaching more than 50% of the current market size. This signals a massive silicon content boost from AI workloads.

Given these trends, companies like Pure Storage, Broadcom, Nvidia, and Marvell, with leadership in storage for AI models, GPU/AI accelerators, and edge processors, should be well positioned to see continued benefits relative to vendors like Nutanix, Intel, and Texas Instruments, which are more exposed to legacy hardware vulnerable to AI disruption.

Sophie: Does “AI eat Software”?


So for software, I’m thinking about it through a couple of frameworks. This thought exercise is meant to try to understand how the trend may ripple through the software landscape, and I should mention that, in general, I am skeptical of the durability of most public software companies as they stand today.

My gut says that a majority of the value in “AI” will accrue to the incumbents (hyperscalers) and that it will be a brutally competitive environment for software companies in the coming years as the AI arms race heats up. For the purpose of this thought exercise, I’m looking through a skeptical lens, with the “base case” being that most, if not all, of the value in “AI” accrues to the big companies that have been working in this space for a while (definitely before this hype cycle; bonus points if they mobilized around the transformer paper). I’m not sure the market is completely aware of just how talented the research teams at the big tech companies are, or of the compounding effect they will have vs. competitors, as other companies are now scrambling to build their own in-house research teams. This stuff is hard, really hard. And not just because it’s complex, but because the field is evolving rapidly every day & doing so in a way that makes it almost impossible for any one person to keep up. A brief plug for my own Substack: in my work, I am interested in trying to discover “the inevitable.” In other words, through all the hype & smokescreens, there’s an underlying reality that’s inevitably going to unfold.

Of course, nothing is certain in markets, but I want to do my best to take a scientific approach to building a worldview and constantly testing my hypotheses against reality. One thing that’s inevitable today is that OpenAI has a massive competitive advantage from commercializing ChatGPT. I do think that companies effectively using (modern) AI in products they are shipping today have the chance to compound this advantage and create a kind of snowball effect, capturing more and more value from an improving user experience (as a result of implementing AI) that scales faster than their costs. Companies that come to mind are Apple with the M2 (not only does it have the Neural Engine, but I would expect the chips to improve as Apple collects data & incorporates AI into the chip design process, a possible example of this compounding effect), Meta with its work in the open-source community, and Nvidia with its push to create vertically integrated software solutions on top of CUDA. I also wouldn’t sleep on Amazon or Google, as they certainly have the data and talent to capture similar value during this arms race. That brings me to a couple of frameworks I’m using to consider how this may play out. This one isn’t software, but I do think that long fabless vs. short IDMs & memory works, for example:

Long Nvidia, AMD, Broadcom, Qualcomm, Marvell, MediaTek; Short Intel, Samsung, Micron, SK Hynix

Oracle, Adobe, Autodesk, and VMware are all likely to benefit, as these tech providers are leveraging AI to enhance their cloud, infrastructure, design, content, and database products. Large volumes of vertical data strengthen domain-specific AI models and use cases relative to non-AI-enabled software vendors. Embedding AI delivers higher efficiency, lower costs, and new capabilities that drive faster growth than competitors without AI can achieve.

General framework: whether it’s value accruing to a company that successfully implements AI or costs scaling as a company attempts to catch up, does the relationship between cost and value scale linearly, quadratically, or exponentially?
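The scaling question above can be made concrete with a small Python check: given a value curve and a cost curve over some abstract measure of scale n (users, data, model size), find the first n at which one overtakes the other. Both scenarios and every constant below are hypothetical, chosen only to show that the growth rate eventually matters more than a head start in constants.

```python
from typing import Callable, Optional


def first_crossover(f: Callable[[int], float], g: Callable[[int], float], max_n: int = 10_000) -> Optional[int]:
    """Smallest scale n >= 1 at which f(n) exceeds g(n), or None if that never happens up to max_n."""
    for n in range(1, max_n + 1):
        if f(n) > g(n):
            return n
    return None


# Scenario 1 (hypothetical): value compounds exponentially, cost grows quadratically
# but starts with a large constant (think expensive GPU bills up front).
def winner_value(n: int) -> float:
    return 2 ** n


def winner_cost(n: int) -> float:
    return 100 * n ** 2


# Scenario 2 (hypothetical): value grows linearly, catch-up cost grows quadratically.
def laggard_value(n: int) -> float:
    return 50 * n


def laggard_cost(n: int) -> float:
    return n ** 2


print(first_crossover(winner_value, winner_cost))    # → 15  (value overtakes cost)
print(first_crossover(laggard_cost, laggard_value))  # → 51  (cost overtakes value)
```

Even with a 100x cost multiplier, exponential value overtakes quadratic cost at modest scale, while a business whose value grows only linearly is eventually swamped by quadratic costs, no matter how favorable it looks early on.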

Companies with high margins that can eat the cost of AI computing to provide a better experience vs. competitors & take market share in the long run (GPUs carry a significantly higher ASP than general server processing hardware and tend to consume more power)

Companies with deeply entrenched customers/user bases that can offer services similar to a competitor's or partner's to upsell existing products & squeeze competitors.

Not AI-specific, but look at what Microsoft did to Slack by bundling Teams with Office. A similar dynamic may play out where incumbents bundle AI solutions & squeeze competitors.

Subscription-based companies with R&D capacity vs. usage-based models in pricing wars. For example, if you have all this data growing at 2^n, why wouldn’t Google give you a sweetheart deal on BigQuery so you keep it all on GCP (undercutting Snowflake)?

Losers - lots of startups whose economics don’t work anymore once you consider the cost of computing for implementing AI models. I know it’s almost cliché, but “How are you going to compete with OpenAI?” is going to be a legitimate question for a lot of startups that are up against competitors that can offer whatever their product is as a loss leader or as a bolt-on to the incumbent’s existing product. You’ll need to do something genuinely novel at both the technology and business level to win as a startup in this rapidly evolving landscape.

The current economics favor the big companies that have already trained models and can afford the GPU bills. This advantage may even increase as inference & training become more of a continuous loop. These agents/models have long-term memories (this feels like another step-function leap: having a personal assistant for your research, for example, that remembers & learns as you interact with it). There are some calls for a more robust ecosystem, one that’s more democratized (the “democratization of AI” is phase two in the framework I suggested in part one of this series - Citrini).

Daniel Gross & Nat Friedman announced a GPU cluster for startups they’re funding.

Long companies with troves of valuable data on top of the service they enable vs. short commoditized data companies with no clear edge.

Legacy (“old”) companies with large (and sticky) install bases that can develop AI solutions on a timeframe in line with customer expectations (IBM?) vs. newer companies competing with more demanding customers & more competition for their business


Companies that focus on specific industry verticals can accumulate valuable domain-specific data assets. This data can be used to develop tailored AI models that provide unique insights and capabilities customized for that particular vertical. For example, a healthcare provider's patient records can train AI for precision diagnosis and treatment optimization.

In contrast, horizontal software aggregators like Salesforce, Oracle and SAP lack comparable access to concentrated data within a single vertical. Their platforms spread across multiple industries, limiting the depth of data for any one domain. Developing AI tools for general use cases across many verticals dilutes their ability to achieve specialized accuracy.

The combination of concentrated data and industry network effects creates self-reinforcing AI advantages for vertical players that aggregators struggle to replicate. As they get more customers in one area, their expanding data compounds to improve AI capabilities, attracting more customers and data. This virtuous cycle strengthens niche AI companies' ability to gain market share through superior domain-specific insights relative to horizontal vendors' generic offerings. Focused vertical data is key to maximizing AI's potential.

I think S&P, FactSet, Shopify, Visa, Mastercard, Paycom, Oracle, VMware, Autodesk, Adobe, and MongoDB are standalone longs.

I’m less certain about CME & ICE, and also about Adyen & Square. These may be longs.

I agree with Citrini’s comments in part one regarding being long the value chain for semiconductors enabling AI. For example:

Long EDAs (Cadence, Synopsys, Siemens), long semicap (ASML, Lam Research, KLA, Applied Materials, Tokyo Electron), long TSMC (and some of the other Taiwanese equipment providers and maybe even the Taiwanese dollar?!), long networking (Arista, Juniper, Cisco, Broadcom, MaxLinear, etc.), and long data centers & equipment providers (Equinix, Super Micro, SMART Global Holdings (SGH)), as well as some select equipment providers (Bel Fuse, SiTime, Fabrinet) and some packaging (CoWoS)/test companies (Amkor/ASE). Basically, this is a bet on demand for computing products being secular/structural. If you believe demand for compute & bandwidth needs to scale quadratically, this probably works, and it works even better if demand scales exponentially for a time.